Preoccupations

This section outlines my compositional preoccupations in two parts. The first, [2.1 Technology], outlines my engagement with technology in composition, and the second, [2.2 Aesthetics], discusses the aesthetic principles that guide my compositional tendencies and decision-making processes.

Technology

This research is concerned with my computer-aided composition practice using content-aware programs. It is therefore essential to outline my technological preoccupations, including software and development methodology, and the relationship that computing has to my creative workflow. Firstly, I outline the nature of computer-aided composition in my practice, establishing how my approach is novel in relation to wider convention. Secondly, I discuss the iterative development cycle that underpins the creation of new computer programs and the construction of artistic works as an entangled process. This will touch on notions of feedback in my compositional process as well as bricolage programming.

Computer-Aided Composition

Computer-aided composition software provides composers with tools and formalisms for the generation or transformation of musical material (Assayag, 1998, p. 1). Numerous software programs exist for computer-aided composition and no two programs service the exact same set of creative interests. These programs also carry the influences and intentions of the developers in their design, architecture and use which lends them to different compositional workflows and ways of approaching the creation of a musical work.

For example, OpenMusic (OM) facilitates the manipulation of data by assembling and connecting “functional boxes and object constructors” (Bresson et al., 2017, p. 1). A collection of objects and the connections between them form a patch where various data structures can be manipulated in order to generate and control musical information, for example, pitch, rhythmic durations or spectral envelopes. OM also possesses the concept of a maquette: “a hybrid visual program/sequencer allowing objects and programs to be arranged in time and connected by functional relations” (Bresson et al., 2017, p. 4). The maquette helps users impose dynamic temporal manipulations onto the connections between the objects that transform and generate data, giving another level of meta-control over those processes.

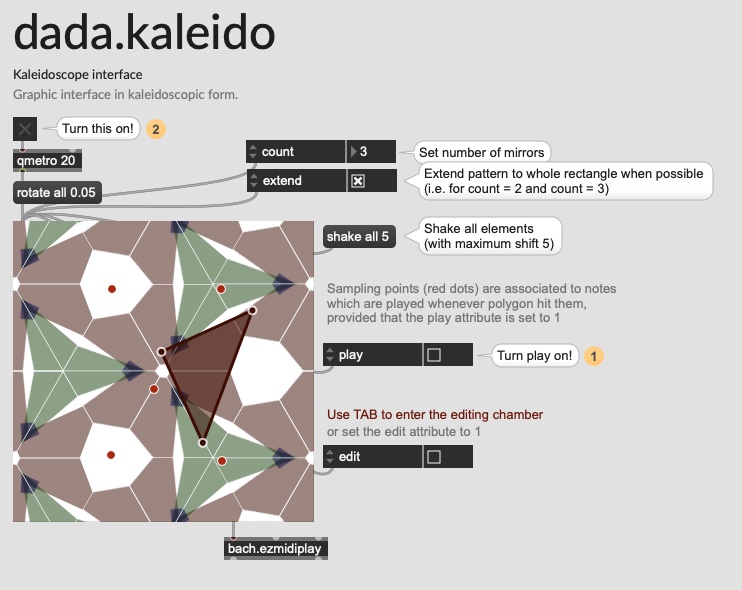

The Bach suite of Max packages (Bach, Cage, Dada) by Daniele Ghisi and Andrea Agostini facilitates a wide range of possibilities for computer-aided composition. The suite leverages Max and its visual programming style. As in OM, objects with specific functionality are connected together in order to transform data or to realise it through scores or audio playback. Bach is the core package in the suite, providing the primitives from which these types of operations are constructed. Cage builds on Bach by re-using much of the underlying code and packaging ready-to-use modules that deal with 20th and 21st century compositional techniques and concepts such as indeterminacy and chance. Dada is a more abstract set of objects that again builds on the underlying code from Bach, while bringing a number of non-standard graphical user interface objects to the fore. These visual entities are experimental in nature and offer novel metaphors for the manipulation of sound based on geometry, recreational mathematics and physical modelling (Ghisi, n.d.). IMAGE 2.1 depicts the dada.kaleido object, which emulates the shifting geometric patterns of a kaleidoscope. The data from this object can be used as triggers or controls for any number of musical parameters.

Strasheela is another piece of software for computer-aided composition that enables users to express musical ideas as “constraint-based music composition systems”. The user declares their theory as a set of constraints and the computer generates music that “complies with this theory” (Anders, n.d.). The Strasheela documentation provides an example in which a passage of music is generated that conforms to the rules of Fuxian first species counterpoint (http://strasheela.sourceforge.net/strasheela/doc/Example-Realtime-SimpleCounterpoint.html). In another example, a Lindenmayer system drives the parametric creation of musical motifs (http://strasheela.sourceforge.net/strasheela/doc/Example-HarmonisedLindenmayerSystem.html). It is evident even from these didactic examples that Strasheela is flexible and can produce both stylistically informed and more abstract musical structures: the user can direct the program to generate any musical output that can be expressed through a series of formalised constraints.
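The idea of declaring a theory as constraints and letting the computer find music that complies with it can be illustrated with a minimal sketch. This is not Strasheela code and does not reflect how Strasheela searches for solutions: it is a brute-force generate-and-test loop in Python, and the three rules, the function names and the short cantus firmus are all assumptions chosen for illustration, covering only a small fragment of first species counterpoint.

```python
from itertools import product

# Interval classes (semitones modulo 12) treated as consonant in this sketch.
CONSONANT = {0, 3, 4, 7, 8, 9}
PERFECT = {0, 7}


def consonant_throughout(cantus, line):
    """Every vertical interval must be a consonance."""
    return all((upper - lower) % 12 in CONSONANT
               for lower, upper in zip(cantus, line))


def no_parallel_perfects(cantus, line):
    """Forbid two successive unisons/octaves or two successive fifths."""
    intervals = [(upper - lower) % 12 for lower, upper in zip(cantus, line)]
    return not any(a == b and a in PERFECT
                   for a, b in zip(intervals, intervals[1:]))


def small_melodic_steps(cantus, line, max_leap=4):
    """Keep the generated melody within leaps of max_leap semitones."""
    return all(abs(b - a) <= max_leap for a, b in zip(line, line[1:]))


# The "theory": a list of rules that every solution must satisfy.
CONSTRAINTS = [consonant_throughout, no_parallel_perfects, small_melodic_steps]


def solve(cantus, pitches, constraints=CONSTRAINTS):
    """Return every candidate line that satisfies all constraints."""
    return [line for line in product(pitches, repeat=len(cantus))
            if all(rule(cantus, line) for rule in constraints)]


cantus_firmus = [60, 62, 64, 62]          # C4 D4 E4 D4 as MIDI note numbers
upper_pitches = [67, 69, 71, 72, 74, 76]  # G4 to E5, white keys only
solutions = solve(cantus_firmus, upper_pitches)
```

Adding or removing a function from `CONSTRAINTS` changes the set of admissible outputs without touching the search itself, which is the separation between declared theory and generated music that the constraint-based approach relies on.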

While the three pieces of software discussed here are by no means an exhaustive list, they show that there are a number of distinct approaches to building and using computer-aided composition software. Even between programs that are built on similar paradigms there are differences that mean one tool cannot simply supplant another. For example, Bach, OpenMusic and Patchwork (see Assayag et al., 1999) borrow or directly use data structures from the Lisp family of languages. In Bach this manifests as the “Lisp-like linked list”, while in Patchwork and OpenMusic the visual objects can be considered a visual representation of s-expressions, the building blocks of the underlying Lisp programming language.

Between such environments that leverage the same underlying data types and language features there are inherent similarities in the way that data is expressed, stored and communicated. However, there are fundamental differences in how that data is evaluated and operated on from both an architectural and user perspective. This has repercussions for how interactive each environment can be. Agostini and Ghisi explain this by comparing the Patchwork family of CAC environments (Patchwork, OpenMusic, PWGL) with Bach, and by describing how Bach’s implementation within Max affords increased interactivity within a composition workflow:

The difference between the two paradigms is crucial: if we assume that parameters are handled at the beginning of the process, a bottom-up process (like within the Patchwork paradigm) will ultimately be a non-real-time process, since parameter changes cannot immediately affect anything below them, unless some bottom-up operation is requested on some lower elements. Moreover, the Max paradigm, not having to depend on return values, easily allow [sic] for much more complexly structured patches: a single action can trigger multiple reactions in different operators (a function can call several other functions, one after another has returned). (Agostini & Ghisi, 2012, p. 374)
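One way to read this distinction is as the difference between demand-driven and data-driven evaluation. The following Python sketch is an illustrative model only: the class names are hypothetical, it is not code from Patchwork, OpenMusic, Max or Bach, and it ignores Max's actual message-ordering rules.

```python
class Parameter:
    """A value at the top of a demand-driven graph."""

    def __init__(self, value):
        self.value = value

    def evaluate(self):
        return self.value


class PullBox:
    """Demand-driven box: computes only when a value is requested from it."""

    def __init__(self, func, *inputs):
        self.func = func
        self.inputs = inputs
        self.last = None  # most recently computed value, possibly stale

    def evaluate(self):
        # The request travels upwards through the inputs before returning.
        self.last = self.func(*(box.evaluate() for box in self.inputs))
        return self.last


class PushObject:
    """Data-driven object: reacts as soon as a value arrives, then passes it on."""

    def __init__(self, func):
        self.func = func
        self.outlets = []
        self.last = None

    def connect(self, other):
        self.outlets.append(other)
        return other

    def receive(self, value):
        self.last = self.func(value)
        for target in self.outlets:  # one action, several reactions
            target.receive(self.last)


def transpose(amount):
    return [pitch + amount for pitch in (60, 64, 67)]


# Pull: changing the parameter has no effect until the lowest box is evaluated.
transposition = Parameter(2)
pull_out = PullBox(transpose, transposition)
pull_out.evaluate()
transposition.value = 5
stale = pull_out.last        # still reflects the old parameter
fresh = pull_out.evaluate()  # recomputed only on request

# Push: a single incoming value immediately updates everything downstream.
source = PushObject(lambda amount: amount)
chord = source.connect(PushObject(transpose))
bass = source.connect(PushObject(lambda amount: 36 + amount))
source.receive(5)
```

In the pull model the changed parameter is invisible until `evaluate()` is called on the lowest box, whereas in the push model one call to `receive` updates both `chord` and `bass`. This is the property the quotation associates with real-time interactivity.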

As another example, OM provides its users with the maquette system, which allows complex forms of meta-control to be imposed on the acyclic graph of functions represented in a patch. The maquette is embedded into OM rather than being something that has to be built by the user from scratch. Bach has no such system in place by default, so a method for meta-control would have to be designed and created by the user. In addition to these differences, there are CAC environments and languages without graphical user interfaces, such as slippery chicken. Instead, they offer text-based interfaces where the expression and abstraction of complex ideas can become simpler and more manageable, at the cost of many of the visually interactive possibilities for creative exploration. Thus, the design of a program is based on a number of assumptions about how people should interact with it, as well as the ways those people might want to represent musical ideas and solve compositional problems. This results in a different set of affordances and, thus, musical possibilities.

Many CAC programs exist, each offering different interfaces and following its own design principles in order to engender a range of approaches to composing with the aid of the computer. Even where they are similar, there may be deep-rooted differences (real-time or non-real-time, text-based or visual) that afford or preclude certain compositional workflows and possibilities. Despite this diversity, much existing CAC software focuses on representing music as symbolic data that is then rendered to a number of formats, sonic or otherwise. Some programs have functionality for working directly with sound itself, such as the more recent additions to the OpenMusic language (see Bresson et al., 2017), but these are mostly tangential to the main features of the language or environment, which focus on data transformation, patterns and algorithmic procedures. Many existing programs also attempt to recreate the mental patterns and workflows entrenched in acoustic composition, namely notation and the concept of a “score”. IRCAM’s Music Representations team, one of the most significant research groups in this area and responsible for much of the existing computer-aided composition software, states that its research “has a particular accent on the concept of ‘ecriture’” (Assayag, 1998, p. 6). This word has no exact English equivalent, but can be expressed as the “union of instrumental score writing and musical thought” (Assayag, 1998, p. 6).

In this thesis I present my artistic practice as a novel and personalised form of computer-aided composition that does not focus on manipulating symbolic data or producing “scores”. Instead, it uses content-aware programs to assist me in composing directly with digital sample-based material. In this practice I employ machine listening and machine learning, and borrow from research in Music Information Retrieval (MIR), to build programs that can analyse the content of digital audio samples. By doing this, the computer undertakes the role of a co-listener in the creative process and becomes an interactive partner that, in its own machine-like way, can audition materials and act as a sounding board for compositional processes orientated around the act of listening. Eduardo Reck Miranda’s view is that this type of relationship has come into the foreground of computer-aided composition more recently, with composers aiming to engage with the computer in an “exploratory fashion” in order to “generate and test musical ideas quickly” (Miranda, 2009, p. 130). He characterises the computer in this relationship between human and machine as “an active creative partner” (p. 130). For me this is entirely true: the computer becomes an expressive tool with which I can computationally encode listening tasks. This enables processes and capabilities such as:

- Organising sounds, forming taxonomies and discerning relationships between sounds in a collection based on their perceptual qualities.

- Aiding in processes that are too difficult to handle with manual and aural faculties, for example, “listening” to thousands of sounds quantitatively.

- Adaptively applying signal processing to digital samples based on their content, such as with the data generated by an audio descriptor. This could also extend toward synthesis approaches that are informed by the data computed through machine-listening.
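As a minimal sketch of what encoding a listening task can look like, the following Python code computes two simple audio descriptors, orders a small corpus by one of them and applies a gain that depends on the other. The descriptor choices (RMS level and zero-crossing rate), the function names and the synthetic test tones are assumptions made for illustration. They are far cruder than the machine listening used in the projects and are not taken from the software submitted with this thesis.

```python
import math


def rms(samples):
    """Root-mean-square level, a rough correlate of loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs that change sign, a rough
    correlate of brightness or noisiness."""
    crossings = sum(1 for a, b in zip(samples, samples[1:])
                    if (a < 0) != (b < 0))
    return crossings / (len(samples) - 1)


def describe(samples):
    return {"rms": rms(samples), "zcr": zero_crossing_rate(samples)}


def rank_by(corpus, descriptor):
    """Organise a corpus (name -> samples) by ascending descriptor value."""
    return sorted(corpus, key=lambda name: describe(corpus[name])[descriptor])


def normalise_level(samples, target_rms=0.1):
    """Content-adaptive processing: the gain depends on the analysed level."""
    level = rms(samples)
    if level == 0:
        return list(samples)
    gain = target_rms / level
    return [s * gain for s in samples]


def sine(freq, amp, sample_rate=8000, length=800):
    """Synthetic stand-in for a recorded sample."""
    return [amp * math.sin(2 * math.pi * freq * i / sample_rate + 0.1)
            for i in range(length)]


corpus = {
    "low_quiet": sine(100, 0.05),
    "mid": sine(500, 0.3),
    "high_loud": sine(1000, 0.8),
}
by_brightness = rank_by(corpus, "zcr")
levelled = {name: normalise_level(s) for name, s in corpus.items()}
```

The same pattern scales from three test tones to thousands of files: the descriptors are computed once per sound, and both the ordering and the processing are then driven by the resulting data rather than by manual auditioning.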

The affordances of content-awareness in composition are discussed in [3. Content-Awareness] alongside other practitioners working in the same area. The incorporation of content-awareness into my compositional workflow is discussed to an extent in [2. Preoccupations], and in greater detail throughout each project.

Iterative Development and Bricolage Programming

An important part of this practice-based research is an iterative development cycle to make custom content-aware programs for composition. Computer musicians utilise a number of technologies, constructing complex composing toolkits and performance systems from an assemblage of software and hardware. These amalgamations might be built from parts that service specific roles or that enhance the functionality of other connected parts. Despite being mostly hardware-based, modular synthesis practice represents this relationship to technology well, in which extending expressive capabilities for composition and live performance is achieved by connecting new devices to one’s setup or by changing the connections between existing parts. The modularity of such an approach is a key feature of the way I work, albeit applied to the development of software that can be iteratively improved and designed to communicate with new custom software.

Prefabricated hardware and software tools offer a set of possibilities and behaviours to their users, and it is not usually possible to modify the underlying structure of the parts themselves. It may also not be straightforward to design new tools that will communicate and be compatible with an existing set of technologies. By developing custom computer programs, as is the case with the practical element of this research, new tools for composition are designed from the ground up and the interoperability of those tools is a controllable aspect. Working at this level affords a depth of customisation to my computer-aided practice that is difficult to reach with prefabricated programs or devices. However, developing my own software is not a structured process in which a known goal is reached, and I frequently find myself having to build different parts of a program ad hoc in the service of immediate creative interests. This makes my creative programming process inseparable from the parts of my compositional workflow that deal directly with collecting, treating and organising sound. Actions that deal with sound materials are supported by content-aware programs and in some cases are entirely reliant on them. Software enables those actions, and those actions can in turn spur on the creation of new software. This is the essential notion of iterated feedback in my compositional practice with content-aware programs.

In addition to this, there is sometimes a lack of existing software that I can use as a reference for design and implementation, or there may be unusual requirements for how I want a program to function that require additional “hacking” to draw various software into a cohesive compositional workflow. For example, ReaCoMa uses a number of workarounds and communication protocols between different pieces of software to bring the experience of working with it as close as possible to the native operation of the REAPER DAW. Another example is FTIS, which makes it possible to use various other software in a single command-line tool through an “adapter” interface. In light of this notion of feedback, and my often working in uncharted development territory, it is important to highlight how development is undertaken in my artistic practice compared to conventional software development, and how this is situated in my overall compositional workflow.

Conventional Software Development

In the model of established software engineering, a systematic approach is employed where “in some form, a specification has been formulated, and is implemented, evaluated and refined through defined phases” (Bergström & Blackwell, 2016, p. 192). Such development practices delineate specification and implementation explicitly, and these phases are usually followed such that development follows planning. Within software development convention, there are a number of methodologies that structure the undertaking of work. For example, “the waterfall model, the spiral model, agile development, etc. [19], each of which details stages of formulating requirements, designing, implementing, testing and maintaining a software system” (Bergström & Lotto, 2015, p. 27).

While rigour and maintainability are important to my creative programming practice, the notion that planning should precede implementation, or that these steps should exist formally at all, is incompatible with my artistic ideals. For me, the purpose of making software can be to explore the problem itself rather than to reach a well-defined solution. Furthermore, programming is a component of my creative and compositional process alongside listening to sound materials and experimenting with sound manipulation manually. As such, the point at which software enters this process is not fixed or necessarily predictable, which diminishes the feasibility of a structured design and implementation process.

Human-Computer Interaction (HCI) research has explored such issues regarding the role of computers, challenging the established HCI triad of “domain object”, “user” and “tool”, in which the user applies the tool to “mold the domain object in foreseeable ways according to a well-defined goal” (Bertelsen et al., 2009, p. 197). This “second-wave” view of HCI emphasises efficiency and the notion that a routine task can become an effortless procedure in which the user is insulated from their own technological biases and pitfalls. The relationship between a user, the tool and the application of that tool reflects the studies that interested HCI researchers in this wave, researching “work settings and interaction within well-established communities of practice” (Bødker, 2015, p. 24). “Third-wave” HCI challenges these preconceptions, shifting the focus of the computer from a tool to a material for expression (Maeda, 2005, p. 101). This perspective positions technology as a “form of empowerment for creative intellectual work” (Bertelsen et al., 2009, p. 197) and acknowledges the wide-ranging personal application of computers outside of employment or necessarily professional contexts. Most importantly, it recognises the malleability and “dynamic relation between the software, the user and object of interest” (Bertelsen et al., 2009, p. 197), a position that is more resonant with artistic practice and certainly with my own. As a material for expression, software never reaches a “complete state” and is embedded in a perpetual process of change void of strict production timelines and releases. Instead, we might view the computer more like an instrument — something that is coupled to artistic practice and that can be slowly modified, refreshed and changed as the shape of the practice changes too.

This approach to software development can be observed in practice, such as in Rodrigo Constanzo’s creative coding. Confetti is an ever-growing toolbox of Max for Live (M4L) sound processing modules demonstrating a range of interests encompassed under one “tool” that can be traced to specific works. Another pertinent example is Pierre Alexandre Tremblay’s series of “sandbox” programs. Each version contains improvements and bug fixes, while also implementing new functionality that enhances and challenges Tremblay’s improvisational practice according to a set of concerns and ideas that occupy him at the time.

My research incorporates computing as an expressive medium rather than as a fixed tool. Software outputs are included in the submission of this thesis and each of these is in a process of constant development and change. These software projects mostly began as collections of impromptu Max patches and Python scripts that, once they proved creatively fruitful, were iterated into fully-fledged programs. FTIS, for example, arose from experimentation with the scikit-learn Python package applied to sound collection analysis. This experimentation prompted the need for a framework in which these ideas could be explored more rapidly, with technical extensibility and the ability to carry a long-lasting approach to coding with that tool into the future. [5. Technical Implementation and Software] outlines these programs.

Bricolage Programming

As outlined above, traditional software development models do not necessarily capture the way that software is used and developed in the context of my creative practice. The concept of bricolage programming, however, is sensitive to the ad hoc and “felt-out” development process of bespoke tools for composition. This concept was first put forward by Turkle and Papert (1990, p. 136), who summarise the bricolage programmer, or bricoleur, as follows:

The bricoleur resembles the painter who stands back between brushstrokes, looks at the canvas, and only after this contemplation, decides what to do next. For planners, mistakes are missteps; for bricoleurs they are the essence of a navigation by mid-course corrections. For planners, a program is an instrument for premeditated control; bricoleurs have goals, but set out to realize them in the spirit of a collaborative venture with the machine. For planners, getting a program to work is like “saying one’s piece”; for bricoleurs it is more like a conversation than a monologue.

In contrast to the traditional methodologies for software development described in [2.1.2.1 Conventional Software Development], bricolage programming embraces error and deviation in the development process, viewing this as a fruitful, or perhaps even necessary, part of developing software. Furthermore, the tangents that are explored as a result of this process are ways of exploring the problems the software seeks to solve in the first place. The flow of my programming practice resonates with this characterisation, and I have found that the challenges of developing software for composition can give rise to emergent musical outcomes or expose pathways toward finishing a work that would not have been taken if a rigid plan were followed. I purposefully avoid holding to such a plan, and try not to anticipate features for software that might be useful in the future. For me, this kind of technological speculation often leads to wasted time and to nascent ideas becoming obscured by a misdirection of effort toward technical issues. Ultimately, developing software has to be situated for me amongst a set of musical, compositional and aesthetic concerns, and speculating that “I could do this if the software did that” is often a dead end. Instead, testing small ideas out and slowly growing a program in tandem with compositional ideas tends to be a richer integration of computing into my compositional practice and generally leads to better artistic results. One criticism of this design philosophy is that there is always a degree of “coping” (Green, 2013, p. 107) with the intermittent state of the program and it can at times be difficult to reorientate between “soundful” practice and technical problem-solving. The investment of time put toward solving different compositional or technical problems was especially relevant to the compositional process in Stitch/Strata. The experience of balancing these two facets largely motivated my approach to composing Annealing Strategies.

Turkle and Papert’s description of the bricoleur emphasises the dialogical interaction between human and machine in bricolage programming. Human interaction with computers is commonly portrayed and understood as a unidirectional and instructional relationship in which explicitly expressed steps are executed by the computer. While computers are reliant on human instruction at some point, bricolage offers a perspective on how the relationship between human and computer can be oriented toward feedback. McLean and Wiggins (2010) have synthesised their own view of bricolage programming in the sphere of the creative arts specifically. Their view is a good model for formalising the iterative development workflow at the heart of my technological approach, which is discussed in the next section, [2.1.2.3 The Feedback Loop].

The Feedback Loop

IMAGE 2.2: Diagram from McLean and Wiggins (2010) depicting the bricolage programming feedback loop.

IMAGE 2.2 is McLean and Wiggins’ formalised process of bricolage programming, as a “creative feedback loop encompassing the written algorithm, its interpretation, and the programmer’s perception and reaction to its output or behaviour” (2010, p. 2). This loop defines four key stages (concept, algorithm, output and percept) interspersed with italicised actions enacted by the human. They describe the start of this feedback loop as having “a half-formed concept, which only reaches internal consistency through the process of being expressed as an algorithm” (McLean & Wiggins, 2010, p. 2). They provide a theoretical example of someone modifying a program to follow an in-the-moment perception of a “tree-like structure” that emerges from first experimenting by superimposing curved lines (McLean & Wiggins, 2010, p. 2).

For me, the concept node always begins from a point of auditioning sound materials. This process occurs manually at first, and is shortly followed by the involvement of the software to aid that listening process or to follow naive hunches about how those sounds might be organised or treated compositionally. By engaging with those materials aurally and in dialogue with content-aware programs, conceptual foundations for a piece are made. For example, after listening to segments of phonetics made by experimental vocal improvisers the concept for Stitch/Strata developed. The broader idea was that those segments could be concatenated and recombined to form new sound objects reminiscent of the source material while presenting a new inhuman quality. This central concept was the starting point from which a process of computational encoding began, followed by observation of those outputs. In IMAGE 2.3, a number of variants of a computer-generated phrase can be seen represented as files in the file system of my computer. Ultimately, this process led to an unanticipated change in the overall shape of the piece after numerous iterations through different technical approaches were explored.

IMAGE 2.3: A screenshot showing computer-generated outputs of concatenated phonetic segments. The letter of each file represents a different concatenation strategy, with multiple variations for each one.

At the output stage, the computer has produced outputs, which invites reflection on them through observation and reaction. McLean and Wiggins posit that “the perception of output from an algorithm itself can be a creative act” (2010, p. 4). In this stage, the digital symbolic representation in the computer is converted to an analogue representation that is perceptual, such as light emitted from a screen or, more pertinently, audio from loudspeakers. Engaging with these outputs is perhaps the single most important mechanism in my practice: it is how compositional ideas are developed and iterated on, or how the original concept is changed to encompass what is found through observation. Audio is the main output I respond to in this regard, and forms an element in a feedback loop between encoding, listening, observation and a return to encoding. In Annealing Strategies the bricolage feedback loop was formed by listening to the output of a generative system and then tweaking how the program worked in order to adjust further outputs. Indeed, each project has its own story that traces this feedback loop, whereby listening to sound materials stimulates the creation of problematised scenarios in which the computer can aid me.

I also engage with outputs from the computer that are not necessarily audibly perceptual. In some cases, the computer offers text-based outputs such as JavaScript Object Notation (JSON) (for example see CODE 2.1) containing analysis and statistical summaries produced from machine listening and machine learning.

    {
      "2543": [
        "0ABB6CC72E4E11EEC26AE218461C4C3905FDF5B2_1.wav",
        "F712577B6BBDCFF9E5D4B9B635DA1284A515B94F_1.wav",
        "EA7B01F5304AEAF46BA8744BE52F5BFEB6CAC019_1.wav",
        "93290986A82C872706E952B13B51000D6E523067_1.wav",
        "9693C7A31477D0753B167287D3132C957F3F6366_1.wav",
        "5358D21DA3D4F3C72FE3E23A3CAB9F46ACAAC346_1.wav",
        "F5E8F0EEB38158DE8236E0827A2A4ECF4824D83A_1.wav",
        "2E7DA5DD5FA50064C8BBA521CD7EB54BC7EBBF37_1.wav"
      ],
      "2342": [
        "PCSAnalytics.db-wal_235.wav",
        "knowledgeC.db_21.wav",
        "PCSAnalytics.db-wal_168.wav",
        "psi.sqlite_9.wav",
        "PCSAnalytics.db-wal_72.wav"
      ],
      "1122": [
        "3870112724rsegmnoittet-es.sqlite_831.wav",
        "cache_24_4_2.wav",
        "3870112724rsegmnoittet-es.sqlite_346.wav"
      ],
      "554": [
        "client.db_54.wav",
        "client.db_35.wav",
        "AddressBook.sqlitedb_0.wav",
        "cache_72_3_36.wav",
        "Default_5.0.ReaperThemeZip_82.wav",
        "Default_4.0.ReaperThemeZip_57.wav"
      ]
    }

I can discover valuable information from such data that then informs further decision-making. For example, in both Reconstruction Error and Interferences, different sets of data were produced by the computer using machine learning and machine listening in order to take a larger collection of samples and segregate individual samples into perceptually homogeneous groups or clusters. I explored this clustering data aurally using small Max patches and computer-generated REAPER sessions, as well as visually by reading the data. This informed my compositional decision-making and the selection of audio samples. Without the aid of the computer, I would not have been able to examine the original corpus in a structured way and on the basis of perceptual similarity between samples.
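Reading cluster data of this kind can itself be supported by a few lines of code. The Python sketch below assumes the JSON maps cluster labels to lists of sample file names, as the listing suggests, and treats the keys as opaque labels. The abbreviated data and the function names are illustrative and are not taken from the project code.

```python
import json

# An abbreviated stand-in for the kind of file shown in the listing.
CLUSTER_JSON = """
{
  "554": ["client.db_54.wav", "client.db_35.wav", "AddressBook.sqlitedb_0.wav"],
  "1122": ["cache_24_4_2.wav"],
  "2342": ["psi.sqlite_9.wav", "knowledgeC.db_21.wav"]
}
"""


def cluster_sizes(clusters):
    """How many samples fall into each cluster."""
    return {label: len(files) for label, files in clusters.items()}


def cluster_of(clusters):
    """Invert the mapping so a sample can be looked up by file name."""
    return {name: label
            for label, files in clusters.items()
            for name in files}


clusters = json.loads(CLUSTER_JSON)
sizes = cluster_sizes(clusters)
lookup = cluster_of(clusters)

# Largest clusters first, for example to decide which groups to audition.
largest_first = sorted(clusters, key=lambda label: len(clusters[label]),
                       reverse=True)
```

Summaries such as `sizes` and `largest_first` give a quick overview of how a corpus has been partitioned before any listening takes place, while `lookup` supports the reverse question of which group a particular sample belongs to.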

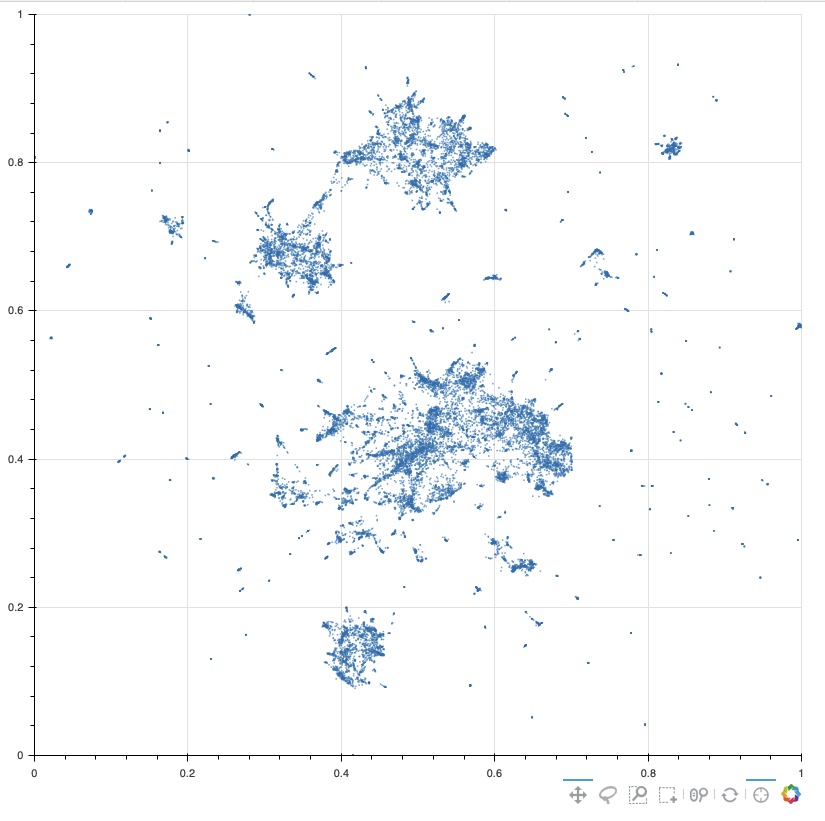

Visualisation of data is also a meaningful output from the computer. A visual representation of a corpus can be useful for viewing it topologically, as a group of items that form a shape consisting of local and global relationships. While this sort of data may be hard to act upon compositionally, it can be part of the overall feedback loop, leading to other forms of encoding and reaction. Visualisation and data-driven approaches were used heavily in both Reconstruction Error and Interferences. IMAGE 2.4 is a scatter plot representing a projection of individual corpus samples into two-dimensional space based on machine listening analysis.

IMAGE 2.4: Samples projected into a two-dimensional space using dimension reduction on mel-frequency cepstral coefficient (MFCC) analysis. This data was used in the Reconstruction Error project.
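The caption names dimension reduction without specifying an algorithm. As a hedged illustration of the general idea, the following Python sketch projects feature vectors onto their first two principal components using power iteration. Principal component analysis is an assumption standing in for whichever technique was actually used, and the feature rows are invented numbers standing in for per-sample MFCC summaries.

```python
import math
import random


def centre(rows):
    """Subtract the per-column mean from every row."""
    n, d = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    return [[row[j] - means[j] for j in range(d)] for row in rows]


def covariance(centred):
    """Sample covariance matrix of already-centred rows."""
    n, d = len(centred), len(centred[0])
    return [[sum(row[i] * row[j] for row in centred) / (n - 1)
             for j in range(d)] for i in range(d)]


def dominant_eigenvector(matrix, iterations=500, seed=0):
    """Power iteration on a symmetric matrix: (eigenvalue, unit vector)."""
    rng = random.Random(seed)
    d = len(matrix)
    v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
    length = math.sqrt(sum(x * x for x in v))
    v = [x / length for x in v]
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    mv = [sum(matrix[i][j] * v[j] for j in range(d)) for i in range(d)]
    return sum(a * b for a, b in zip(v, mv)), v


def project_2d(rows):
    """Project rows onto their first two principal axes."""
    centred = centre(rows)
    cov = covariance(centred)
    d = len(cov)
    value1, axis1 = dominant_eigenvector(cov)
    # Deflation removes the first component so the second can be found.
    deflated = [[cov[i][j] - value1 * axis1[i] * axis1[j] for j in range(d)]
                for i in range(d)]
    _, axis2 = dominant_eigenvector(deflated, seed=1)
    return [(sum(a * b for a, b in zip(row, axis1)),
             sum(a * b for a, b in zip(row, axis2))) for row in centred]


# Invented three-dimensional features; real MFCC summaries would have more.
features = [[float(t), 2.0 * t, 0.1 * (-1) ** t] for t in range(10)]
points = project_2d(features)
```

Each sample becomes an (x, y) pair that can be drawn as a scatter plot. Samples whose feature vectors are similar land close together, which is what makes the local and global shape of a corpus visible.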

Outputs from content-aware computer programs help me to clarify and hone my compositional intent which in turn shapes the music that is made through an iterative composition process. Some of these outputs are inherently musical or sonic at first while others are data-driven, structural, organisational and taxonomical in nature. Observing outputs such as these can prompt new actions from observation, forming a part of a feedback loop in my compositional workflow. The tightly woven nature of the software into the exploration of ideas situates the computer as a highly agentive entity and guides me to solutions for both the technical and creative problems I face. The idea that a concept can only reach “internal consistency” through this workflow is extremely important in understanding the purpose of working in this way. DEMO 2.1 shows a diagram where nodes represent the types of observations that I make and the actions I take as a result. Ultimately, these parts of the creative process can lead back into each other, resulting in a formative cycle for developing compositional ideas.

DEMO 2.1: A network of actions and outputs. Observing different outputs can lead to compositional decision-making or lead back to further computational exploration and new outputs being made.

Aesthetics

This section describes the aesthetic foundations of my practice, drawing attention to the types of sound materials I work with and my approach to their treatment and organisation using content-aware programs. Firstly, the nature of sample-based materials is discussed in the context of my work. Secondly, I outline the quality of the sounds I use and their “digital-ness” as an important aesthetic feature. Thirdly, I discuss the notion of horizontal and vertical taxonomies in sound and how this informs my intuitive and technical treatment of sounds. Lastly, some approaches to temporality and time are discussed.

Sample-Based Compositional Material

My practice is orientated around audio samples as the primary material for composition. For the computer to analyse and collect sounds, audio has to be stored in memory or on disk so that it can be analysed and compared as a corpus of sonic items. In this research, the term digital samples encompasses both audio data stored in memory, such as that gathered on-the-fly, as well as audio files stored on disk. For example, the content-aware system in Annealing Strategies generates and analyses the same audio in real-time. The analysis data affects how that system will run in the future. In Refracted Touch, pre-composed audio samples are selected and triggered in real-time by the performer, while the input signal is also collected into a buffer for content-aware granular synthesis and signal processing. Stitch/Strata, Reconstruction Error and Interferences use collections of audio files on disk and operate on these as compositional units to be divided and concatenated into new sound objects.

The sample-based aspect of my practice is important to acknowledge because it situates my compositional workflow around a process of searching within a space of stored possibilities rather than constructing those possibilities from a set of first principles or a priori goals.

Digital Material

The material I use is both sample-based and also presents a digital aesthetic that is non-referential and abstract. The sounds used in my work do not explicitly or intentionally allude to real-world sounds, such as those from an instrument or the environment. Within this sound world, I gather material using a number of techniques and from several sources, such as synthetic impulses, recorded electromagnetic interference, chaotic synthesis algorithms, segmented voice recordings or “moshed” data files. I have no fixed methods in my practice for finding materials and prefer for each creative project to utilise whatever tools are novel and challenging to me at the time. The binding factor between these sometimes disparate sources and techniques is the types of sounds that I tend to work with, which can be characterised by their harshness, broad spectral profiles and prominent noise-based quality.

In parallel with my interest in discovering and then using noise-based, chaotic and spectrally complex sounds, a driving aesthetic goal is to coerce those sounds into fragile and delicate combinations that foreground intricate and buried morphologies within them. This positions noise-based sounds as a challenging material for me, which through the process of re-framing provokes me artistically. I find the tension between their raw forms and what they can become through treatment and careful curation a powerful factor in my incorporation of them into compositions.

Furthermore, noise appeals to me as a compositional material because the computer can discover structure and inner components within such material that would otherwise be unheard or imperceptible to a human. This is because computers are especially good at engaging in a listening behaviour that is detailed, atomic and predicated on a numerical understanding of sound that is alien to the human listening process. This tension was an aesthetic feature of the final two projects, Reconstruction Error and Interferences, in which noise-based corpora were subjected to a variety of machine listening processes that attempted to create taxonomies and hierarchies of those sounds to inform my intuitive compositional decision making. From this process, I was able to extract a number of refined and highly focused sound materials for composition. In Annealing Strategies, a content-aware program is tasked with discovering how 24 chaotically coupled synthesiser inputs contribute to its sonic output, as measured by a single loudness audio descriptor at the output. Through this process, it discovers a complex and concealed algorithmic connection between the parameters and the perception of the output, and because it performs this process in real-time, the process structures the piece itself.

This proclivity towards the inclusion and treatment of noise-based sounds stems from my own tastes in listening and from my appreciation of artists such as Autechre, Burial, Benjamin Thigpen, Mark Fell and Bernard Parmegiani. Pieces that exemplify a similar exploration of, and interest in, noise include the “rework” of Massive Attack’s Paradise Circus (2011) by Burial, which, much like his whole discography, employs layers of noise and over-reverberated high frequencies while maintaining a sense of fragility in those textures and without overpowering the mix.

VIDEO 2.1: Paradise Circus (2011) – Massive Attack vs Burial

Select moments of Benjamin Thigpen’s work explicate the intricacies of relatively chaotic sounds. The opening passage of Malfunction30931 (2010) from Divide By Zero demonstrates this, in which several chaotic sounds are layered on top of one another to create a static and initially directionless texture. Each layer occupies its own region of the frequency spectrum and within that exhibits its own morphology and dynamic profile, making for a listening experience in which each timbral layer can be segregated as a gestalt, while remaining part of the overall machine-like drone. A similar approach is taken in Interferences. For this project, I tried to create expansive formal structures by superimposing static materials with dense and complementary morphologies. The computer aids me in discovering and selecting samples for these superimpositions.

VIDEO 2.2: Malfunction30931 (2010) – Benjamin Thigpen

De Natura Sonorum: Matières Induites (1974) by Bernard Parmegiani exhibits similar properties to Malfunction30931, in that in both works, divisions of the spectrum are occupied by narrow and controlled streams of noise. Each layer is perceptually separable from the composite texture while remaining cohesive with that higher-level sound object. This highlights the intricacies of those noise elements and the interplay between them. In composing Reconstruction Error, some of these types of behaviours were constructed by performing signal decompositions and using the results to construct “streams” within a larger sound-object.

VIDEO 2.3: De Natura Sonorum: Matières Induites (1974) – Bernard Parmegiani

Microsound

Microsound as a movement influences my work, particularly in the way that I compose with noise-based and digital materials. Specific artists include Kim Cascone, Richard Chartier, Bernhard Günter, Olivier Pasquet, Alva Noto and Ryoji Ikeda. Significant parts of my practice borrow from the stylistic paradigm established by these artists, who encompass several dispersed “post-digital” genres, “from academic computer music to post-industrial noise to experimental ambient and post-techno” (Cascone, n.d.). A significant influence from this is the way that artists within this movement subvert the rigid, structured and reproducible characteristics of digitally manipulated sounds and transform such source materials into organic, evolving and semi-structured textures. Phil Thomson (2004) refers to this as “the new microsound” which is “beatless and focuses on textures often assembled from microsonic elements, again often culled from computer malfunctions or from the creative misuse of technology” (p. 212). Specific artists in this thread of microsound include autopoiesis, Taylor Deupree and Pimmon.

At times my work operates within a similar paradigm and restructures digital noise-based source material into sounds that subvert their inherent qualities of “digital-ness” and rigidity. One way that I achieve this is by concatenating short segments of deconstructed source material — rapidly and regularly enough that it is perceived to be a repetitive structure. However, the temporal closeness of these concatenations teeters on the edge of forming an entirely textural musical surface, creating an ambiguity between their mechanical organisation and their organic nature. By layering and superimposing several instances of such a process I can weave between these two behaviours, where single exposed instances might be perceived as “computerised” and rigid, and multiple melded layers as complex and shifting textures.

This technique is used in —isn, from Reconstruction Error and G090G10564620B7Q from Interferences. Other techniques include first positioning elements on a quantised grid and then applying a randomised offset to the positions of samples to skew them along that time structure. This results in a stable and repetitive time structure that is to some degree detectable by the listener but is concealed and obfuscated by the deviations. Furthermore, digital signal processing techniques help me to re-frame noise-based sounds in organic ways. These include signal decomposition, filtering and compression, which allow me to carve out specific spectral and dynamic properties from composite sounds and recombine them as I see fit, at times with computer assistance and automated machine listening procedures.
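The grid-and-offset technique can be sketched in a few lines of Python. This is a minimal illustration rather than the code used in the projects: the function name `jittered_onsets`, its parameters and the example values are all hypothetical, and a uniform random distribution is assumed for the offsets.

```python
import random

def jittered_onsets(n_events, grid_step, max_offset, seed=None):
    """Return onset times (in seconds) placed on a regular grid,
    each skewed by a random offset of at most max_offset seconds."""
    rng = random.Random(seed)
    onsets = []
    for i in range(n_events):
        grid_time = i * grid_step                      # quantised position
        offset = rng.uniform(-max_offset, max_offset)  # assumed uniform deviation
        onsets.append(max(0.0, grid_time + offset))    # never before time zero
    return onsets

# Hypothetical values: eight events on a 125 ms grid, skewed by up to 20 ms
onsets = jittered_onsets(n_events=8, grid_step=0.125, max_offset=0.02, seed=1)
```

Keeping `max_offset` well below `grid_step` preserves the underlying pulse while blurring it; as the two values approach each other, the grid becomes progressively harder to detect.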

Time

My approach to time and the organisation of material in my practice is a hybrid between my pre-existing aesthetic preferences and the possibilities discovered and presented by content-aware programs in the iterative development cycle. While I often have strong tendencies for the placement of material in a musical context, I often rely on the computer to suggest or offer ways that materials can be grouped or structured. As a result, my approach to time is a synthesis of my intuition and computer-generated outputs. Firstly, I will outline some of my own aesthetic ideas and, secondly, I will consider the ways that technology informs my treatment of form, structure and time.

Form and Hierarchy

The basis for formal thinking in my work is underpinned by a hierarchical approach to different temporal scales. James Tenney’s theory of form describes this hierarchical thinking, conceiving the organisation of musical objects in time as layered components that increase in granularity toward those elements which are indivisible. At the highest level is the “piece”, which is made up of subsequent “sequences” of “clangs”. The “clangs” are themselves made from the next lowest level, the “elements”, which are indivisible in nature and which are the primitive building blocks of the work.

Whether a given sound or sound-configuration is to be considered an element, a clang, or a sequence depends on many variable factors — both objective and subjective. (Tenney, 2014, p. 152)

This model reflects the types of divisions I make formally with sound materials, which has wider implications for the technical treatment of different time scales. Applying this model to my workflow, the elemental components are audio samples, including sound file samples and digital samples output from sound synthesis or stored in computer memory. At the next hierarchical level, Tenney describes the clang as both a perceptual “aural gestalt” and as presenting unique structural changes with respect to both tonality and rhythm. Arranging sounds by their pitch function is rarely a part of my engagement with compositional material and only surfaces as a by-product of a sound’s textural characteristics. However, rhythm is an important feature to me, and can determine the perception of groups of elements. As such, the perceptual characteristics of a clang are a factor of the rhythmic homogeneity of juxtaposed sound elements and the textural similarity between those elements. A clang could also be described as a gesture, which alone would carry little high-level formal information but could be the basis from which a larger musical behaviour or sequence can be established. Collections of gestures into sequences facilitate high-level percepts such as shape and qualities of development, transition and directionality.

An example of this can be seen from 3:31 to 4:04 in “segmenoittet”, a piece that is part of the Reconstruction Error project. This passage can be heard in AUDIO 2.2. Each of the gestures in this passage is composed of elemental short impulses, concatenated into small densely packed groups of material. The distinction between these groups is exposed at the start of this passage, in which they are interspersed sporadically. Some of these gestural groups are longer than each other, and as this musical behaviour continues, increasingly lengthy concatenations interject. They eventually fuse with the shorter gestures and blend into each other, forming an unbroken sequence. This gives rise to a higher-level formal structure, characterised by the convergence of short impulses in distinct groups toward a continual stream of impulses that belong to a unified coherent texture. The piece is further structured around several of these types of sequence events.


Tenney states that determining when a piece emerges from many sequences is not strictly defined and “depends on the piece itself” (Tenney, 2014, p. 156). I discuss how I determine these high-level constraints, and the role the computer plays in defining them, in [2.2.3.3 Perceiving Technology in Form].

The notion of hierarchical form from Tenney has connections to the formal thinking which underpins algorithmic composition practices such as those described in [2.1.1 Computer-Aided Composition] and what I term “unsupervised” approaches in [3.5 Content-Awareness and Augmentative Practice]. Curtis Roads (2012) reflects on this ambition toward the construction of hierarchical form generatively and outlines granular synthesis techniques that can be used to organise grains of sound into “multiscale” musical structures. The emphasis in this “multiscale” approach is to create a sense of coherency between low-level and high-level organisational principles. Roads acknowledges that this is challenging and there is a tendency for such approaches to fail the composer aesthetically.

This is one of the great unsolved problems in algorithmic composition. The issue is not merely a question of scale, i.e., of creating larger sound objects out of grains. It is a question of creating coherent multiscale behaviour extending all the way to the meso and macro time scales. Multiscale behaviour means that long-term high-level forces are as powerful as short-term low-level processes. This is why simplistic bottom-up strategies of ‘emergent self-organization’ tend to fall short. (Roads, 2012, p. 23)

In response to this issue, Roads puts forward the notion of “economy of selection”. This idea supports the composer’s intuitive input to the selection of “perceptually and aesthetically optimal or salient choices from a vast desert of unremarkable possibilities” (Roads, 2012, p. 28). Composers who aim to generate all hierarchical levels of a piece algorithmically may find this approach incompatible with their sensibility to delegate much of the compositional decision making to the computer, but Roads puts forward the counterargument that “even in generative composition, the algorithms are chosen according to subjective preferences” (Roads, 2012, p. 28). Thus the notion of computational agency in computer-aided composition is complex and entangled with our own aesthetic aims and goals. I believe this can be framed around a spectrum of autonomy. At one end are composers who aim to off-load as much as possible, if not all, of the compositional decision making to the computer. At the other end are those composers who sparingly employ the computer and retain a majority of control over the work. This is discussed further in [3.5.1 A Spectrum of Autonomy].

This tension between total control and total off-loading is one that I have experienced with regard to form, having at one point in my practice had the desire for the computer to construct all formal levels of a piece algorithmically. However, the strictness of this approach was incompatible with my aesthetic preferences and my desire to interfere with those processes. There is still an aspect of my approach that is concerned with how an algorithm can proliferate and generate formal constructs, but I have found that designing algorithms that can adequately systematise high-level form is a problematic endeavour for me personally. As a result, I have relied increasingly on infusing my intuition into the compositional process by curating the output of the content-aware programs and having this as the basis upon which formal structures are determined. This specific issue will be discussed next in [2.2.3.2 Issues with High-Level Form].

This development can be traced chronologically throughout the projects, in which failed experiments to generate multiscale form algorithmically in Stitch/Strata motivated me to engage in a more balanced approach to incorporating my intuition into selecting and curating computer outputs. This also led to a paradigmatic shift in technology and compositional materials for the next work Annealing Strategies, in order to address this frustrating experience.

Issues with High-Level Form

Conceiving form hierarchically is a product of my tendency to problematise compositional concerns with the computer (by asking the question “how might the computer form a sequence of these elements according to a procedure?”) and as a requisite model for conceiving of time technically with content-aware programs. For example, I would find it useless to use an arbitrary pattern to determine from the “top-down” the ordering and length of different high-level formal sections first. I prefer to work from the elemental units initially. Thus, I cannot organise clangs without first developing a system for selecting and organising the elemental components of those units. This gives rise to a “bottom-up” process that begins by structuring audio samples and materials with the aid of the computer, without conceiving in detail the high-level structures that will govern them. I achieve this using several techniques and approaches, some examples of which include clustering to draw together certain “classes” of sounds from within a collection, or descriptor-driven concatenative synthesis to arrange materials by their perception in time. While engaging with sound materials first does not exclude one from approaching formal concerns from the “top-down”, the notion of working from an arbitrary structure downwards makes no sense to me and how I engage with sound materials.

In the early stage of my compositional workflow, the role of the computer is significant and is situated in a rapid feedback loop between the production of low-level structures and my observation of these outputs. From this process, the notion of higher-level formal structures, such as sequences and the entire piece, is “suggested” to me and the computer’s role in structuring materials gradually diminishes. The feedback loop is then no longer as rapid and the computer might be used sparingly from this point onward to regenerate or modify something that was created through complex technical means. At this point, I would usually move into a software environment such as REAPER or Max, where collections of generated material can be perused at will, and experiment with their organisation manually and programmatically.

There are several reasons why at this point the computer becomes less responsible for the organisation of high-level form. For me, encoding high-level structures is more difficult than encoding low-level structures due to the complexity of systematising the features of high-level form that I find valuable and aesthetically pleasing. While micro- and meso-scale structures are naturally shorter and can be dealt with almost in isolation without considering their long term consequence musically, high-level structures that are composed of many low-level structures are relational in both memory and perception of listening. As such, the kind of creative work involved in making an algorithm or system to govern low-level structure is not transferable to a high-level structure and pursuing that often leads to an infinite regress of attempting to model complex decisions that govern my high-level formal thinking. Spending time in this is often tedious and unfruitful compositionally, and so for me the computer has a more effective role in suggesting high-level formal thinking, rather than specifically generating that material for me. What I am referring to is the way that a content-aware program can structure listening and engagement with sonic materials at the micro-level which subsequently informs how those materials and groups of materials are then structured at a high level intuitively.

As such, formal thinking in composition for me begins with a rapid and tight feedback loop between observation, encoding and the iteration of the original concept. As material is generated from this process the computer’s role becomes less of a cognitive resource and I rely more on intuition and less on direct use of the computer. I will discuss specific moments where I became aware of the notion of structured listening and how it influenced my practice, particularly in Reconstruction Error and Interferences.

Perceiving Technology In Form

Another formal consideration I make is the extent to which the computer’s decision-making processes are perceptible in the surface and the structure of the music. A pertinent example to draw from is glitch and noise music that overtly foregrounds the characteristics of computers and technology in both the material of the work and the structure of those materials in time. For example, Yasunao Tone’s Solo for Wounded Part II (1996) foregrounds the behaviours of the erroneous CD playback and the clicking of the physical mechanisms that generate glitch artefacts. These are defining features of the work and the rhythmic cuts between different digital materials are significant in establishing micro- and meso-scale formal structures.

Formal thinking in my practice is guided by similar efforts to embrace the underlying technicity which produces the music. For example, in several pieces in Reconstruction Error, localised rhythmic structures are based on the repetitive concatenation of whole sound files without any silence between them. Thus, the lengths of these files determine the density of the repetitions made by this procedure. Organising the samples in this way results in a digital sound world in which the artificial nature of the sound’s organisation is unmitigated. Furthermore, the technicity of this approach is evident in the arrangement of the material, forming a connection between what is heard and the mechanisms of its making. As the composer, I am privy to the relationship between these two things. Whether or not this relationship is perceptible from the perspective of a listener other than myself, is something that I consider when making compositional choices or when I reflect on the role of the computer and its contribution to musical form.
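The relationship between file length and rhythmic density can be made concrete with a short Python sketch. It is illustrative only: the helper names `back_to_back_onsets` and `density` and the durations below are hypothetical and do not come from the projects.

```python
from itertools import accumulate

def back_to_back_onsets(durations, repeats):
    """Onset times (seconds) when whole files are repeated end to end
    with no silence between them."""
    sequence = list(durations) * repeats
    # Each onset is the sum of the durations of all preceding files
    return [0.0] + list(accumulate(sequence))[:-1]

def density(durations):
    """Average number of events per second for one pass through the files."""
    return len(durations) / sum(durations)

# Hypothetical file durations in seconds
short_files = [0.05, 0.04, 0.06]
long_files = [0.50, 0.40, 0.60]

short_onsets = back_to_back_onsets(short_files, repeats=4)
long_onsets = back_to_back_onsets(long_files, repeats=4)
```

With no inserted silence, the only thing that sets the rate of repetition is the material itself: the short files yield a far denser stream of onsets than the long ones.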

Gérard Grisey (1987) describes the perception of processes as a tension between their operational and perceptual value. To distinguish between these two ideas, he uses Karlheinz Stockhausen’s Gruppen (1963) and György Ligeti’s Lontano (1969) as examples where intensely designed and algorithmically derived musical structures are not necessarily perceivable. His main thrust is that processes and systems for formalising time might have little or no perceptual value, despite requiring rigorous work and planning to produce. To illustrate his point he describes a theoretical listener who can perceive retrogradable and non-retrogradable rhythms. This listener is characterised in mocking terms to make his point, that the sophistication of a process does not necessarily increase its perceptual value for a listener.

Again, such a distinction, whatever its operational value, has no perceptible value. It shows the level of contempt for or misunderstanding of perception our elders had attained. What a utopia this spatial and static version of time was, a veritable straight line at the center of which the listener sits implicitly, possessing not only a memory but also a prescience that allows him to apprehend the symmetrical axis the moment it appears! Unless, perhaps, our superman were gifted with a memory that enabled him to reconstruct the entirety of the durations so that he could, a posteriori, classify them as symmetrical or not!… (Grisey, 1987, p. 242)

In my compositions, micro- and meso-level structures are often derived through systematic means, in other words, through procedures with high operational value. By working with content-aware programs, such systems are built on data produced with machine-listening techniques and by audio descriptors. Working like this helps to create a connection between operation and perception almost implicitly. The purpose of tying these two together is not about improving an external listener’s experience of the work or having them derive value through analytical listening; rather, it creates a frame around the dialogue between me and the computer, situating both systematic and intuitive decision making in the perceptual qualities of the material I work with.

For example, the first gesture (see AUDIO 1) of Stitch/Strata is made by concatenating samples based on analysis of their loudness and spectral centroid with audio descriptors. At the start and end of this first gesture, samples with low centroid and loudness are selected, and in the middle samples with high centroid and loudness are chosen. By interpolating between these three points over time, a parabolic gesture that rises and then falls in volume and brightness is produced. A listener might be completely unaware of the technical procedure that created this process but one can still grasp the shape of the musical gesture in terms of how its perceptual qualities change over time. In this case, the computer has helped me to construct this gesture without me having to arrange those samples manually. Instead, I can communicate the perceptual shape of the gesture numerically and the computer resolves those details automatically. This allows me to produce that desired musical effect more successfully and in dialogue with the computer. Whether or not the listener can derive the technical origins through listening alone is irrelevant to me.
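A descriptor-driven selection of this kind can be sketched as follows. This is a simplified illustration and not the program used for Stitch/Strata. It assumes that each sample already has loudness and spectral centroid values normalised to the range 0 to 1, that the path between the three points is piecewise linear, and that the sample nearest to each target point (by Euclidean distance) is chosen. The corpus, the function names and all values are hypothetical.

```python
import math

def interpolate_target(start, peak, end, n_steps):
    """Piecewise-linear path start -> peak -> end in
    (loudness, centroid) space. Requires n_steps >= 2."""
    path = []
    for i in range(n_steps):
        t = i / (n_steps - 1)              # position in the gesture, 0..1
        if t <= 0.5:
            a, b, u = start, peak, t / 0.5
        else:
            a, b, u = peak, end, (t - 0.5) / 0.5
        path.append(tuple(x + (y - x) * u for x, y in zip(a, b)))
    return path

def nearest_sample(corpus, target):
    """Pick the sample whose descriptors lie closest to the target point."""
    return min(
        corpus,
        key=lambda s: math.dist((s["loudness"], s["centroid"]), target),
    )

# Hypothetical corpus: a regular grid of normalised descriptor values
corpus = [
    {"name": f"sample_{i}_{j}.wav", "loudness": i / 9, "centroid": j / 9}
    for i in range(10)
    for j in range(10)
]

# Quiet and dull at the edges, loud and bright in the middle
path = interpolate_target((0.1, 0.1), (0.9, 0.9), (0.1, 0.1), n_steps=9)
gesture = [nearest_sample(corpus, point) for point in path]
```

The list `gesture` holds the samples in playback order. Only the three anchor points are specified by hand; the choice of individual samples at each step is resolved by the search.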

Another example can be found in the material selection process for —isn. in Reconstruction Error. The samples used in this work are a small subset from a larger corpus. The computer derives this subset automatically using clustering, a process that groups together data from a set based on their similarity. Clustering in this case is calculated on mel-frequency cepstral coefficients (MFCCs), with the intention that items with similar spectra will be grouped into individual clusters. As such, this initial subset derived from the overall corpus is a relatively homogenous group of impulse-based samples with some variation between the items. This group of samples is clustered further to derive clusters containing fewer items that are increasingly similar and homogenous as a collection.
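The idea of clustering a group and then clustering the results again can be sketched in Python. The text above does not name the clustering algorithm, so this sketch assumes k-means with two clusters per split purely for illustration. The MFCC vectors are random placeholders standing in for real analysis data, and the names `kmeans`, `refine` and `min_size` are hypothetical.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means. Returns k lists of indices into points."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for idx, p in enumerate(points):
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(idx)
        for c, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster empties
                columns = zip(*(points[i] for i in members))
                centroids[c] = tuple(sum(col) / len(members) for col in columns)
    return clusters

def refine(features, names, k, min_size):
    """Split a group repeatedly until each cluster holds at most
    min_size items, returning the final clusters as lists of names."""
    if len(names) <= min_size or len(names) < k:
        return [names]
    leaves = []
    for members in kmeans(features, k):
        if not members:
            continue
        sub_names = [names[i] for i in members]
        if len(members) == len(names):  # no split happened, so stop here
            leaves.append(sub_names)
        else:
            sub_features = [features[i] for i in members]
            leaves.extend(refine(sub_features, sub_names, k, min_size))
    return leaves

# Placeholder data: 40 file names with random 13-dimensional "MFCC" vectors
rng = random.Random(42)
names = [f"impulse_{i:03d}.wav" for i in range(40)]
features = [tuple(rng.gauss(0.0, 1.0) for _ in range(13)) for _ in names]

leaves = refine(features, names, k=2, min_size=5)
```

Each entry in `leaves` is a small group of file names whose feature vectors lie close together, which corresponds to the increasingly homogenous sub-clusters described above.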

Each of the samples within the smallest clusters is concatenated together to form a sequence of rapid impulses. While each impulse alone only presents subtle differences, concatenating them into streams magnifies their inherent quality and builds a new perceptual unit of sound. When those groups of impulses are superimposed, this creates multiple audio streams or gestalts that feature phasing and interference between them. By additively introducing these streams, a high-level parabolic formal structure was created and the psychoacoustic repercussions of this process emerged as a structural feature of the piece. This was not designed beforehand; rather, it arose as a consequence of technical experimentation with clustering techniques.

Further to this, the limitations of operational procedures can affect the boundaries of high-level structures. The end of —isn. was determined when the meta-class materials were exhausted and all of them had been superimposed. Thus, the piece represents an exploration of a technologically constrained set of possibilities that, once explored, have no further appeal to me. There was the possibility of me finding more material to extend the duration of the piece and to compose new sections of music; however, working like this is essentially unbounded. I want there to be limitations enforced by the computer, which gives me a set of constraints to work against. The notion that the computer somehow constrains how I compose is one that I only came to realise through this PhD research, and it is documented in the development of the projects. As such, the limit of operational and technical aspects imposes formal limits on the music.

The perception of technology is an important principle in how I compose, but not necessarily in how the works themselves should or can be perceived by a listener. There are instances where I have used a systematic approach to organising materials and the perception is closely tied to the nature of the system itself. At other times these two things are not perceivable or are perhaps too complex to derive from listening alone, and the underlying technical control is impossible to discern without observing the code or program directly. Ultimately, using the computer is about being able to discover formal relationships (as was the case with —isn) or to articulate certain structures in terms of their perception and to have the computer render those results automatically. This positions the computer as a creative interface to those ideas and allows me to take them from a nascent state to a more developed and sophisticated level.