Content-Awareness in My Sample-Based Practice

This section provides an overview of content-awareness in relation to my compositional practice. To preface this, I outline conceptual and pragmatic areas of machine listening and machine learning, two subject areas that are fundamental to the design of my own content-aware programs. I then provide a technology review by discussing other practices, software and projects in which machine listening and content-awareness are pivotal. I conclude this section by situating technology within my practice as “augmentative”, in comparison to “semi-supervised” and “unsupervised” approaches.

Machine Listening

Machine listening is a field of study that uses signal processing, machine learning and audio descriptors to enable computers to “listen” to sound. The model of listening constructed from these technologies may endeavour to replicate the way that humans interpret sounds, or it may be a computational system that bears little resemblance to human listening and produces results quite different from the way a human understands sound.

Machine listening plays an important role in a variety of applications, such as automatic sound recognition (answering the question: what sound is that?), the creation of a condensed summary of a sound (also known as “fingerprinting”), and the automatic organisation and classification of sounds. A comprehensive overview of machine-listening applications can be found in Wang (2010). In my practice, I leverage machine listening to automatically derive collections of sounds from corpora of sample-based materials, to perform descriptor-driven concatenative synthesis and to build software that can respond in real time to audio input. These capabilities aid me in composition by enhancing manual processes such as sample selection and arrangement. This approach was pivotal in Stitch/Strata, Reconstruction Error and Interferences. I also harness machine listening to shape generative processes in which materials are constructed through synthesis, such as in Annealing Strategies. Finally, I use machine listening in real-time systems where the interaction between an instrumentalist and a listening machine’s behaviour structures the music, for example, in Refracted Touch.

Machine Listening and Compositional Thinking

Unlike human listening, machine listening is not embodied and, unless specifically designed otherwise, has no implicit life experience, memory or framework for interpreting sound. Humans, however, are adept at understanding the context of a heard sound by relating it to their lived experience and embodied knowledge of how different sounds relate to one another. A human listening to a previously unheard sound might be able to quickly discern its source, relate it to another remembered sound, or imagine several uses for it within a composition. Although machines do not listen in this relational and context-driven manner unless programmed to do so, they can “listen” rapidly and produce a numerical response to sound. They are also more capable than humans of listening to large collections of sounds as a whole, and can “hold in memory” representations of many different sounds simultaneously.

While machine listening is often used to humanise machines (for example, speech recognition enables humans to communicate with them more naturally), its harsh, digital character can also be exploited to reach an understanding of sounds beyond what a human would normally arrive at. My practice embraces this quality as an enhancement to my own listening. To illustrate this, I often use automatic audio segmentation to divide collections of sounds into smaller units that I can then compose with. This process rarely produces results that align exactly with how I would perform the task manually. For example, the starts and ends of the automatically produced segments may not agree with my perception of salient changes in a sound. The segments may also be too granular or not granular enough, and may not capture changes at an appropriate time scale. To ameliorate this, I engage in a trial-and-error process with segmentation algorithms: adjusting their parameters, observing the effect of these changes and deciding whether the result is satisfactory. After some deliberation, the outcome of the automatic segmentation may be similar to that produced by my own manual segmentation, or it may diverge in a way that is unintended but still desirable. Furthermore, the nature of the computer’s segmentation can significantly influence how I incorporate those materials at a later stage of a work, or how they are processed or operated on in the future. I discuss these types of concerns and how I work through them in [4.4.2.2 Segmentation] and [4.5.2.2.1 Segmentation].

This process of arbitration between my preconceived aims and what emerges from machine listening opens up the compositional process to the inflexion of the computer. I encounter this type of negotiation with machine listening throughout my practice; the computer mediates my engagement with sound materials, shaping how I become familiar with and explore them. From this, I can begin to develop schemes for those samples’ use in composition. Furthermore, this approach allows me to test compositional hypotheses, conceived abstractly as machine-listening tasks. The results of these tasks can inform future modifications to the underlying machine-listening implementations, or can prompt reflection on the sound materials that I was concerned with in the first place. Thus, through this methodology, the technology interacts with my compositional thinking.

Key Technologies of Machine Listening

There are three key areas of machine listening technology that I work with in my practice. While each of these components has a defined role, their design and implementation are entangled, and in many cases they perform calculations on, and make use of, one another’s results. This section describes these three technologies: Audio Descriptors, Audio Segmentation and Signal Decomposition.

Audio Descriptors

Audio descriptors are values or labels that represent characteristics (or features) of a sound. They can be computed automatically with machine listening or assigned manually by a human. Audio descriptors can describe a perceptual quality of a sound, such as its “loudness” or “brightness”, or may represent high-level semantic information, such as its genre or the date on which a sound file was created. Audio descriptors are used both in technical applications and by creative coders in musical practice. Artists who use audio descriptors include Andrea Valle, Alex Harker, Alice Eldridge, Owen Green and Diemo Schwarz.

In my practice, I use audio descriptors to describe and differentiate digital samples based on timbral, textural or morphological characteristics. Many descriptors are available, and they are documented in Herrera et al. (1999), Malt and Jourdan (2008) and Peeters (2004). However, I only use a small subset of the descriptors described in this literature. Curating and selecting descriptors is part of my compositional process, and by selecting certain descriptors I guide the computer to listen in a way that is sympathetic to my aesthetic preferences. Descriptors that I often find useful include spectral moments (centroid, spread, skewness, kurtosis), other statistics calculated from spectra (rolloff, flatness, crest), mel-frequency cepstral coefficients (MFCCs), and statistical summaries of this data (mean, standard deviation, median, percentiles).
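To make the spectral descriptors listed above more concrete, the following Python sketch computes them for a single magnitude-spectrum frame and then summarises descriptor values across frames. It is a minimal illustration under stated assumptions, not the implementation used by any of the libraries discussed in this section: the moments are weighted by power (some implementations weight by magnitude instead), the 95% rolloff point is an arbitrary choice, and MFCCs are left out for brevity. The function names `spectral_descriptors` and `summarise` are hypothetical.

```python
import numpy as np

EPS = 1e-12  # guards against division by zero and log(0)


def spectral_descriptors(mag, freqs, rolloff_pct=0.95):
    """Descriptors for one magnitude-spectrum frame.

    mag: non-negative magnitudes; freqs: bin frequencies in Hz (same length).
    Moments are weighted by power here (an assumption; libraries differ).
    """
    power = mag ** 2
    p = power / (power.sum() + EPS)  # treat the spectrum as a distribution
    centroid = np.sum(freqs * p)
    spread = np.sqrt(np.sum((freqs - centroid) ** 2 * p))
    skewness = np.sum((freqs - centroid) ** 3 * p) / (spread ** 3 + EPS)
    kurtosis = np.sum((freqs - centroid) ** 4 * p) / (spread ** 4 + EPS)
    cumulative = np.cumsum(power)
    # Rolloff: lowest bin frequency below which rolloff_pct of the energy lies
    rolloff = freqs[np.searchsorted(cumulative, rolloff_pct * cumulative[-1])]
    # Flatness: geometric mean / arithmetic mean (near 0 = tonal, near 1 = noisy)
    flatness = np.exp(np.mean(np.log(mag + EPS))) / (np.mean(mag) + EPS)
    # Crest: peak / mean (high for spectra dominated by a few peaks)
    crest = np.max(mag) / (np.mean(mag) + EPS)
    return {"centroid": centroid, "spread": spread, "skewness": skewness,
            "kurtosis": kurtosis, "rolloff": rolloff, "flatness": flatness,
            "crest": crest}


def summarise(frames):
    """Statistics over time; rows are frames, columns are descriptors."""
    frames = np.asarray(frames, dtype=float)
    return {"mean": frames.mean(axis=0), "std": frames.std(axis=0),
            "median": np.median(frames, axis=0),
            "p10": np.percentile(frames, 10, axis=0),
            "p90": np.percentile(frames, 90, axis=0)}


# Usage: a windowed 1 kHz sine versus windowed white noise
sr, n = 44100, 2048
window = np.hanning(n)
freqs = np.fft.rfftfreq(n, 1 / sr)
sine = np.sin(2 * np.pi * 1000 * np.arange(n) / sr) * window
noise = np.random.default_rng(0).standard_normal(n) * window
d_sine = spectral_descriptors(np.abs(np.fft.rfft(sine)), freqs)
d_noise = spectral_descriptors(np.abs(np.fft.rfft(noise)), freqs)
stats = summarise([[d_sine["centroid"], d_sine["flatness"]],
                   [d_noise["centroid"], d_noise["flatness"]]])
```

Comparing the two test signals shows why such values help to differentiate samples: the sine’s centroid sits near 1 kHz and its flatness is close to zero, whereas the noise is far flatter, has a lower crest and has a much higher centroid.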

As part of my understanding and application of audio descriptors, I consider them in three categories: low-, mid- and high-level. These categories reflect an increasing level of abstraction from the sound that is analysed. This classification is borrowed in part from Ghisi (2017, p. 26), who remarks that such a subdivision is not necessarily agreed upon. As such, I propose it as a strategy for understanding the different levels that audio descriptors occupy in my practice and what this means for their creative affordances, rather than as an objective taxonomy. The most economical way to outline this system is through a real-world example: an onset detection algorithm designed to extract onsets from the amplitude of a signal. The detector’s sensitivity is controlled by a single threshold, specified in decibels; when the signal exceeds this threshold, an onset is triggered. For this onset detection to work, several stages of analysis and audio descriptors form a “processing pipeline” in which data is transformed and passed between stages.

Low-level

Firstly, the digital representation of the source signal is analysed sample-by-sample to derive its amplitude, by rectifying the signal and converting the normalised values from linear amplitude to decibels. Calculating these amplitudes from the raw samples is a low-level descriptor, and other processes that operate frame-by-frame or sample-by-sample fall under this category. Low-level descriptors often match the resolution of the analysed sound: in this onset-detection algorithm, a new data point is produced for every sample. As such, low-level descriptors can be useful for creative applications in which the derived values are used immediately. For example, these amplitude values could be mapped to a parameter of a sound-producing module, such as a distortion or a compressor.
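The low-level stage of this pipeline can be sketched in a few lines of Python. The sketch assumes samples normalised to [-1, 1] and clamps silence to a hypothetical floor of -120 dB so that the logarithm stays finite; `db_to_drive` is an invented mapping that stands in for the creative use described above.

```python
import numpy as np


def amplitude_db(signal, floor_db=-120.0):
    """Low-level descriptor: one amplitude value in dBFS per input sample.

    Assumes samples normalised to [-1, 1]: full-wave rectification (absolute
    value) followed by a linear-to-decibel conversion.
    """
    rectified = np.abs(np.asarray(signal, dtype=float))
    floor = 10.0 ** (floor_db / 20.0)  # clamp to avoid log10(0) = -inf
    return 20.0 * np.log10(np.maximum(rectified, floor))


def db_to_drive(db, lo_db=-60.0, hi_db=0.0):
    """Hypothetical mapping of amplitude to a 0..1 effect parameter (e.g. distortion drive)."""
    return np.clip((db - lo_db) / (hi_db - lo_db), 0.0, 1.0)


signal = np.array([0.0, 0.5, -1.0, 0.1])
amp = amplitude_db(signal)  # same length as the input: one value per sample
drive = db_to_drive(amp)
```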

Mid-level

Secondly, after deriving the amplitude values, a threshold is applied to determine the points in time at which it is exceeded. The amount by which the threshold is exceeded could also be recorded to indicate the intensity of an onset. This new set of data is more abstract than the original source sound and tends to be a summary of a feature of that signal. In this case, the descriptor indicates when a certain level of change has occurred, which is not an inherent characteristic of the signal itself. In this onset-detection example, the onsets could be used in real time to trigger the playback of other sounds or to activate pre-composed events. Furthermore, one might use these onsets to segment a signal offline, on the assumption that meaningful separations of the source material are to be found in differences in amplitude.
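A minimal sketch of the mid-level stage follows, assuming per-sample decibel values such as those produced in the previous stage. The threshold of -20 dB is an arbitrary example value, and practical detectors usually add smoothing, hysteresis or a minimum time between onsets, all of which are omitted here. The hypothetical `onsets_to_segments` illustrates the offline use: each onset opens a segment that runs to the next onset, and material before the first onset is discarded.

```python
import numpy as np


def threshold_onsets(amp_db, threshold_db=-20.0):
    """Mid-level descriptor: onsets where amplitude rises above a dB threshold.

    Returns (onset indices, intensities); intensity is how far the peak of
    each above-threshold region exceeds the threshold.
    """
    amp_db = np.asarray(amp_db, dtype=float)
    onsets, intensities = [], []
    i, n = 0, len(amp_db)
    while i < n:
        rising = amp_db[i] > threshold_db and (i == 0 or amp_db[i - 1] <= threshold_db)
        if rising:
            j = i
            while j < n and amp_db[j] > threshold_db:  # extent of the region above threshold
                j += 1
            onsets.append(i)
            intensities.append(float(amp_db[i:j].max() - threshold_db))
            i = j
        else:
            i += 1
    return onsets, intensities


def onsets_to_segments(onsets, n_samples):
    """Offline use: each onset starts a segment that runs to the next onset."""
    ends = list(onsets[1:]) + [n_samples]
    return list(zip(onsets, ends))


amp_db = np.array([-40.0, -10.0, -5.0, -30.0, -30.0, -15.0, -40.0])
onsets, intensities = threshold_onsets(amp_db, threshold_db=-20.0)
segments = onsets_to_segments(onsets, len(amp_db))
```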

High-level

Lastly, with a collection of onset times and intensities, the relationships between them could be analysed. Doing so might yield additional semantic information about the signal or provide a single summary of the entire source. As a hypothetical example, analysis might reveal a pattern in the onset times that could be used to describe how perceptually rhythmic the source sound is. The concept of a sound being more or less rhythmic might be hard to formalise computationally; however, comparable high-level descriptors, such as danceability, do exist. Such a hypothetical “rhythmicity” descriptor departs from analysing the inner structural elements of the signal and moves toward a kind of musical listening.
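The hypothetical “rhythmicity” descriptor could be prototyped in many ways. One simple, assumed formulation, shown below, scores the regularity of the inter-onset intervals as one minus their coefficient of variation, clipped to [0, 1]. This is an illustrative invention rather than an established descriptor, and it captures only evenness, not metre or accent; note that it reduces the whole source to a single number.

```python
import numpy as np


def rhythmicity(onset_times):
    """Hypothetical high-level descriptor: regularity of inter-onset intervals (IOIs).

    Returns 1.0 for perfectly even IOIs, falling towards 0 as the IOIs'
    coefficient of variation (std / mean) approaches or exceeds 1.
    """
    times = np.sort(np.asarray(onset_times, dtype=float))
    if len(times) < 3:
        return 0.0  # fewer than two intervals: regularity is undefined
    iois = np.diff(times)
    cv = iois.std() / (iois.mean() + 1e-12)
    return float(np.clip(1.0 - cv, 0.0, 1.0))


regular = rhythmicity([0.0, 0.5, 1.0, 1.5, 2.0])    # evenly spaced onsets
irregular = rhythmicity([0.0, 0.1, 1.0, 1.05, 2.0])  # clustered, uneven onsets
```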

As my practice has developed over this research project, I have increasingly relied on high-level descriptors, whereas earlier in my practice low-level descriptors were my primary tool for exploring machine listening. This trajectory was not intentional or predetermined; rather, it came about from developing my creative coding skills and experimenting with increasingly complex technologies in the service of changing questions and interests. This is an important aspect of my practice that will be traced more thoroughly in each project section.

Issues with Audio Descriptors

Given that high-level descriptors often use data produced by low- or mid-level descriptors, a number of issues can arise if these dependent descriptors are poorly selected or managed. Errors or biases introduced at an early stage propagate to every later stage, which has consequences when compositional choices are made from this information or when the computer works further with that data. As such, the curation, implementation and application of descriptors is an entangled process that requires reflection within the context of their application. I consider the application of audio descriptors in my practice to be as important as orchestration is to a composer of acoustic music. Grappling with these issues became increasingly prominent in the final projects of this research, and my attempt to make them more manageable can be seen in the development of FTIS.

Implementations

Many software tools can calculate audio descriptors, especially for creative coding and composition. For Max, there is Alex Harker’s descriptors~ object, the Zsa.descriptors library, the MuBu/PiPo/ircamdescriptor~ ecosystem and several objects from the Fluid Decomposition Toolbox (see Tremblay et al., 2019). For text-based languages such as Python and C++, packages and projects include Essentia, libxtract, Surfboard and aubio. It is worth noting that even different implementations of the same base algorithm disagree in their design, and there are “flavours” of algorithms that better suit the biases and preferences of their creators. For example, a discussion on the FluCoMa Discourse forum highlights how seemingly subtle engineering choices, such as liftering MFCCs, can drastically alter the results of an algorithm that attempts to match two samples by proximity in Euclidean space. VIDEO 3.1 shows Rodrigo Constanzo demonstrating such differences in a Max patch.

VIDEO 3.1: FluCoMa vs Orchidea MFCC matching comparison.
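To illustrate how a choice such as liftering can change a nearest-neighbour match, the sketch below compares Euclidean distances with and without a sinusoidal lifter of the form 1 + (L/2) sin(pi n / L), the form used in HTK, with L = 22 assumed. The coefficient vectors are made up so that the effect is obvious; this is not a reconstruction of the FluCoMa or Orchidea implementations shown in VIDEO 3.1.

```python
import numpy as np


def sinusoidal_lifter(n_coeffs, L=22):
    """Sinusoidal cepstral lifter weights: 1 + (L / 2) * sin(pi * n / L)."""
    n = np.arange(n_coeffs)
    return 1.0 + (L / 2.0) * np.sin(np.pi * n / L)


def nearest(target, candidates, weights=None):
    """Index of the candidate closest to target in (optionally liftered) Euclidean space."""
    diffs = np.asarray(candidates, dtype=float) - np.asarray(target, dtype=float)
    if weights is not None:
        diffs = diffs * weights  # liftering both vectors == weighting their difference
    return int(np.argmin(np.linalg.norm(diffs, axis=1)))


# Made-up 13-coefficient vectors: candidate 0 differs from the target in a low
# coefficient; candidate 1 differs by less, but in a higher coefficient.
target = np.zeros(13)
candidates = np.zeros((2, 13))
candidates[0, 0] = 2.0
candidates[1, 11] = 1.0

plain = nearest(target, candidates)                            # 1: distances 2.0 vs 1.0
liftered = nearest(target, candidates, sinusoidal_lifter(13))  # 0: distances 2.0 vs 12.0
```

Because the lifter boosts higher coefficients, a small difference there can outweigh a larger difference in a low coefficient, so the “closest” sample flips.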

Audio Segmentation

According to Ajmera, McCowan and Bourlard (2004), audio segmentation is “the task of segmenting a continuous audio stream in terms of acoustically homogenous regions, where the rule of homogeneity depends on the task”. Homogeneity is a broad term: it can refer to a perceptual grouping, but this is not necessarily the case. Segmentation can be performed manually or automatically. In manual segmentation, divisions are created by a human based on their intuition or listening. Automatic segmentation is performed by a computer analysing the structure of a digital signal, which allows the process to be applied to collections of samples programmatically.
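Because “the rule of homogeneity depends on the task”, an automatic segmenter can be thought of as a descriptor plus a rule. The sketch below assumes per-frame spectral-centroid values have already been computed and applies a hypothetical rule: start a new segment whenever a frame deviates from the running mean of the current segment by more than a fixed amount. Practical segmentation algorithms typically use more robust measures of change; this only illustrates the principle.

```python
import numpy as np


def segment_by_homogeneity(values, max_deviation):
    """Start a new segment when a frame departs from the running mean of the
    current segment by more than max_deviation (a hypothetical homogeneity rule).

    Returns (start_frame, end_frame) pairs with exclusive ends.
    """
    values = np.asarray(values, dtype=float)
    boundaries = [0]
    seg_sum, seg_len = values[0], 1
    for i in range(1, len(values)):
        if abs(values[i] - seg_sum / seg_len) > max_deviation:
            boundaries.append(i)  # inhomogeneous: open a new segment
            seg_sum, seg_len = values[i], 1
        else:
            seg_sum += values[i]  # homogeneous: extend the current segment
            seg_len += 1
    ends = boundaries[1:] + [len(values)]
    return list(zip(boundaries, ends))


# Hypothetical per-frame spectral centroids (Hz) for a sound with three textures
centroids = [100, 102, 98, 101, 500, 505, 498, 100, 99]
segments = segment_by_homogeneity(centroids, max_deviation=50)
```

Changing `max_deviation` is the kind of parameter adjustment that drives the trial-and-error process described earlier: smaller values produce more granular segments, larger values fewer.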

Automatic segmentation is an essential component of machine listening in my compositional practice and supports a creative workflow in which granular compositional units can be collected without my having to produce each one exhaustively from the ground up. This involves taking several whole sound files, often collected through additional automatic procedures, and designing an automatic segmentation algorithm to separate them into smaller parts. I can then employ technologies such as audio descriptors, statistical analysis, dimension reduction and clustering to organise these collections into groups. Such groups might be predicated on perceptual similarity, or formed to satisfy constraint-based procedures (such as in descriptor-driven concatenative synthesis). The results of these processes inspire and guide many facets of the compositional process, including sample selection and the grouping of material into units or objects. This way of working was particularly prevalent in Stitch/Strata, Reconstruction Error and Interferences, and I discuss the challenges of selecting appropriate segmentation tools for dividing large corpora in the sections on those works.

My understanding of, and engagement with, segmentation techniques developed throughout this research, particularly as my demands for more sophisticated automatic algorithms increased. To this end, I developed ReaCoMa, a set of ReaScripts for REAPER that, among other things, facilitate rapid and interactive automatic segmentation in a DAW. This package uses the command-line tools from the Fluid Decomposition Toolbox to perform the actual segmentation, making a variety of segmentation algorithms available within a single compositional environment.

Onset Detection

While segmentation is not strictly the same as onset detection, there is significant overlap between the two tasks. Fundamentally, both detect events at meaningful points of difference in a signal. I used onset detection in Refracted Touch to trigger events that would progress through pre-composed states. Works that use onset detection and have influenced mine include Rodrigo Constanzo’s Kaizo Snare (2019), Martin Parker’s gruntCount (2013) and Christos Michalakos’ Augmented Drum.

Implementations

In my practice, and particularly in early projects, I have tended to use the “dynamic split tool” that is part of REAPER. This segmentation tool is highly configurable, and I have used it to extract smaller samples from recordings, such as in Stitch/Strata for isolating phonetic sounds. With the release of the Fluid Decomposition Toolbox, its suite of segmentation algorithms became a major site of experimentation for me, providing several different models that each possess unique strengths and weaknesses. These tools have been creatively stimulating because they offer segmentation algorithms suited to materials for which amplitude-based slicing is ineffective. This makes the FluCoMa tools well suited to my practice, as I am mostly concerned with textural materials that do not respond well to amplitude-based segmentation.

Signal Decomposition

Signal decomposition is the “extraction and separation of signal components from composite signals, which should preferably be related to semantic units” (Ohm, 2004, p. 317). In audio and music composition, signal decomposition can be used to “unmix” composite digital audio, such as a recording containing several instruments. It can also be used with monophonic sounds, where the superimposed internal layers of a sound can be isolated from one another.

Signal decomposition techniques are often modelled on human listening and may attempt to reproduce computationally the types of filtering and separation that humans can perform. Harmonic-percussive source separation (HPSS) (see Fitzgerald, 2010), for example, attempts to isolate harmonic and percussive components from within a single sound. This can be useful for reducing the noisier components of a signal, or for shaping a harmonic aspect of a sound without affecting the noise. DEMO 3.1 provides an interactive example of this, where you can change the balance between harmonic and percussive components of a sample used in Reconstruction Error.
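The median-filtering approach to HPSS can be sketched with NumPy alone, in the spirit of Fitzgerald (2010). Steady harmonic partials form horizontal lines in a spectrogram and survive a median filter across time, while broadband percussive events form vertical lines and survive a median filter across frequency; comparing the two filtered versions yields soft masks. The filter width of 17 and the mask exponent of 2 are assumed values, and `rebalance` is a hypothetical helper hinting at how a harmonic/percussive balance control might reweight a complex STFT before resynthesis (the STFT and its inverse are not shown).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter_axis(S, width, axis):
    """Median filter along one axis of a 2-D array (edge-padded, odd width)."""
    pad = width // 2
    pad_width = [(0, 0), (0, 0)]
    pad_width[axis] = (pad, pad)
    padded = np.pad(S, pad_width, mode="edge")
    windows = sliding_window_view(padded, width, axis=axis)
    return np.median(windows, axis=-1)


def hpss_masks(S, width=17, power=2.0, eps=1e-10):
    """Soft harmonic and percussive masks for a magnitude spectrogram.

    S has rows = frequency bins and columns = time frames.
    """
    H = median_filter_axis(S, width, axis=1)  # smooth across time: keeps steady partials
    P = median_filter_axis(S, width, axis=0)  # smooth across frequency: keeps broadband clicks
    Hp, Pp = H ** power, P ** power
    total = Hp + Pp + eps
    return Hp / total, Pp / total


def rebalance(stft, mask_h, mask_p, harmonic_gain=1.0, percussive_gain=1.0):
    """Reweight a (complex) STFT; inverting it would give the rebalanced sound."""
    return stft * (harmonic_gain * mask_h + percussive_gain * mask_p)


# Toy spectrogram: one steady partial (bin 20) and one click (frame 40)
S = np.zeros((64, 64))
S[20, :] = 1.0
S[:, 40] = 1.0
mask_h, mask_p = hpss_masks(S)
harmonic_only = rebalance(S, mask_h, mask_p, harmonic_gain=1.0, percussive_gain=0.0)
```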

Other techniques such as non-negative matrix factorisation (NMF) (see Fitzgerald et al., 2005) may not be designed with specific decomposition tasks in mind, and instead, provide a generalised algorithm for extracting “components” from a source. Such techniques could form the basis for a specific task, such as separating distinct instrumental parts from each other in an ensemble recording, or might be used more speculatively to decompose sounds into arbitrary parts.
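As an illustration of a generalised NMF, the sketch below factorises a non-negative matrix V (for audio, typically a magnitude spectrogram) into W (spectral templates) and H (their activations over time), using the multiplicative update rules of Lee and Seung for the Euclidean cost. The iteration count, random initialisation and toy rank-2 data are assumptions for the example. Each component k can be rebuilt as the outer product of W[:, k] and H[k]; summing all components gives W @ H, which approximates V.

```python
import numpy as np


def nmf(V, n_components, n_iter=200, seed=0, eps=1e-10):
    """Factorise non-negative V (rows x cols) as W @ H with multiplicative updates.

    W: rows x k templates; H: k x cols activations (Euclidean cost).
    """
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], n_components)) + eps
    H = rng.random((n_components, V.shape[1])) + eps
    for _ in range(n_iter):
        # Each update multiplies by a non-negative ratio, so W and H stay non-negative
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


def relative_error(V, W, H):
    return np.linalg.norm(V - W @ H) / np.linalg.norm(V)


rng = np.random.default_rng(1)
V = rng.random((20, 2)) @ rng.random((2, 30))  # toy non-negative data of rank 2
W0, H0 = nmf(V, 2, n_iter=0)                   # the initial guess only
W, H = nmf(V, 2, n_iter=300)
components = [np.outer(W[:, k], H[k]) for k in range(2)]  # one "layer" per component
```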

Ultimately, there are a variety of signal decomposition approaches and techniques that can support different creative intentions. Decomposition tasks can be approached with a set of prior goals and aims, and their success can be improved by working with an algorithm, learning how it operates and tuning its parameters. On the other hand, an algorithm might support a speculative computer-led process where creative value is discovered in the decomposition process and its outcomes. In such a scenario, the emphasis is on observing what emerges from the decomposition process, rather than getting closer to a solution that satisfies pre-determined goals.

As my practice has developed, signal decomposition has become a prominent part of my approach to composing with samples. In later projects, particularly Reconstruction Error and Interferences, decomposing sounds was used widely to process samples for creative effect, as well as for enhancing the capabilities of machine listening by extracting specific characteristics from a sound and operating on them. Decomposition also has a place in my practice for the speculative deconstruction of textural materials and can be creatively fruitful even when the outcomes are unpredictable.

Implementations

Musical applications of signal decomposition form an emerging commercial market and can be found in tools such as iZotope RX. These tools are expensive and closed-source, and little information is available about how they function. More accessible and open implementations of signal decomposition algorithms exist, but they often require knowledge of a programming language such as Python and are generally not designed for real-time applications. In this sphere, there are projects such as Northwestern University’s nussl, the University of Surrey’s untwist, The Bregman Toolkit (see Eldridge et al. (2016) for an application of the toolkit) and Spleeter. A more recent set of tools has been produced by the Fluid Corpus Manipulation (FluCoMa) project, which focuses specifically on the creative applications of signal decomposition. These algorithms have supported much of my experimentation with, and incorporation of, decomposition techniques in my practice.

Conclusions on Machine Listening

There are three aspects of machine listening that are important in my practice. Audio segmentation helps me to automatically derive compositional materials by dividing long sounds into smaller ones. Signal decomposition can help me to access the inner components of sounds or to find unforeseen ones. Audio descriptors are the backbone of how the computer listens, producing data that describes the content of a signal. The next section, [3.3 Machine Learning], gives an overview of how machine learning fits into my practice and approach to composition.

Machine Learning

Machine learning is a branch of artificial intelligence that focuses on building algorithms that enable machines to learn from data in order to improve their results (Mitchell, 1997). Machine learning algorithms create models from training data, which is often supplied in large amounts. Using the model it creates, a machine can make predictions, find patterns or generate new data based on what it has “learned” through training. Because of this, machine learning algorithms can be used in a wide variety of applications, provided that there is suitable data from which the task at hand can be learned. This can be especially useful where conventional algorithmic approaches are hard to conceptualise or develop; instead, the computer can learn how to solve the problem itself.

Applications of Machine Learning

In music and audio, machine learning has been used toward a number of technical and creative goals. For example, Tone Transfer, made by Google Research, allows users to perform “style transfer” between two digital audio recordings. The result is a hybridisation of the two digitally stored sounds, in which the timbre of one is transplanted onto the dynamics and expressive qualities of the other. Machine learning has also been used to analyse the structure of music in order to create a model that represents a certain style or compositional method. The model can then be used to generate new works that are similar to those on which the machine learning algorithm was trained. Work in this area includes folk-rnn (Sturm et al., 2016), for generating folk music, and DeepBach (Hadjeres et al., 2017), for creating works in the style of Bach.

In my practice, I use machine learning in conjunction with machine listening technologies. Machine listening often produces large quantities of data, which can be difficult to make sense of or to grapple with in a humanly meaningful way. Machine learning provides strategies for transforming, analysing and querying such data. Thus, I use machine learning as a way of interacting with, and enhancing, machine listening through analysis. Examples of this include:

Dimension Reduction

Dimension reduction transforms data from a high-dimensional space to a low-dimensional space while attempting to maintain the meaningful properties of the original data. There are many algorithms for this, each with its own advantages and drawbacks. While composing Reconstruction Error, I experimented with several dimension-reduction techniques, such as those outlined in McInnes et al. (2018). The strengths and weaknesses of certain algorithms, and my motivations for incorporating dimension reduction into the technical aspect of my practice, are discussed briefly in [4.4.2.4 Dimension Reduction].

Dimension reduction can reduce the size and redundancy of data produced by a large number of audio descriptors before that data is used in further machine learning processes. The computer reduces the data to fewer, more significant values by automatically determining what is salient in it. Ideally, the result will retain the significant statistical features of the original data while discarding what is statistically insignificant. In theory, when this process is applied to audio descriptor analysis, it can remove data that does not describe meaningful perceptual characteristics of a sound.

This reduces the need for me to curate specific descriptors that I anticipate will capture and represent the salient aspects of a sound. For example, if I want to compare the perceptual similarity of sounds in a corpus, I can build a large dataset from many different descriptors and use dimension reduction to remove data that is statistically redundant. Ideally, this filters out data that does not describe the most prominent perceptual features by which the sounds differ from one another. This reconfigures the relationship between composition, analysis and audio descriptors. Rather than my curating specific descriptors that map onto perceptual characteristics, the computer automatically derives these from a collection of potential features. Because of this, processes that follow the dimension-reduction stage are inflected by the computer in a way that manual curation of audio descriptors does not engender.
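McInnes et al. (2018) describe UMAP, a non-linear technique; the sketch below swaps in principal component analysis (PCA), the simplest linear method, to show the general idea under stated assumptions. Descriptor columns are standardised, so that values measured in Hz do not dominate values between 0 and 1, and are then projected onto the directions of greatest variance. In the toy data, three of the four hypothetical “descriptors” are rescaled copies of one another, so two components retain almost all of the variance.

```python
import numpy as np


def pca_reduce(X, n_components):
    """Standardise descriptor columns, then project onto the top principal components.

    Returns (projected data, fraction of variance explained by each kept component).
    """
    X = np.asarray(X, dtype=float)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # constant descriptors carry no information; avoid dividing by 0
    Z = (X - X.mean(axis=0)) / std
    # Rows of Vt are principal directions, ordered by singular value (largest first)
    _, s, Vt = np.linalg.svd(Z, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return Z @ Vt[:n_components].T, explained[:n_components]


rng = np.random.default_rng(0)
a, b = rng.standard_normal(100), rng.standard_normal(100)
# Four hypothetical descriptors for 100 sounds; three are redundant rescalings of `a`
X = np.column_stack([a, 2 * a, b, -a])
reduced, explained = pca_reduce(X, 2)
```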

Dimension reduction also supports mapping samples into two-dimensional visual spaces, which is discussed later in [3.4.3 Mapping]. It can also be used as a general preprocessor to improve the results of other computational processes such as clustering or classification. Depending on how effectively the dimension reduction algorithm retains “meaningful” data, it can help to solve issues such as the “curse of dimensionality” or to reduce the influence that statistically insignificant data has on other processes that employ distance-based metrics.

Clustering

Clustering algorithms partition data objects (patterns, entities, instances, observances, units) into a certain number of clusters (groups, subsets, or categories) (Xu & Wunsch, 2008, p. 4). What constitutes a cluster is context dependent. Generally, clustering aims to draw together data objects in terms of “internal homogeneity and external separation”, such that “data objects in different clusters should be dissimilar from one another” (Xu & Wunsch, 2008, p. 4).

In my practice, I have used clustering for two main tasks. The first task is to aggregate digital audio samples based on audio descriptor similarity. Ideally, perceptually similar sounds will have similar audio descriptor values and will be placed in the same cluster, while dissimilar sounds will be separated into distinct groups. This functions as a compositional device for forming groups of homogenous materials, and as a way for the computer to structure my engagement with previously unknown sonic materials. Xu and Wunsch (2008, p. 8) refer to Anderberg (1973), who “saw cluster analysis as ‘a device for suggesting hypotheses’” and asserted that “a set of clusters is not itself a finished result but only a possible outline”. This description of the clustering process is commensurate with my incorporation of it into compositional practice. For me, it is a way of having the computer suggest one possible grouping of material, rather than trying to uncover an inherent feature of the data that may be waiting for the right algorithm and set of parameters to find it. This was part of the compositional process in Reconstruction Error and Interferences, in which clustering informed the way that I approached two large corpora of previously unheard samples. This is discussed in [4.4.2.5 Clustering] and [4.4.2.3 Pathways Through the Corpus].
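A minimal k-means sketch illustrates the first task. It assumes each sample has already been reduced to a short, normalised descriptor vector (the six vectors below are invented) and uses a deterministic farthest-point initialisation so that the result is repeatable. In keeping with Anderberg’s view, the output is one possible grouping: changing k or the descriptors yields different, equally provisional groupings.

```python
import numpy as np


def kmeans(X, k, n_iter=100):
    """Plain k-means with deterministic farthest-point initialisation.

    Returns (cluster label per row, centroids).
    """
    X = np.asarray(X, dtype=float)
    centroids = [X[0]]
    for _ in range(1, k):
        # Next centroid: the point farthest from all centroids chosen so far
        dist = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[np.argmax(dist)])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)  # assign each sample to its nearest centroid
        updated = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                            else centroids[j] for j in range(k)])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return labels, centroids


# Hypothetical normalised descriptors per sample: [brightness, noisiness]
samples = [[0.10, 0.10], [0.12, 0.09], [0.08, 0.11],
           [0.90, 0.85], [0.88, 0.90], [0.92, 0.87]]
labels, centroids = kmeans(samples, k=2)
```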

The second task is to support automatic segmentation based on the similarity of contiguous segments. By clustering together audio descriptor data from highly granular slices of a sound file, a novel form of automatic segmentation can be applied that produces good results for dividing sounds based on significant variations of an audio descriptor or set of descriptors. This is described in more detail in [3.2.2 Audio Segmentation].
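The second task can be sketched as follows, assuming a sound has already been cut into very short slices, each described by a descriptor vector (the values below are invented). A compact k-means helper is included so that the example is self-contained. Slices are clustered, and a segment boundary is placed wherever neighbouring slices fall into different clusters. The final two slices return to the first cluster but still form a separate segment, because only contiguous slices are merged.

```python
import numpy as np


def kmeans_labels(X, k, n_iter=100):
    """Compact k-means with deterministic farthest-point initialisation."""
    X = np.asarray(X, dtype=float).reshape(len(X), -1)
    centroids = [X[0]]
    for _ in range(1, k):
        dist = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[np.argmax(dist)])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        labels = np.linalg.norm(X[:, None] - centroids[None], axis=2).argmin(axis=1)
        updated = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                            else centroids[j] for j in range(k)])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return labels


def cluster_segmentation(slice_features, k):
    """Cluster fine-grained slices, then merge contiguous slices sharing a label.

    Returns (start_slice, end_slice) pairs with exclusive ends.
    """
    labels = kmeans_labels(slice_features, k)
    starts = [0] + [i for i in range(1, len(labels)) if labels[i] != labels[i - 1]]
    ends = starts[1:] + [len(labels)]
    return list(zip(starts, ends))


# Hypothetical per-slice descriptor values (e.g. normalised flatness per short slice)
features = [[0.10], [0.12], [0.11], [0.90], [0.91], [0.10], [0.09]]
segments = cluster_segmentation(features, k=2)
```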

Combinatorial and Optimisation Problems

Machine learning can be used to solve optimisation and combinatorial problems without exploring every possible solution. One such algorithm, simulated annealing (SA), is used in Annealing Strategies as a means of discovering which combination of parameters in a vast possibility space will create the quietest possible output. My application of this algorithm is discussed in detail in [4.2.2.3 Simulated Annealing].
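The following generic simulated-annealing loop illustrates the principle behind this kind of search. It is not the implementation used in Annealing Strategies: `toy_loudness` is a hypothetical stand-in cost (in the piece, the cost would come from measuring the loudness of the output), the parameters are assumed to lie in [0, 1], and the starting temperature, cooling rate and step size are arbitrary. The Metropolis rule occasionally accepts worse settings, which lets the search escape local minima before the temperature falls.

```python
import math
import random


def anneal(cost, initial, neighbour, t0=1.0, cooling=0.999, n_steps=5000, seed=0):
    """Generic simulated annealing with Metropolis acceptance and geometric cooling."""
    rng = random.Random(seed)
    current, current_cost = initial, cost(initial)
    best, best_cost = current, current_cost
    t = t0
    for _ in range(n_steps):
        candidate = neighbour(current, rng)
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Always accept improvements; accept worse moves with probability exp(-delta / t)
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current, current_cost
        t *= cooling  # lower temperature: fewer uphill moves as the search proceeds
    return best, best_cost


def toy_loudness(params):
    """Hypothetical stand-in for measuring how loud the output is with these settings."""
    return sum((p - 0.3) ** 2 for p in params)


def nudge_one(params, rng, step=0.1):
    """Perturb one randomly chosen parameter, keeping it within [0, 1]."""
    new = list(params)
    i = rng.randrange(len(new))
    new[i] = min(1.0, max(0.0, new[i] + rng.gauss(0.0, step)))
    return new


start = [0.9, 0.1, 0.8, 0.05]
best, best_cost = anneal(toy_loudness, start, nudge_one)
```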

Signal Processing

Machine learning also finds its way into my practice through signal processing. Some digital signal processing (DSP) techniques, such as non-negative matrix factorisation (NMF), draw on machine learning to solve problems. NMF, like clustering, can be a technique for speculatively exploring sound. In this case, it decomposes a composite audio file into many components that, when summed, approximately recreate the original. I often find it fruitful to decompose sounds with a technique such as NMF and to react to the results it produces as a form of sonic exploration. Thus, the decomposition process can guide me to detailed and subtle sounds without my having to generate specific sounds from a “blank slate”.

Machine Learning As Exploration In My Practice

Machine learning enables me to explore compositional ideas and hypotheses with the aid of the computer. This process is predicated on communicating compositional problems as data from which results can be extracted. For example, instead of my searching a corpus manually for a specific kind of sound, machine learning can act as a mediator by offering the results of its own search procedure. Another example would be using machine learning to perform tasks such as clustering, in which samples are collected into perceptually homogenous groups. The results of this can be novel, and can help to develop my nascent motivations and questions into fully fledged concepts, plans and ideas for pieces. In doing this, though, I have to relinquish some control to the computer and accept that some aspects of machine learning are enclosed in “black boxes” that I cannot decipher. However, this is a controllable aspect of my practice: I can decide at any point what control I offer to the computer and what I retain for myself.

A framework for conceptualising this range of computer autonomy is “Lose Control, Gain Influence” (LCGI), put forward by Alberto De Campo (2014). The article in which this concept is proposed addresses parametric control of devices and digital instruments with multiple complex and interconnected parameters. De Campo (2014, p. 217) describes LCGI as “gracefully relinquishing full control of the process involved, in order to gain higher-order forms of influence”. By giving up direct control, one can unlock new modes of working with the computer toward creative ends, based on influencing the machine rather than manipulating it directly. De Campo argues that new possibilities for interacting with computers emerge from exploring the territory between complete control over the computer and the relinquishing of all control. Although this framework was intended for interface design and instrument control, LCGI is also useful for conceptualising the spectrum of computer autonomy in computer-aided composition with content-aware programs, especially those involving machine learning.

Furthermore, LCGI as a concept foregrounds a set of contemporary uses of computers in art in which computational decision-making sits at the core of practice. Deferring critical processes of selection, curation or realisation does not merely serve the purpose of making artworks easier or faster to produce. This deferral can assist in developing a creative methodology where ideas are explored computationally, letting the computer inflect and shape a process, or even be fully responsible for it. Fiebrink and Caramiaux (2016, p. 18) discuss these types of affordances and how communicating with machine learning algorithms through data can be formative toward creative ends.

By affording people the ability to communicate their goals for the system through user-supplied data, it can be more efficient to create prototypes that satisfy design criteria that are subjective, tacit, embodied, or otherwise hard to specify in code. (Fiebrink & Caramiaux, 2016, p. 18)

Further to this, they reflect on machine learning algorithms as “design tools” which can be used for “sketching incomplete ideas” (Fiebrink & Caramiaux, 2016, p. 18). In this reflection, the nature of creative problems as “wicked design problems” is foregrounded.

Creators of new music technologies are often engaged with what design theorist Horst Rittel described as “wicked” design problems: ill-defined problems wherein a problem ‘definition’ is found only by arriving at a solution. (Fiebrink & Caramiaux, 2016, p. 18)

By communicating with a machine learning algorithm through data, compositional questions do not have to be clear or specified in exact terms; rather, they can be formulated as bundles of machine-derived information from which the computer generates its own suggestions and “answers”. For me, this process manifests in the way I use machine learning to “sketch” ideas. By doing this, I can solve my own “wicked” compositional problems, exploring ideas computationally and structuring compositional questions as tasks that bring them into a consistent and graspable form. Throughout this creative process, I traverse De Campo’s LCGI spectrum, fluctuating between giving away much of my compositional control to the computer and, at other times, retaining total control. For example, I may take some results of a machine learning process and be inspired to compose with them directly. In this case, I retain most of my control. In another scenario, I may re-run the algorithm with new parameters, change the data or create new data in order to affect the results themselves. In the latter scenario, I continually give away control to the computer so that it leads me through the exploration process. This kind of iterative compositional cycle has already been discussed in [2. Preoccupations], where it is portrayed as a network of events that feed in and out of each other.

Compositional Applications of Machine Listening and Learning

Machine listening has expanded the possibilities of computer-aided composition by giving composers access to sophisticated analytical techniques. In this section, I outline the work of various artists who use machine listening and reflect on how their approaches relate to my own practice and compositional interests.

Embracing The Machine In Machine Listening

Modelling human listening computationally is a complex problem and is often an approximation of the deeply entangled psychological processes by which humans interpret sound. Some artists embrace this problem and foreground the digital, inorganic computer behaviours produced by machine listening algorithms as a central aesthetic of their work. The work of Owen Green happily confronts and exacerbates this, pushing bespoke machine listening software to its limits and designing algorithms that teeter on the edge of breaking or that are applied toward difficult (perhaps impossible) listening tasks.

In Race To The Bottom (2019) (see VIDEO 3.2), Green creates several machine listening modules that together form a simultaneously cooperative and antagonistic improvisational partner, which responds to him bowing a cardboard box. In VIDEO 3.3, Green discusses both the musical and the technical aims of this work. For him, the use of machine listening in this piece is a strategy for managing form while avoiding the explicit modelling of musical time. At the core of the machine listening system is a series of onset detectors that are prone to over-detecting onsets in the live input signal. The result is an ensemble of rapid impulse generators connected to other modules that produce and manipulate sound. One of the modules driven by these rapid impulses is a harmonic-percussive decomposition algorithm, which lets Green process each layer in isolation. His goal in doing so is to be able to morph each component into its counterpart. The harmonic component is processed with ring modulation, using the interval between impulses to control the frequency of the carrier signal. This distorts the input signal, adding sidebands and artefacts to the sound. The percussive layer is smeared in time using a sample-and-hold, again driven by the timings of the impulse trains. For Green, this produces something more “harmonic”, based on his personal notion that harmonic sounds are things that repeat in time.
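The two impulse-driven treatments can be sketched in Python as below. This is not Green’s implementation: it assumes the onset times have already been detected (in seconds) and that the signal is a plain list of samples, and all function names are hypothetical. The carrier frequency is taken as the reciprocal of each inter-onset interval, so impulses 5 ms apart would give a 200 Hz carrier.

```python
import math

def carrier_frequencies(onsets):
    """Carrier frequency (Hz) for each inter-onset interval: 1 / interval."""
    return [1.0 / (b - a) for a, b in zip(onsets, onsets[1:])]

def ring_modulate(signal, onsets, sr):
    """Multiply the signal by a sine carrier that is retuned at every onset to the
    reciprocal of the interval up to the next onset; the phase runs on continuously."""
    freqs = carrier_frequencies(onsets)
    out, phase, seg = [], 0.0, 0
    for n, x in enumerate(signal):
        t = n / sr
        while seg < len(freqs) - 1 and t >= onsets[seg + 1]:
            seg += 1  # move to the interval that contains time t
        phase += 2 * math.pi * freqs[seg] / sr
        out.append(x * math.sin(phase))
    return out

def sample_and_hold(signal, onsets, sr):
    """Freeze the input value at each onset and hold it until the next one."""
    onset_indices = {round(t * sr) for t in onsets}
    out, held = [], 0.0
    for n, x in enumerate(signal):
        if n in onset_indices:
            held = x
        out.append(held)
    return out
```

With an over-eager onset detector the intervals become very short and irregular, so the carrier jumps around the audible range and the held values change rapidly; that instability, rather than accuracy, is what the treatments exploit.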

VIDEO 3.2: Owen Green performing "Race to the Bottom" (2019).

In addition to Green’s motivations for managing form without specifically modelling time, he presents this piece as a setup in which the machine listening program is tasked with interpreting amplitude onsets from the complex textural sounds of bowed cardboard. By doing this, he creates an unpredictable situation: the machine listening implementation is likely to fail. However, the failure is embraced aesthetically, introducing indeterminacy to the listening machine’s behaviour and spontaneity to the performance. This approach foregrounds the aesthetics of machine listening and its behaviour. The machine will accurately and rapidly do what it is instructed to do, but the output is not guaranteed to be understandable, or even coherent, in relation to human listening. Instead of trying to mitigate this, Green embraces it and lets it inflect the shape of the improvisation.

There are several aspects of Green’s practice with machine listening that do not resonate with my compositional method:

I have no performative practice with an instrument. Many instrumental performers who work with live electronics, for example, Mari Kimura, Ben Carey, Anne La Berge and John Eckhardt, possess a level of virtuosity and training that is beyond my own, and that I consider essential to such a practice. Green playfully subverts this notion of instrumental virtuosity by playing a cardboard box. However, the knowledge he has acquired in playing, controlling and extracting a range of sounds from the box is, to me, virtuosic and skilful in itself.

I prefer a level of control over unpredictability and indeterminism that is not dependent on a single iteration or performance. Part of my compositional process is selecting outputs made by the computer, such as rendered audio or generated REAPER sessions. I want to be able to do this “out of time” in the studio, rather than experiencing machine listening behaviours in real-time and responding to this.

That said, Green’s work influences me in the way that machine listening algorithms can be pushed outside conventional operation to render creative results. I rarely subvert machine listening algorithms to the extent that Green does, but there is a resonance between our practices in how machine listening is used less like a tool and more like a lens for exploring ideas. Machine listening does not always have to produce results that are commensurate with human listening; rather, as a composer, it can be valuable to observe how a set of constraints or behaviours on machine listening can be explored to reach unexpected creative outcomes. This can be found prominently throughout Annealing Strategies and to an extent in Refracted Touch. This kind of exploration with machine listening is foregrounded through FTIS in Interferences.

VIDEO 3.3: Owen Green presentation on "Race To The Bottom".

A Spectrum of Perceptual Congruence

Although machine listening does not always have to produce results that are perceptually congruent with human listening to be creatively useful, working along a spectrum of congruence can be fruitful territory in which machines produce novel outcomes. With these interests in mind, composers and creative coders have used audio mosaicing and descriptor-driven concatenative synthesis techniques such as those found in CataRT. The central aim of these techniques is to match segments of a source sound to segments of a target sound, using a measure of distance between their audio descriptor feature vectors. Given enough diverse source segments, one can ideally produce a new output that is perceptually similar to the target. This process is not perfect, and may result in a sound that does not perceptually resemble the target. Between the computer perfectly recreating the target sound and the computer producing results unrelated to the perception of the target, there is a spectrum of congruence that serves a wide range of aesthetic exploration.
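The core matching step can be sketched as a weighted nearest-neighbour search. In this hypothetical Python example, each grain is described by only two normalised descriptors, and the weights show how emphasising one feature changes which grain is chosen; it is not CataRT’s code, and real systems use many more descriptors and faster search structures such as k-d trees.

```python
import math

def weighted_distance(a, b, weights):
    """Euclidean distance between two descriptor vectors, with a weight per feature."""
    return math.sqrt(sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights)))

def match_grains(target_frames, corpus, weights):
    """For each target frame, pick the name of the corpus grain whose descriptors are nearest."""
    return [min(corpus, key=lambda name: weighted_distance(corpus[name], frame, weights))
            for frame in target_frames]

# Hypothetical normalised descriptors: (brightness, loudness).
corpus = {"glass": (0.9, 0.4), "drum": (0.2, 0.9), "voice": (0.5, 0.5)}
target = [(0.85, 0.45), (0.25, 0.8), (0.55, 0.55)]
print(match_grains(target, corpus, weights=(1.0, 1.0)))  # ['glass', 'drum', 'voice']
```

Setting the weights to `(0.0, 1.0)` ignores brightness, so `match_grains([(0.9, 0.55)], corpus, (0.0, 1.0))` returns `['voice']`, whereas equal weights return `['glass']`.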

In Constanzo’s C-C-Combine (see VIDEO 3.4), source-to-target matching is performed with grains of source audio as short as 40 milliseconds. A range of controls is available for weighting audio features in the matching process. This becomes an expressive control because the discrepancies inherent in matching two sounds can be compensated for to improve congruence. If, for example, a source grain is one semitone away in pitch from the target, one can ask C-C-Combine to re-pitch the source grain by that difference so that it matches the target better. The same process can be applied to the loudness of the source. Manipulating these compensation constraints can tighten the matching where the sounds differ perceptually. As the source sounds are compensated, the behaviour of the machine is foregrounded aesthetically and the artifice of that process becomes more evident in the output. Weighting features and controlling the compensation intensity transforms C-C-Combine from an audio effect into an interface for exploring the behaviour of a machine listening algorithm. As Constanzo says in VIDEO 3.5, “we specifically want quantisation error, and the ‘art’ is in how much”.
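The compensation described above reduces to simple arithmetic. The sketch below is an assumption about how such compensation could be computed, not C-C-Combine’s code: pitch (as MIDI note numbers) is compensated by a resampling ratio of 2^(semitones / 12), which also changes the grain’s duration, and loudness by a gain in decibels. The `amount` parameter is a hypothetical stand-in for a compensation-intensity control.

```python
import math

def compensate(source_pitch, target_pitch, source_db, target_db, amount=1.0):
    """Return (playback-rate ratio, gain in dB) that move a source grain toward the
    target. Pitches are MIDI note numbers; amount 0 = none, 1 = full compensation."""
    semitones = (target_pitch - source_pitch) * amount
    rate = 2 ** (semitones / 12)  # resampling ratio that shifts pitch by `semitones`
    gain_db = (target_db - source_db) * amount
    return rate, gain_db

rate, gain = compensate(source_pitch=60, target_pitch=61, source_db=-20, target_db=-14)
# rate is about 1.0595 (one semitone up); gain is 6.0 dB (roughly double the amplitude)
```

Values of `amount` between 0 and 1 leave part of the discrepancy in place, which is one way to think about deciding “how much” quantisation error to keep.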

VIDEO 3.4: C-C-Combine.

Because C-C-Combine exists primarily for real-time use, I do not use it in my own work. However, the concepts in its design and implementation resonate with other aspects of my creative coding. For example, Constanzo’s work on selecting and curating audio descriptors for analysis has both strengthened my knowledge in this area and informed my preferences. Furthermore, reading and engaging with the code for C-C-Combine has taught me how the treatment, sanitisation and compensation processes applied to data can affect feature vector matching. In principle, much of this technical research and design can be applied to off-line processing such as in FTIS.

VIDEO 3.5: Rodrigo Constanzo Creative Coding Lab Symposium Presentation.

In a similar vein to C-C-Combine, Ben Hackbarth’s AudioGuide is a program for concatenative synthesis that works by matching a selection of source grains to a target sound. Whereas C-C-Combine and CataRT work in real time, AudioGuide works in non-real-time, which allows more complex matching schemas and computationally exhaustive constraints, because results do not have to be computed quickly enough to be scheduled live. Hackbarth (2010) proposes AudioGuide as a solution to his own compositional problems, including the labour-intensive process of constructing gestural material from samples, and the need to layer sounds densely so that “evocative” sounds reminiscent of “time-varying” acoustic morphologies can be made.

AudioGuide offers an extensive interface for shaping this process, allowing corpus filtering, data transposition, normalisation and deeply customisable constraints for sample matching. This design reflects Hackbarth’s aim of answering his own compositional questions, and lets the program construct long and complex continuous sections of musical material from such configurations. In VIDEO 3.6, Hackbarth demonstrates how he used this interface to great effect in his piece Volleys of Light and Shadow (2014). Although AudioGuide requires the user to specify matching and concatenation constraints in an abstract configuration syntax (see CODE 3.1), this syntax is a powerful interface through which the complexity of superimposition and time-varying matches can be defined and expressed. In this sense, the machine listening component, however deeply it is embedded behind layers of configuration, becomes an interface through which one can show the computer an example and then “guide” the pathway it takes to reach it. In the same video, Hackbarth posits that a program like AudioGuide is not necessarily a new kind of tool; rather, using it resembles a composer testing out harmonic or melodic structures at the piano and then orchestrating them fully later.

```python
# Target: the sound to be reconstructed, segmented with an amplitude threshold
TARGET = tsf('cage.aiff', thresh=-25, offsetRise=1.5)
# Corpus: the source sounds from which segments are selected
CORPUS = [csf('lachenmann.aiff')]
# Search: keep the 25% of corpus segments closest in duration and power,
# then choose the closest of those by MFCCs
SEARCH = [
    spass('closest_percent', d('effDur-seg', norm=1), d('power-seg', norm=1), percent=25),
    spass('closest', d('mfccs'))
]
# Superimposition settings: maxSegment caps how many corpus segments can be layered
SUPERIMPOSE = si(maxSegment=6)
```

CODE 3.1: An AudioGuide options file.

AudioGuide has directly influenced the technical aspects of my practice and, by extension, its creative possibilities. This is evident when comparing the software I programmed at the start of this PhD research, which was real-time and built in Max, with my later tools, which are text-based and mostly written in Python for use alongside a DAW. A significant influence from AudioGuide that shaped this trajectory is the separation of audio segmentation, machine listening analysis and synthesis (concatenation) into discrete stages of a processing pipeline. Separating these stages is not only pragmatic for development and maintenance; it also allows the user to hone each stage in isolation. This matters because each component in the pipeline affects the results of the next. For example, segmentation is often the first step in my practice, and the granularity at which it is performed affects how recognisable the source material is when I compose with the segmented units. As such, the separation of processes becomes a powerful interface for sculpting the overall process and for engaging with sound materials with the aid of the computer.
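A minimal sketch of such a staged pipeline follows, assuming a mono signal held in a Python list. Each stage is a separate function, so the segmentation size, the descriptors and the synthesis rule can be changed independently. The fixed-size segmentation, the two descriptors (RMS and zero-crossing rate) and the sort-by-descriptor synthesis are illustrative choices, not those of AudioGuide or FTIS.

```python
import math

def segment(signal, size):
    """Stage 1: cut the signal into fixed-size units; `size` sets the granularity."""
    return [signal[i:i + size] for i in range(0, len(signal), size)]

def analyse(unit):
    """Stage 2: describe a unit by its RMS level and zero-crossing rate."""
    rms = math.sqrt(sum(x * x for x in unit) / len(unit))
    crossings = sum(1 for a, b in zip(unit, unit[1:]) if (a < 0) != (b < 0))
    return rms, crossings / max(len(unit) - 1, 1)

def synthesise(units, features, key=0):
    """Stage 3: re-concatenate the units, ordered by one descriptor (0 = RMS)."""
    order = sorted(range(len(units)), key=lambda i: features[i][key])
    return [x for i in order for x in units[i]]

signal = [0.1] * 4 + [0.9, -0.9, 0.9, -0.9] + [0.5] * 4
units = segment(signal, 4)
features = [analyse(u) for u in units]
output = synthesise(units, features)  # quietest unit first, loudest last
```

Changing the size passed to `segment` alters every downstream result, which is the dependency between stages described above; keeping the stages apart makes that change easy to audition.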

Additionally, software that operates in non-real-time is conducive to my iterative development cycle of moulding the computer’s behaviour through configuration files and auditioning or experimenting with rendered outputs. As I developed my own tools, such as FTIS and its nameless Python-based predecessor, this iterative compositional workflow became prominent and opened up possibilities for composing with sample-based material that were difficult or arduous in other environments such as Max. Storing configuration data in files separate from the sonic materials creates a workflow that allows me to step between the mindset of massaging analytical processes and that of evaluating sonic outputs. This workflow became an important aspect of how Refracted Touch and Interferences were composed, and was the workflow that FTIS aimed to engender.
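One way to keep configuration apart from sonic material is sketched below in Python. The JSON layout, its keys and the `render_name` helper are hypothetical and do not reproduce FTIS’s actual configuration format; the idea is that hashing a canonical form of the settings gives each render a name tied to the configuration that produced it, which makes outputs easy to audition side by side.

```python
import hashlib
import json
from pathlib import Path

def load_config(path):
    """Read analysis and synthesis settings from a JSON file kept apart from the audio."""
    return json.loads(Path(path).read_text())

def render_name(config):
    """Derive a stable output filename from the settings; key order does not matter."""
    canonical = json.dumps(config, sort_keys=True)
    return "render_" + hashlib.sha1(canonical.encode()).hexdigest()[:8] + ".wav"

# Two hypothetical configurations that differ only in segmentation granularity.
config_a = {"segment": {"window_ms": 100}, "analysis": ["loudness", "centroid"]}
config_b = {"segment": {"window_ms": 500}, "analysis": ["loudness", "centroid"]}
```

Editing the configuration file and re-running produces a new, distinctly named render, while earlier renders remain available for comparison.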

VIDEO 3.6: Ben Hackbarth presenting AudioGuide.

Mapping

Mapping is the process of spatially representing data, or of constructing relationships between sets of inputs and outputs, as in digital musical instrument (DMI) design (see Kiefer (2014), De Campo (2014), Hunt et al. (2003) and Tanaka (2010)). Combining machine listening with machine learning can be useful for producing such maps automatically.

In Sam Pluta’s more recent work, machine listening is used to transform the non-linear, multidimensional parameter spaces of chaotic synthesisers into lower-dimensional spaces. Using an auto-encoder that is trained on synthesiser parameters at the input and audio descriptor features at the output, Pluta employs the computer to discover how these two sets of data are related, and whether there is a simpler representation in terms of perceptual data that can describe the complex and co-dependent connections between the input parameters. This produces an intermediary map between the method of control for the synthesiser and the perception of the sound that is made.

If one imagines that all the possible states of the synthesiser are entangled by co-dependent parameters, a combination of machine learning and listening can “untangle that mess” by projecting it onto a flat surface on which perceptually similar states are positioned near each other and spatially distributed on a continuous sonic palette. This palette can be traversed linearly, while the internal state remains non-linear. In this way, the listening machine solves a problem that would otherwise involve a lengthy period of manual trial and error or familiarisation through practice. It also allows Pluta to explore a high-dimensional synthesis space with a smaller number of controls that are mapped onto it.
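The idea of traversing a flat palette while the underlying parameters remain entangled can be illustrated with a much simpler technique than Pluta’s neural network: inverse-distance weighting between stored synthesiser states that have already been placed on a 2-D plane. In this Python sketch the states, their positions and the parameter values are hypothetical, and blending the parameters of a chaotic synthesiser this way does not guarantee perceptually smooth results, which is part of what a trained network addresses.

```python
import math

def palette_lookup(xy, states, power=2.0):
    """Map a 2-D control position to a full parameter vector by inverse-distance
    weighting of stored states, each given as (2-D position, parameter list)."""
    weights, params = [], []
    for position, values in states:
        distance = math.dist(xy, position)
        if distance == 0:
            return list(values)  # exactly on a stored state
        weights.append(1.0 / distance ** power)
        params.append(values)
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, params)) / total
            for i in range(len(params[0]))]

# Hypothetical states: (position on the palette, [rate, depth, feedback]).
states = [((0.0, 0.0), [100.0, 0.2, 5.0]), ((1.0, 0.0), [400.0, 0.8, 1.0])]
halfway = palette_lookup((0.5, 0.0), states)  # equal weights: the parameter midpoint
```

Two controls (x, y) thus drive every parameter at once, which is the reduction in control dimensions that the paragraph above describes.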

Pluta presents this research in VIDEO 3.7. There is also a relevant thread on the Fluid Corpus Manipulation Discourse forum that documents the development of these ideas as they emerged and opens up some of the questions to a wider online community for discussion.

I have used a combination of machine listening and machine learning for similar purposes in my work. In Annealing Strategies, simulated annealing (SA) is used to discover a configuration of parameters that causes a Fourses synthesiser to produce the quietest possible output within a given subset of possibilities. As such, SA is used as a mapping technique between the parameters and an audio descriptor that measures loudness.
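A generic simulated annealing loop is sketched below in Python. In Annealing Strategies the cost would come from rendering the synthesiser and measuring the loudness of its output; here a hypothetical `toy_loudness` function stands in for that measurement, and the parameter bounds, step size and geometric cooling schedule are illustrative assumptions rather than the settings used in the piece.

```python
import math
import random

def toy_loudness(params):
    """Hypothetical stand-in for rendering the synth and measuring its loudness."""
    x, y = params
    return (x - 0.3) ** 2 + (y - 0.7) ** 2 + 0.1 * math.sin(10 * x) ** 2

def simulated_annealing(cost, start, bounds, steps=5000, t0=1.0, cooling=0.999, seed=1):
    """Minimise `cost` over a box-bounded parameter vector; returns (best, best_cost)."""
    rng = random.Random(seed)
    current, current_cost = list(start), cost(start)
    best, best_cost = list(current), current_cost
    temperature = t0
    for _ in range(steps):
        # Propose a neighbour by nudging one parameter, clamped to its range.
        candidate = list(current)
        i = rng.randrange(len(candidate))
        lo, hi = bounds[i]
        step = 0.1 * (hi - lo)
        candidate[i] = min(hi, max(lo, candidate[i] + rng.uniform(-step, step)))
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Always accept improvements; accept worse moves with probability exp(-delta / T).
        if delta < 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
        temperature *= cooling  # geometric cooling schedule
    return best, best_cost

best, best_cost = simulated_annealing(toy_loudness, [0.9, 0.1], [(0.0, 1.0), (0.0, 1.0)])
```

Early on, the high temperature lets the search wander through louder configurations; as it cools, the search settles into a quiet region. Running it many times with different seeds yields a set of candidate configurations to choose between.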

VIDEO 3.7: Sam Pluta discussing his work on synthesis parameter mapping using neural networks.

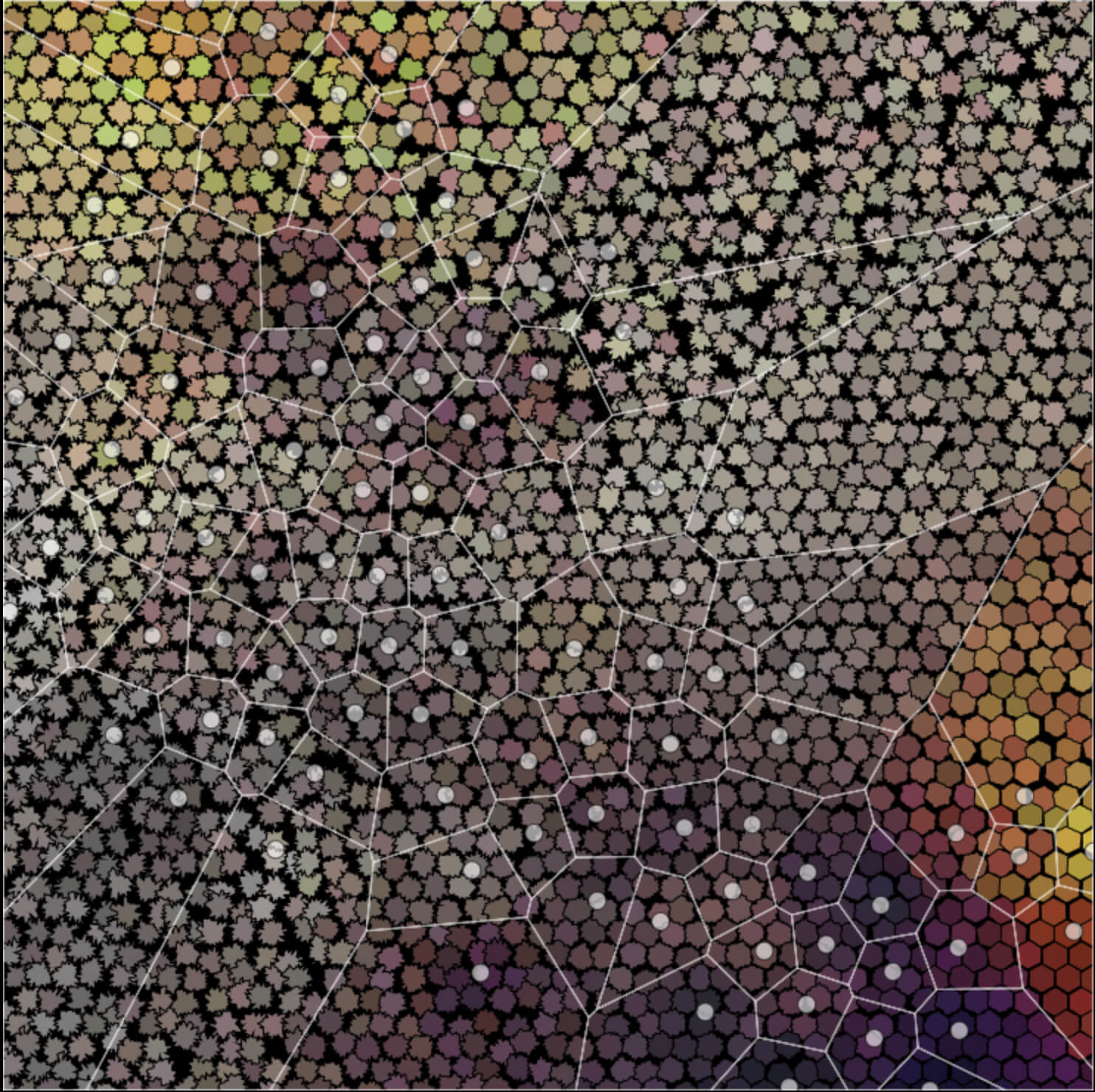

Thomas Grill has investigated how digital audio samples can be projected into two-dimensional navigable spaces based on audio descriptor features, notably in Grill (2012) and Grill and Flexer (2012). In this work, Grill researched strategies for portraying diverse collections of sounds on visually explorable two-dimensional maps. Using custom high-level descriptors such as “high-low”, “bright-dull”, “ordered-chaotic”, “coherent-erratic”, “natural-artificial”, “analogue-digital” or “smooth-coarse”, a projection is created that attempts to balance these qualities of the sound and to make the map as continuous and perceptually grounded as possible. While much creative coding with machine listening is tuned to the listening perception of the composer alone, Grill conducted surveys and listening tests with subjects to determine the influence that each of these custom descriptors should have on the final projection. Within any localised area of the projected space, perceptually similar sounds are placed close together, and increasingly different sounds are found by moving outward from a single point in any direction. Grill provides an interactive timbre map that lets a user navigate across a projection of some pre-prepared sounds. A screenshot showing the user interface can be seen in IMAGE 3.1.
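As an illustration of how a low-level descriptor relates to a perceptual axis such as “bright-dull”, the Python sketch below computes the spectral centroid, a common correlate of brightness, using a naive discrete Fourier transform. This is not one of Grill’s descriptors, which were custom, high-level and calibrated with listening tests; it only shows the kind of measurement such descriptors can build on. The test signals are hypothetical sine tones.

```python
import cmath
import math

def magnitude_spectrum(frame):
    """Magnitudes of DFT bins 0..N/2 (naive O(N^2) transform, fine for a sketch)."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]

def spectral_centroid(frame, sr):
    """Magnitude-weighted mean frequency in Hz; higher values tend to sound brighter."""
    mags = magnitude_spectrum(frame)
    freqs = [k * sr / len(frame) for k in range(len(mags))]
    total = sum(mags)
    return sum(f * m for f, m in zip(freqs, mags)) / total if total else 0.0

sr, n = 8000, 64
dull = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(n)]
bright = [math.sin(2 * math.pi * 2000 * t / sr) for t in range(n)]
```

For these pure tones the centroid sits at the tone’s frequency (about 1000 Hz and 2000 Hz); for noisy or harmonically rich samples it summarises where the spectral energy lies.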

Thomas Grill’s interactive timbre map inspired my own compositional processes. In Reconstruction Error and Interferences, dimension reduction techniques are used to project audio samples into a two-dimensional space. The function of this is to discard the aspects of the audio features that do little to explain the differences and similarities between the sounds. In a similar vein to Pluta’s work, the output can be used to simplify the exploration of possibilities, albeit in the form of samples rather than synthesis parameters.
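Dimension reduction of this kind can be sketched with principal component analysis (PCA). The Python below is a minimal, dependency-free version that finds the top two components by power iteration with deflation; it is an illustrative assumption, not necessarily the technique used in Reconstruction Error or Interferences, and a practical project would rather rely on a tested library implementation. The descriptor data are hypothetical.

```python
import math

def pca_2d(vectors, iterations=200):
    """Project descriptor vectors onto their first two principal components,
    found by power iteration on the covariance matrix with deflation."""
    n, d = len(vectors), len(vectors[0])
    means = [sum(v[j] for v in vectors) / n for j in range(d)]
    centred = [[v[j] - means[j] for j in range(d)] for v in vectors]
    cov = [[sum(row[a] * row[b] for row in centred) / (n - 1) for b in range(d)]
           for a in range(d)]
    components = []
    for _ in range(2):
        vec = [1.0 / math.sqrt(d)] * d
        for _ in range(iterations):
            product = [sum(cov[a][b] * vec[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in product))
            if norm == 0.0:
                break
            vec = [x / norm for x in product]
        # Rayleigh quotient: the variance (eigenvalue) along this component.
        eigenvalue = sum(vec[a] * cov[a][b] * vec[b] for a in range(d) for b in range(d))
        components.append(vec)
        # Deflation: remove this component so the next pass finds the following one.
        cov = [[cov[a][b] - eigenvalue * vec[a] * vec[b] for b in range(d)]
               for a in range(d)]
    return [[sum(row[j] * comp[j] for j in range(d)) for comp in components]
            for row in centred]

# Hypothetical 3-D descriptors: the first two features are strongly correlated.
vectors = [(0.0, 0.0, 0.01), (1.0, 2.0, -0.01), (2.0, 4.0, -0.01), (3.0, 6.0, 0.01)]
points = pca_2d(vectors)
```

Because the first two features move together, almost all of the variation is captured by the first axis of the projection, and the redundant information is effectively discarded.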

Content-Awareness and Augmentative Practice

When I incorporate a computer program that uses machine listening into my practice, I am using it to discover something related to the content of a sound, whether that sound is sample-based (such as in Stitch/Strata, Reconstruction Error or Interferences), uses live electronics (Refracted Touch) or employs digital synthesis (Annealing Strategies). Using content-aware programs to listen is a process in which I alternate between experimenting “hands-on” with materials produced by the computer and devising new listening tasks for the computer. I describe this process as an iterative development cycle. This positions the computer in my practice as an explorative and speculative companion rather than a tool for arriving more quickly at a set of prior knowns. It involves a process of question and answer, or “querying”, which is a central tenet of how I make compositional decisions.

While computers are excellent at solving problems that are well defined, compositional practice often requires solving issues with shifting criteria for success. As I approach a satisfactory solution to a creative idea, aim or goal, the solution might change, or I might realise the initial question was fundamentally shaped by a perspective that has been challenged through this experience. Therefore, the role of a content-aware program is to foster this compositional process by offering ad-hoc solutions to questions as they arise. Through this process, content-aware programs act as a sounding board and heuristic where the artistic questions that underpin a work can be developed and honed.

I consider this incorporation of content-aware programs in computer-aided composition to be augmentative, in comparison to practices that are semi-supervised or unsupervised. The next section, “A Spectrum of Autonomy”, outlines a variety of projects and pieces that have influenced me technologically and musically, and positions my practice as augmentative amongst a body of related work that occupies this spectrum of computer agency in creative practice. While these terms are commonly used in machine learning, here I am appropriating them to explain the differences between levels of computer agency and autonomy in creative practice.

A Spectrum Of Autonomy

Fake Fish Distribution (2012) - Icarus

An example of a semi-supervised approach is Fake Fish Distribution (2012) by Icarus. This album “in 1000 variations” is created algorithmically: each copy of the album differs slightly while sharing some structural features across variants of tracks. Using Max for Live (M4L), the standard timeline environment of Ableton Live is extended so that fixed “keyframes” (as explained in a Sound On Sound interview with Icarus) are represented as edges of breakpoint functions. More detail is provided in another interview between Icarus and Marsha Vdovin. See IMAGE 3.2 for a depiction of the breakpoint function module they used to interpolate between keyframes and VIDEO 3.8 for an explanation of how this system was implemented in Ableton Live.

Interpolating between these keyframes blends the properties of two known parametric configurations, depending on the amount of interpolation. With this technique, the computer can generate variations and hybrids of those precomposed configurations by mixing their characteristics to different degrees. As an external observer, it is not completely transparent to what extent the computer is responsible for deciding how far to interpolate and when to do this. However, the important facet of this generative strategy is that some immediate control over the shape and structure of the music is given away to this automated process. In the Sound On Sound interview, they describe a process of drawing shapes into the breakpoint editor in order to guide the result of that process. As such, I consider this approach to be semi-supervised, as the computer’s agency is a direct extension and control mechanism attached to human control.
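Keyframe interpolation of this kind can be sketched in a few lines of Python. The parameter names and values are hypothetical, and this is not Icarus’s Max for Live device: a piecewise-linear breakpoint function supplies the interpolation amount over time, and drawing a different shape into it yields a different hybrid of the two configurations.

```python
def interpolate(a, b, amount):
    """Blend two parameter configurations: amount 0 gives a, 1 gives b."""
    return {name: a[name] + (b[name] - a[name]) * amount for name in a}

def breakpoint_value(breakpoints, t):
    """Evaluate a piecewise-linear breakpoint function [(time, value), ...] at time t."""
    if t <= breakpoints[0][0]:
        return breakpoints[0][1]
    for (t0, v0), (t1, v1) in zip(breakpoints, breakpoints[1:]):
        if t <= t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return breakpoints[-1][1]

# Hypothetical keyframes and a drawn interpolation curve (time in bars).
keyframe_a = {"cutoff_hz": 200.0, "reverb_mix": 0.0}
keyframe_b = {"cutoff_hz": 2000.0, "reverb_mix": 0.5}
curve = [(0, 0.0), (4, 1.0), (8, 0.0)]
at_bar_2 = interpolate(keyframe_a, keyframe_b, breakpoint_value(curve, 2))
```

Generating a slightly different curve for each copy, for example from a seeded random number generator, would be one way to obtain many related variants; whether Icarus did exactly this is not documented here.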

VIDEO 3.8: Icarus (Ollie Bown and Sam Britton) explaining Fake Fish Distribution (2012).

S.709 (1994) - Iannis Xenakis

Another semi-supervised approach can be found in the compositional method for S.709 (1994) by Iannis Xenakis.

VIDEO 3.9: S.709 (1994) – Iannis Xenakis

This piece followed a long series of works that experimented with “dynamic stochastic synthesis” (to which a chapter is devoted in Formalized Music (Xenakis, 1992)), including pieces such as La Légende d’Eer (1977-1978) and GENDY3 (1991). S.709 uses a combination of two programs, GENDY and PARAG, to construct the work with a blend of human and machine agency. GENDY generates waveforms directly, applying stochastically bounded random walks to the amplitudes and durations of a waveform’s breakpoints. These waveforms are algorithmically determined, and their perceptual outcome would be (at least for me) difficult to predict from understanding the algorithm alone. Despite this, GENDY is a strategy for “encompassing all possible forms from a square wave to white noise” (1992, p. 289). For Xenakis, the challenge is to create music “from a minimum number of premises but which would be of ‘interest’ from a contemporary aesthetical sensitivity” (1992, p. 295). This is not to say that no sonic outcome is imagined beforehand; in fact, conforming to a “contemporary aesthetical sensitivity” is itself perhaps a contributing factor toward Xenakis’ exhaustive computational methodology and his focus on generating a significant amount of textural variation. PARAG works alongside GENDY by concatenating several GENDY outputs to build a larger formal structure. Xenakis (1992, p. 296) states that S.709 is named after a selected sequence output from PARAG: a single rendering from the system among many possible ones.
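The principle of dynamic stochastic synthesis can be sketched as follows. This Python code is a simplified assumption, not Xenakis’s GENDY program: a polygonal waveform has a fixed number of breakpoints, each breakpoint’s amplitude and duration take a bounded random step on every cycle, values that cross a boundary are reflected back inside it, and the waveform is drawn by linear interpolation between breakpoints. Uniform steps replace the various probability distributions Xenakis used, and all parameter values are illustrative.

```python
import random

def mirror(x, lo, hi):
    """Reflect x back into [lo, hi] until it lies inside the range."""
    while x < lo or x > hi:
        x = 2 * lo - x if x < lo else 2 * hi - x
    return x

def gendy_sketch(cycles, points=12, amp_step=0.05, dur_step=1.0, dur_range=(5, 30), seed=0):
    """Return a list of samples built from randomly evolving polygonal wave cycles."""
    rng = random.Random(seed)
    amps = [0.0] * points
    durs = [float(dur_range[0])] * points
    out = []
    for _ in range(cycles):
        # Random-walk every breakpoint's amplitude and duration (in samples).
        for i in range(points):
            amps[i] = mirror(amps[i] + rng.uniform(-amp_step, amp_step), -1.0, 1.0)
            durs[i] = mirror(durs[i] + rng.uniform(-dur_step, dur_step), *dur_range)
        # Render one cycle by linear interpolation between successive breakpoints.
        for i in range(points):
            a0, a1 = amps[i], amps[(i + 1) % points]
            n = max(1, round(durs[i]))
            out.extend(a0 + (a1 - a0) * k / n for k in range(n))
    return out

wave = gendy_sketch(cycles=20, seed=3)
```

Because both amplitudes and durations drift from cycle to cycle, pitch and timbre wander together; narrowing `dur_range` keeps the pitch nearly stable, while wider steps push the result toward noise.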

For S.709, the computer is endowed with a relatively autonomous role of artistic contribution to the work. Xenakis’ own responsibility is to develop the program. In doing so, he encodes his aesthetic desires in a textual format and can only intervene by altering this code and, to an extent, through the arrangements made by the PARAG program. Like Fake Fish Distribution, this work is highly parametric. However, S.709 is less supervised: almost all aspects of composition are given away to the computer to be executed beyond the intervention of the composer, and are only available for modification from a distanced perspective or through the curation of artefacts resulting from the process. Xenakis retains the final say in this work, choosing the “best” output from the computer rather than perfecting a single master rendition that is left unaltered after it has been generated. I engaged with a similar process of curation in Annealing Strategies, where the computer was tasked with making many variants from which I selected the most suitable output. Xenakis reflects on this choice to curate the machine output in his conversations with Balint Andras Varga:

Other composers, like Barbaud, have acted differently. He did some programs using serial principles and declared: ‘The machine gave me that so I have to respect it’. This is totally wrong, because it was he who gave the machine the rule! (Varga, 1996, p. 201)

La Fabrique Des Monstres (2018) - Daniele Ghisi

A contemporary example of an unsupervised approach can be found in La Fabrique des Monstres (2018), a suite of works by Daniele Ghisi. These pieces use SampleRNN (see Mehri et al. (2016)), a recurrent neural network that is trained to model the dependencies between samples in digital audio. It can be used to generate new audio samples, on the assumption that the patterning within digital samples can be represented probabilistically. This process is relatively uncontrollable: one cannot intervene in the intermediary computational processes to understand what the computer is doing or what specifically it is modelling from the input. Unfortunately, Ghisi gives few details of his implementation, such as what the training corpus was. Nonetheless, we can garner an appreciation and feeling of what the computer has learned from the results it has generated.

For example, in FuenfteLetzeLied and PasVraimentHumaine, vocal gestures are artificially extended and elongated by the computer, to the point where in some cases no human could sing these passages. My interpretation from listening to these works is that the training data must have included song cycles or operatic material. What the computer has “learned” from this material is not necessarily stylistic or contextual; rather, it appears to have latched onto the most prominent surface elements of the music and “decided” to proliferate them. In this case, that element is the dominant solo voice. At times in Recitation, structures and shapes similar to comprehensible sentences emerge from rapid phonetics that are shuffled and somewhat random. In other passages, seemingly sophisticated but incomprehensible phrases are strung together without any breaks. This gives a sense that there is some order and patterning to the individual grammatical elements of the machine-generated language, which is simultaneously subverted by the lack of overall structure imposed on them.

Throughout these works, it seems as if the computer learns some significant aspects of the source material. It is clear when a vocalist has been present in the training data, but often what the computer learns from this is purely statistical, and it fails to engage with the aspects of a work that are delicate, nuanced and central to our understanding of it as humans. This produces results that embody a “machine understanding” of the source material, which is then aestheticised in the final work. Ghisi exposes this, which positions the computer as an unsupervised creative force.

Situating My Practice

In [3.5.1 A Spectrum of Autonomy], I have described practices that grant the computer different degrees of autonomy in creative work. What binds these diverse approaches is the way in which computation leads artistic investigation and how a dialogue is created between the human and the machine. Different interfaces enable this communicative and dialogical relationship, depending on the goals of the artist and the degree of control they are willing to give to the computer.

For example, Fake Fish Distribution uses a parametric approach, requiring human input for auditioning and tuning the breakpoint functions and keyframes in order for the computer to produce acceptable outputs. Throughout, the underlying mechanisms are accessed directly, allowing Icarus to interface with the generative aspect by modifying the way interpolation drives musical processes. Much of the compositional work is performed by deciding ranges for parameters, sculpting automation curves and mapping parameter values to musical controls. By comparison, in La Fabrique Des Monstres, Ghisi invests his efforts in influencing the training of the SampleRNN model that will generate sound files. His influence here is limited to serving the machine learning algorithm data to learn from, and he relinquishes the ability to go back and adjust any set of dials, knobs, sliders or constraints that would otherwise affect the output.

For me, these two artists exemplify two ends of the LCGI spectrum when working with computers. At one end, Icarus have created a hierarchical system of control and musical detail that can be modified at a low programmatic level. The computer performs the complex task of interpolating many different musical parameters simultaneously, providing variation without it having to be designed explicitly. This would be incredibly hard, though not impossible, for a human composer, and Icarus retain control over the nature of this process, having designed it themselves. Ghisi, on the other hand, gives away many of these aspects to the closed and inaccessible workings of the SampleRNN algorithm. He cannot modify the nature of this artificial neural network beyond changing the input data, either blindly or in response to what he hears. Much of his own agency is given away to this process, but it produces aesthetically novel results that capture the raw, machine-like behaviour of the computer in sound.

In [3.3 Machine Learning], I describe how machine learning can be a compositional tool that allows me to explore ideas computationally. This situates the computer as an agentive entity in my practice, one that can lead me to solutions for compositional problems that are difficult to define. Throughout this process, I move between several modes of working that engage the computer at different levels of autonomy. With this flexibility, the computer manifests as an augmentative entity in my practice. This notion resonates with Engelbart's (1962) position on computing, in which he posits that "humans could work with computers to support and expand their own problem-solving process" (Carter & Nielsen, 2017, p. 1). The computer is a co-pilot in this creative process and can help me in ways that are not possible with my faculties alone. Content-awareness in my programming practice shapes this process in a novel way, emphasising the act of computational listening. In harnessing content-aware machines for computer-aided composition, my aim is not to investigate formalist approaches in which entire works emerge from algorithmic, rule-based or procedural strategies. Rather, the computer has a mediating role between me and creative action: it is a lens focused on a set of compositional ideas, materials and strategies that are then acted out cooperatively. My practice is a fusion of human and machine in composition, grounded in machines that listen in order to compose with me.

Concluding Remarks

In the previous two sections, [2. Preoccupations] and [3. Content-Awareness], I have put forward a number of concepts that situate my practice within the wider field of computer-aided composition. These concepts also highlight the ways in which content-aware technology is a significant component of my practice. The next section, [4. Projects], contains five subsections. These chronologically outline the compositional work in this PhD and show how this set of works explores the use of content-aware programs in my practice.

Before moving on to the discussion of these individual projects, I will posit three "postures". These serve as concluding remarks on the previous two sections and as a way of framing the discussion in the next five subsections.

My practice is computer-aided composition and uses content-aware programs as an interface for expression. This interface is embedded in an iterative development process. Through machine listening, machine learning and computer assistance, this process engages with sound materials and helps initial ideas and compositional hypotheses reach internal consistency.

Compositional materials are discovered through deconstructing pre-existing sound objects (recordings, synthesis). My practice leverages the strengths of content-aware programs to sieve, filter and search collections of pre-existing materials or to have the computer propose notions of “material groups” or formed sections of music derived through algorithmic and parametric means. Content-aware programs structure listening and engagement with materials, providing a strategy for meaningfully discovering, understanding and then incorporating those materials into composition.

As such, my practice is initialised in a process of extraction, which I set in opposition to construction. Construction comes much later, once sufficient materials have been extracted. Metaphorically, the difference might be described as that between going for a walk and digging for oil. The walk has a notion of a prepared path, but diversions can be made along the way depending on what is discovered or observed in the moment. For the walk there are both "known knowns" and "known unknowns", and this process invites both. Digging for oil, on the other hand, tends towards the observation of "known knowns" and represents a process of refinement toward a defined goal.