Refracted Touch

After composing Stitch/Strata and Annealing Strategies, I felt that the techniques and approaches explored in these works had little room for further development. I discuss this in [4.1.3 Reflecting on Human and Computer Agency in Stitch/Strata] and [4.2.4 Reflecting on Issues of Control and Intervention]. After I completed Annealing Strategies, the ELISION Ensemble visited Huddersfield as guest artists, and I put myself forward to write a piece for Daryl Buckley (electric pedal steel guitar) and electronics. Refracted Touch was the outcome.

The patch used in the performance is available in a GitHub repository. It is structured as a Max project, and can be found at /Projects/Refracted Touch/Code/.

Motivations and Influences

A major part of my motivation for writing Refracted Touch was to take the opportunity of working with a professional instrumentalist and performer, Daryl Buckley. Although I initially did not have a clear or formal plan for this work, I always envisaged it would be based on improvisation and real-time electronics, connecting Daryl and a content-aware computer system. In addition, the previous two pieces, Stitch/Strata and Annealing Strategies, had explored compositional workflows in which I endowed the computer with different levels of agency and relinquished different forms of control. I thought that this would be a good opportunity to see how a real-time, interactive, content-aware system might foster different modes of agency and a different compositional workflow.

As such, this piece is improvisational and grounded in Daryl’s real-time interaction with the computer, which dynamically generates responses depending on what he plays. The machine listening in the piece is based mostly on detecting and measuring changes in amplitude, and the computer’s responses recycle the real-time input of the slide guitar in several ways. To structure this improvisation, Daryl flexibly stretches or compresses a loosely pre-composed form by altering the character and behaviour of his playing between soft and loud extremes.

This piece stands out in the portfolio of this PhD because it was the least successful in terms of developing my compositional workflow and exploring the role of the computer in my practice. Most of all, this piece helped to clarify further what I am willing to relinquish to the computer, and what aspects of composition I want to retain control over.

Musical Influences

Daryl and I worked on this piece remotely until the rehearsals just before its performance on the 29th of April, 2019. Because we live in different countries, our main method of communicating and sharing ideas was for me to ask questions by e-mail and for Daryl to respond with recordings and videos of the techniques and sounds he could produce. This was a significant source of inspiration and guidance for me because it outlined certain constraints on what was possible, and also gave me ideas about what the electronics might do so that their interactions with Daryl would be aesthetically coherent.

In addition to the footage Daryl provided, I listened to and watched as much of his playing as was available online; examples can be found in VIDEO 4.3.1 to VIDEO 4.3.5.

VIDEO 4.3.1: Matthew Sergeant in conversation with ELISION's Daryl Buckley (1 of 2).

VIDEO 4.3.2: Matthew Sergeant in conversation with ELISION's Daryl Buckley (2 of 2).

VIDEO 4.3.3: The Wreck of Former Boundaries (2015) – Aaron Cassidy

VIDEO 4.3.4: Dark Matter: Transmission (2012) – Richard Barrett

VIDEO 4.3.5: Lichen (2007) – Matthew Sergeant

I have some experience playing the guitar, though not extensively, and would consider myself an amateur. I experimented with extended techniques and preparations such as bowing with a cello bow, inserting nails and paper clips, and attaching pegs to strings. Although I could not replicate Daryl’s playing or reproduce the specific techniques he uses on the pedal steel guitar, this gave me a means of tangible experimentation beyond digital samples alone. Working almost entirely remotely and only with test samples, the piece remained in a highly speculative state for much of its development. This had a significant effect on the outcome because I was never working with the exact sounds that would feature in the performance.

Machine Listening for Formal Development

Dealing with compositional structure with the aid of the computer has been a focus in all of the projects so far in this PhD. In Refracted Touch, I envisaged that the computer would be able to influence the form of the piece dynamically by operating within predefined constraints determining interactive relationships and configurations. As a result of Daryl’s improvisation with the machine, I imagined, specific micro- and meso-level events would not have to be designed but would emerge from content-aware responses produced by the computer. I looked to other works where live electronics and interactive systems were used to structure pieces in-the-moment and where the computer was responsible in some way for the structural and temporal aspects of a work. In this regard, Martin Parker’s set of gruntCount pieces were influential. Parker describes the gruntCount system:

Each edition of gruntCount is personalised from the outset, with composer and performer working together to produce the elements of a system for creating well-defined and structured musical pieces that invite liberal performer input, spontaneity and intuition. This preparatory stage usually involves a period of system “training”, in which the composer responds in real time to free improvisations and creates a set of interrelated digital sound processing (DSP) parameter presets unique to the player… Having designed these settings, the compositional agenda proceeds with the plotting of various journeys or curves through the DSP settings. These curves may resemble a graph or automation curve, but in fact represent specific trajectories through a parameter space, which itself has nested settings within it. There is a formal design here, a quality and style, and yet the manner in which the piece is individuated is entirely defined by the live performer, whose physical efforts (or grunts) move the assemblage forward. (Parker & Furniss, 2014, p. 1)

The technicalities of the gruntCount software are straightforward. An onset detector is tuned to detect “grunts” generated by the improviser, which progress the Max patch forward through a set of predefined states. These states are flexibly designed by the improviser. As such, there is a negotiation between what the performer creates beforehand and how that is navigated in real time by triggering grunts. This is relatively simple, but powerful in creating variation and fostering expression through interaction. Furthermore, it draws on the intuition and musicality of the performer while creating a space in which the performer can explore the predefined sound world interactively within a set of formal constraints. Parker demonstrates how the patch works in VIDEO 4.3.6.

VIDEO 4.3.6: Martin Parker demonstrating how to configure and run gruntCount.
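To make the mechanism concrete, the following minimal Python sketch shows an amplitude-onset detector stepping through predefined states. It is not Parker’s Max code. The class name `GruntStepper`, the threshold and release values, and the hysteresis rule used to detect a “grunt” are all illustrative assumptions.

```python
class GruntStepper:
    """Advance through predefined states whenever a "grunt" (amplitude onset) occurs.

    Hypothetical sketch: a grunt is counted when the envelope rises to `threshold`,
    and the detector only re-arms once the envelope has fallen to `release`.
    """

    def __init__(self, states, threshold=0.5, release=0.2):
        self.states = list(states)
        self.threshold = threshold
        self.release = release
        self.index = 0
        self.armed = True  # ready to detect the next grunt

    @property
    def current(self):
        return self.states[self.index]

    def process(self, envelope_value):
        """Feed one envelope value; return True if a grunt moved the piece forward."""
        if self.armed and envelope_value >= self.threshold:
            self.armed = False
            if self.index < len(self.states) - 1:
                self.index += 1
                return True
            return False  # already in the final state
        if not self.armed and envelope_value <= self.release:
            self.armed = True  # performer has relaxed; next effort counts again
        return False


stepper = GruntStepper(["intro", "dense", "sparse", "coda"])
for value in [0.1, 0.7, 0.8, 0.1, 0.9, 0.3, 0.6]:
    stepper.process(value)
print(stepper.current)  # -> sparse
```

The hysteresis means that a sustained loud passage counts as a single grunt. Only a fresh effort after the performer relaxes moves the piece on, which is one simple way of letting the player control pacing.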

The work of Owen Green was also influential to me. I largely drew on signal processing concepts from two pieces, Danger in the Air (2013) and Cardboard Cutout (2013). Danger in the Air features live electronic components that adaptively respond to the input of the performer and occupy a range of agent-like behaviours, from sound-processing module to co-player. From this work, I was inspired by the way that Green made the electronic processing transform throughout the piece by having the real-time granular synthesiser’s parameters adapt to the sound of the input through audio descriptor analysis. This adaptivity and temporal evolution was something that I wanted to capture in my own efforts. Cardboard Cutout uses signal decomposition to separate harmonic from percussive components in a live input signal, in this case a bowed cardboard box. Each of these layers is processed with granulation, and, as in Danger in the Air, the granular synthesis adapts and responds to the qualities of the input signal through the use of audio descriptors. Green’s approach to time and form in this work is constructed as a negotiation between pre-composed sections and the behaviour of the performer, the latter driving a sequencer that moves between those sections. Green describes his approach as follows:

The modulation of the various processes at work in the patch is driven, in large part, by a sequencer that moves through a series of pre-defined steps… The progression of the sequence is still driven by player input, however, so whilst the ordering and certain temporal aspects of the electronic processing are determinate, their precise pacing is adaptive. (Green, 2013, p. 195)

In the later versions of the patch for Refracted Touch, I implemented something similar, where pre-composed states are progressed through according to the accumulated “energy” of Daryl’s input signal. Most importantly, the approaches of Green and Parker focus on how they can balance the liveness of content-aware improvisational systems and their control over such systems. This was an aspect that I had not explored in previous pieces and I wanted to experiment with it for myself.

The Patch

Refracted Touch was composed by iteratively developing a patch using pre-recorded materials derived from my guitar playing and from Daryl’s as test inputs. These versions are not necessarily functional performance patches and can be considered intermediate testing, trialling and sketching that culminated in the final version. This section briefly describes what each patch version does and situates it in the development of the piece. I will separate this section into two sub-sections, [4.3.2.1 Earlier Versions], which describes all of the versions leading up to the final version, and [4.3.2.2 The Final Version and Performance] which considers in more detail the final form of the patch and the results it produced in the first and (at the time of writing) only performance.

Earlier Versions

Version One

I began composing Refracted Touch by collecting and making sounds, recording them and then building signal processing modules with those sounds as test inputs. These modules were not conceived merely as digital audio effects, but as self-contained processes that would produce sound in adaptive and responsive ways using audio descriptors and signal processing. In [4.3.1.2 Machine Listening for Formal Development] I provided some context on the interactive electronic works by others that influenced me to pursue this. Much of my thinking and experimentation at this early stage of the piece was based on studying Green’s and Parker’s code, listening to their work, and drawing those sources into my own experimentation and programming.

The first module I created was a harmonic-percussive source separator. I started with this module as an intuitive choice and experimented on the assumption that aspects of amplified slide guitar playing from Daryl could be separated with this decomposition model and treated in isolation. I reasoned that this might give me access to distinct sounds within his playing which could be exploited by the computer. For example, Daryl often strikes the strings of the pedal steel guitar with metallic objects such as a bar or slide. This technique has a strong percussive attack followed by the harmonic resonance of the amplified guitar with some feedback. I wanted to see if these elements could be separated from each other in the electronics, and if this could render new material or function as a standalone form of processing, akin to a guitar effects pedal. VIDEO 4.3.7 gives an example of this decomposition process on some sampled pedal steel guitar playing with heavily detuned strings.
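The text does not state which separation algorithm the module used. One widely used approach is Derry FitzGerald’s median-filtering method, and the following Python sketch illustrates it as an assumption. Harmonic energy is smooth along time, so median filtering each frequency bin across frames enhances it. Percussive energy is smooth along frequency, so median filtering each frame across bins enhances it. The binary masks, the window width and the toy spectrogram are simplifications for illustration. A real implementation would work on STFT magnitudes and often uses soft masks.

```python
from statistics import median


def median_filter_1d(values, width):
    """Median filter with an odd window `width`; the window shrinks at the edges."""
    half = width // 2
    n = len(values)
    return [median(values[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]


def hpss_masks(spec, width=5):
    """Split a magnitude spectrogram (list of frames, each a list of bins) in two.

    Returns (harmonic, percussive) spectrograms using binary masks.
    """
    n_frames, n_bins = len(spec), len(spec[0])
    # Median along time for each bin: enhances sustained (harmonic) partials.
    harm = [[0.0] * n_bins for _ in range(n_frames)]
    for b in range(n_bins):
        column = median_filter_1d([spec[t][b] for t in range(n_frames)], width)
        for t in range(n_frames):
            harm[t][b] = column[t]
    # Median along frequency for each frame: enhances broadband (percussive) attacks.
    perc = [median_filter_1d(frame, width) for frame in spec]
    harmonic = [[spec[t][b] if harm[t][b] > perc[t][b] else 0.0 for b in range(n_bins)]
                for t in range(n_frames)]
    percussive = [[spec[t][b] if harm[t][b] <= perc[t][b] else 0.0 for b in range(n_bins)]
                  for t in range(n_frames)]
    return harmonic, percussive


# Toy spectrogram: a sustained partial in bin 2 and a broadband hit in frame 4.
n = 7
spec = [[0.0] * n for _ in range(n)]
for t in range(n):
    spec[t][2] = 1.0
for b in range(n):
    spec[4][b] = 1.0
harmonic, percussive = hpss_masks(spec, width=5)
```

In the toy example, the sustained partial ends up in `harmonic` and the broadband hit ends up in `percussive`. This mirrors the intended split between a struck attack and the resonance that follows it.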

I found the percussive extraction the more novel of the two components. When the harmonic component was removed, the expression of articulations and attacks was transformed, and the identity of the source was changed radically. I decided to keep the percussive and harmonic components in buffers, thinking that this partitioning of the input sound might become a central aesthetic feature of the piece as it developed. I imagined that Daryl’s playing would be decomposed by the computer into these distinct components and restructured into new sounds, metaphorically “refracting” his interactions with his instrument (his “touch”). In the first version of the patch, the last few seconds of incoming sound were continually being separated and stored in memory. This construct persists through the various versions of the patch, and real-time signals of source-separated sounds are used by other sound-producing modules. The following list recounts these modules and outlines their sound-producing capabilities.

Short Resonators (demonstrated in VIDEO 4.3.8)

- 32 CNMAT resonators~ in parallel, excited by a noise source.

- Each resonator is set by providing a triplet of values: frequency, gain and decay rate.

- The harmonic component is analysed by the sigmund~ object to produce a set of data describing its sinusoidal components. This gives the frequencies and relative gains of those sinusoids, which are then used to control the resonators~ object.

- An amplitude-onset detector triggers an envelope that allows the resonators~ object to sound momentarily when the guitar is hit.

- The intended effect is that the resonators~ object will resonate “in tune” with the guitar, albeit imperfectly due to discrepancies between the sigmund~ analysis and the perceived pitch, creating a hybrid of the guitar attacks and the parallel filter-bank resonance.
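As a rough illustration of this chain outside Max, the following Python sketch sums two-pole resonators, one per (frequency, gain, decay) triplet. A short noise burst stands in for the onset-gated excitation. This is not the CNMAT implementation. Several details are assumptions: the decay is treated as a time constant in seconds (CNMAT’s decay-rate units may differ), and the `peaks_to_triplets` helper, the peak list and the sample rate are hypothetical.

```python
import math
import random


def resonator_bank(excitation, triplets, sample_rate=44100):
    """Sum of two-pole resonators, one per (frequency_hz, gain, decay_s) triplet."""
    out = [0.0] * len(excitation)
    for freq, gain, decay in triplets:
        r = math.exp(-1.0 / (decay * sample_rate))  # pole radius from decay time
        a1 = 2.0 * r * math.cos(2.0 * math.pi * freq / sample_rate)
        a2 = -r * r
        y1 = y2 = 0.0
        for n, x in enumerate(excitation):
            y = gain * x + a1 * y1 + a2 * y2
            out[n] += y
            y2, y1 = y1, y
    return out


def peaks_to_triplets(peaks, decay=0.5, max_resonators=32):
    """Turn (frequency, amplitude) peaks from a sinusoidal analysis into triplets."""
    strongest = sorted(peaks, key=lambda p: p[1], reverse=True)[:max_resonators]
    return [(freq, amp, decay) for freq, amp in strongest]


sr = 8000
rng = random.Random(0)
burst = [rng.uniform(-1.0, 1.0) for _ in range(80)] + [0.0] * (sr - 80)  # 10 ms "hit"
triplets = peaks_to_triplets([(220.0, 0.5), (440.0, 0.25), (661.0, 0.1)], decay=0.2)
ring = resonator_bank(burst, triplets, sample_rate=sr)
```

Because the peaks come from the guitar’s own harmonic component, the bank rings at roughly the guitar’s partials. Any analysis error shows up directly as the slightly “out of tune” hybrid resonance described above.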

Metallic Feedback

- This module is composed of two identical feedback comb filters that are connected in series.

- They have two parameters: a delay time and a feedback amount.

- They are self-modifying: as the output gets louder, the feedback amount is lowered. The feedback will never exceed 1.0 and attenuating it in this way helps to prevent it from becoming consistently overpowering as well as creating slight variation in response to the input signal.

- The delay time is modulated to a random value between 1 and 7.9 milliseconds for the first comb filter and between 7.34 and 9 milliseconds for the second. The different delay times, combined with the serial connection, give the processing complex, morphing qualities, ranging from tight feedback “plucks” to longer metallic resonances.

- The output of the short resonators is connected to the input of the first feedback comb filter, so that an already resonant, tonal sound is stretched in time and made metallic, giving the impression that material is being recycled.
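The self-modifying behaviour can be sketched in Python as two feedback comb filters in series. In each filter, a follower of the output level lowers the feedback amount. The follower design, the base feedback of 0.95 and the class name `SelfModifyingComb` are assumptions rather than details of the patch. For simplicity, the delay is drawn once from each stated range instead of being modulated over time.

```python
import random


class SelfModifyingComb:
    """Feedback comb filter whose feedback drops as its output gets louder.

    Hypothetical sketch: feedback = base_feedback * (1 - envelope), where
    `envelope` is a one-pole follower of |output|; feedback always stays below 1.0.
    """

    def __init__(self, delay_samples, base_feedback=0.95, smoothing=0.99):
        self.buffer = [0.0] * delay_samples
        self.pos = 0
        self.base_feedback = min(base_feedback, 0.999)
        self.smoothing = smoothing
        self.envelope = 0.0

    def feedback(self):
        return max(0.0, self.base_feedback * (1.0 - min(self.envelope, 1.0)))

    def process(self, x):
        delayed = self.buffer[self.pos]
        y = x + self.feedback() * delayed
        self.buffer[self.pos] = y
        self.pos = (self.pos + 1) % len(self.buffer)
        # Louder output raises the envelope, which lowers feedback for later samples.
        self.envelope = self.smoothing * self.envelope + (1 - self.smoothing) * abs(y)
        return y


def random_delay(sample_rate, low_ms, high_ms, rng):
    """Delay length in samples, drawn uniformly from [low_ms, high_ms]."""
    return max(1, round(rng.uniform(low_ms, high_ms) * sample_rate / 1000))


sr = 44100
rng = random.Random(1)
first = SelfModifyingComb(random_delay(sr, 1.0, 7.9, rng))
second = SelfModifyingComb(random_delay(sr, 7.34, 9.0, rng))
impulse = [1.0] + [0.0] * (sr // 2)
out = [second.process(first.process(x)) for x in impulse]  # series connection
```

Because the feedback never reaches 1.0, the output stays bounded. Tying the feedback to the output level makes loud passages ring for less time than quiet ones.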

Granular Synthesis

- The most recent portion of the percussive component is used as the source for a granular synthesis module. This allows the granular source material to be updated dynamically, and creates a feeling that input material is being recycled by the computer. The parameters for this module are manually fixed to create a textural companion to the input sound. A demonstration is provided in VIDEO 4.3.10.

Cubic Non-linear Distortion

- This module applies cubic non-linear distortion, which, in addition to adding harmonics to the input signal, can alter its dynamics.

The gen code for this distortion module, shown in CODE 4.3.1, was extracted from a set of patches created by Pete Dowling, which can be found on the Cycling ’74 forums in a thread titled “Valve Distortion”. A demonstration of the distortion module can be found in VIDEO 4.3.11.

```
// Cubic non-linear distortion (GenExpr), from Pete Dowling's "Valve Distortion" patches
cnld(xin, offset, drive) {
    offset = clip(offset, 0., 1.);                 // DC offset added before shaping (asymmetry)
    drive = clip(drive, 0., 1.);
    pregain = pow(10., (2. * drive));              // 0 dB at drive = 0, up to +40 dB at drive = 1
    x = clip(((xin + offset) * pregain), -1., 1.);
    cubic = x - (x*x*x)*(0.3333333333);            // soft-clipping curve x - x^3/3, peaks at +/-2/3
    return dcblock(cubic);                         // remove the DC introduced by the offset
}

/* + 1x or 2x more dcblocker after downsampling process */

out1 = cnld(in1, in2, in3);
```

Alongside the sound-processing modules, I began creating higher-level modules that could alter the parameters of the sound-processing modules over time. These higher-level modules could generate events (such as those from onsets), or drive modifications to parameters in response to changes in the input signal measured with audio descriptor analysis. I first experimented with an approach of accumulation, whereby the amplitude of the incoming sound is measured and accumulated up to a specified limit. Once the limit has been reached, the accumulator resets and triggers a generic event that can be used to cause changes elsewhere in the patch. An example of this is demonstrated in VIDEO 4.3.12.
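The accumulation idea can be sketched in a few lines of Python. Amplitude values are summed until a limit is reached. The accumulator then resets and reports an event, together with the time elapsed since the previous reset. The fixed control rate (`rate_hz`) and the class name are assumptions for illustration.

```python
class AmplitudeAccumulator:
    """Accumulate input amplitude up to `limit`, then reset and report an event.

    Hypothetical sketch: amplitudes arrive at a fixed control rate of `rate_hz`
    values per second, so the interval between resets can be measured in seconds.
    """

    def __init__(self, limit, rate_hz=100):
        self.limit = limit
        self.rate_hz = rate_hz
        self.total = 0.0
        self.ticks_since_reset = 0

    def process(self, amplitude):
        """Return seconds since the previous reset when the limit is hit, else None."""
        self.total += abs(amplitude)
        self.ticks_since_reset += 1
        if self.total >= self.limit:
            interval = self.ticks_since_reset / self.rate_hz
            self.total = 0.0
            self.ticks_since_reset = 0
            return interval
        return None


acc = AmplitudeAccumulator(limit=10.0, rate_hz=100)
events = [acc.process(0.5) for _ in range(100)]
print([e for e in events if e is not None])  # -> [0.2, 0.2, 0.2, 0.2, 0.2]
```

Louder input reaches the limit sooner, so events arrive more often and the measured interval shrinks. Each reset can act as a trigger (a “bang”), and the interval can act as a timer value. How that value is mapped onto a parameter such as an envelope length is a separate design choice.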

From this process, different interactions can be set up. For example, the envelope length of the resonator module is based on the interval between resets of an accumulator which listens to the harmonic component of the harmonic-percussive source separator. The more harmonic energy that is put into the system, the longer the envelope becomes. When that same accumulator resets, the resonator itself is toggled between the on and off states. My thinking in this regard was that this type of interactive behaviour would make the resonator module “playable” over longer time scales, and would not restrict the interactive relationship between Daryl and the computer to localised changes in his playing. In the first version of the patch, I noted some imagined applications where this idea of accumulation might be useful. These are described in CODE 4.3.2.

```
The timers could be used for...
--------------------------------------
1. Controlling the rate of something
2. Establishing envelope lengths

The bangs could be used for...
--------------------------------------
1. Engaging long-form changes
2. Turning elements of a system on/off
3. Creating harmonic swells (resonators~)

The raw signal could be used for...
--------------------------------------
1. Pushing an envelope forward
2. Scrubbing through a buffer
3. Alternating between resonator banks
4. Pushing the prevalence of certain systems up/down (long-form stuff)
```

This idea of accumulation appeared in the first version of the patch and remained at the periphery of the work across different versions. In the final version of the patch, it evolved into a pivotal mechanism for driving form through the interactions between Daryl and the computer.

This first patch version primarily functioned as a process for building an aesthetic and compositional language for the piece, and for experimenting with how I might combine computer-generated materials with Daryl’s pedal steel guitar playing. A significant aim within this experimentation was to develop strategies for interactively controlling the sound-producing modules. For these to be successful, they would have to produce results that I found aesthetically satisfying, and also be meaningful and engaging for Daryl in his interaction with the computer.

Version Two

In the second version of the patch, I developed the granular synthesis module further. Although I liked the idea of granulating the percussive component of the input signal, the result was often very homogeneous, especially with larger grain sizes. To mitigate this and to increase the expressiveness of the granular synthesis module, I made it able to modify its own parameters in response to the input signal. For example, instead of new grains being triggered at a fixed rate, the rate was determined by the amplitude of the input signal, so that louder playing would make grains generate faster. Similarly, the time offset of grain generation (the point in the buffer at which a grain starts) is driven by a phasor that increases in speed with amplitude. The intended effect was that the overall intensity of the input sound could soothe or excite the granular synthesis module, so that it behaved coherently with Daryl’s playing. Transposition is also modulated in the same way. IMAGE 4.3.1 depicts the annotated patch for this module.
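The following Python sketch illustrates the amplitude-to-parameter mapping described above. Input amplitude (normalised to 0 to 1) sets the grain rate, the speed of a phasor that chooses each grain’s start position, and the transposition. All of the ranges, the linear mappings and the class name are hypothetical; the patch’s actual scaling is not documented here.

```python
def lerp(lo, hi, t):
    """Linear interpolation from lo (t = 0) to hi (t = 1)."""
    return lo + (hi - lo) * t


class AdaptiveGrainParams:
    """Map input amplitude (0..1) onto grain rate, read position and transposition.

    Hypothetical ranges: louder input gives faster grain triggering, a faster
    phasor scanning the buffer, and upward transposition.
    """

    def __init__(self, rate_range=(5.0, 60.0), scan_range=(0.05, 2.0), max_transpose=12.0):
        self.rate_range = rate_range          # grains per second, quiet -> loud
        self.scan_range = scan_range          # phasor speed in Hz (buffer sweeps per second)
        self.max_transpose = max_transpose    # semitones reached at full amplitude
        self.phase = 0.0                      # grain start position as a fraction of the buffer

    def step(self, amplitude, dt):
        """Advance by dt seconds at the given amplitude; return (rate, start, ratio)."""
        a = min(max(amplitude, 0.0), 1.0)
        grains_per_second = lerp(*self.rate_range, a)
        scan_hz = lerp(*self.scan_range, a)
        self.phase = (self.phase + scan_hz * dt) % 1.0
        ratio = 2.0 ** (a * self.max_transpose / 12.0)  # playback-rate transposition
        return grains_per_second, self.phase, ratio


params = AdaptiveGrainParams()
quiet = params.step(0.1, dt=0.01)
loud = params.step(0.9, dt=0.01)
```

Driving the start position with a phasor, rather than jumping to random offsets, keeps quiet playing on a slow, continuous scan through the buffer. Loud playing sweeps through it quickly.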

In addition to the granular synthesis changes in this version, a new module titled low was created. This module consisted of an onset detector designed to respond to changes between consecutive spectral frames, connected to a playback mechanism. The onset detector was capable of detecting articulation changes when the pedal steel guitar playing involves heavily detuned strings, or when the pitch is synthetically altered by pedals. When these onsets are triggered, the module selects random sections from the harmonic component buffer and plays them back at a reduced speed. This idea was influenced by the way that Daryl would strike heavily detuned strings to create metallic, almost non-instrumental sounds in his recorded improvisations. I experimented with time-stretching recordings of this type of playing in REAPER and liked the way that this could transform attack-based material into textural material. In real time, I imagined that this would provide a contrasting behaviour to Daryl’s often erratic style of playing.
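A detector that responds to changes between consecutive spectral frames is commonly built on spectral flux, which is the sum of positive magnitude increases from one frame to the next. The following Python sketch pairs a spectral-flux onset detector with slowed playback of a randomly chosen buffer segment. The threshold, the segment length, the linear-interpolation resampling and the function names are assumptions. This is not the module’s actual code.

```python
import random


def spectral_flux(prev_frame, frame):
    """Sum of positive magnitude increases between two consecutive spectral frames."""
    return sum(max(0.0, cur - old) for old, cur in zip(prev_frame, frame))


def detect_onsets(frames, threshold):
    """Indices of frames whose flux against the previous frame exceeds `threshold`."""
    return [i for i in range(1, len(frames))
            if spectral_flux(frames[i - 1], frames[i]) > threshold]


def slowed_random_segment(buffer, segment_len, speed, rng):
    """Pick a random segment of `buffer` and resample it at `speed` (< 1 slows it down).

    Uses linear interpolation; the result is roughly segment_len / speed samples long.
    """
    start = rng.randrange(0, len(buffer) - segment_len)
    out = []
    pos = 0.0
    while pos < segment_len - 1:
        i = int(pos)
        frac = pos - i
        a, b = buffer[start + i], buffer[start + i + 1]
        out.append(a + (b - a) * frac)
        pos += speed
    return out


frames = [[0, 0, 0], [0, 0, 0], [1, 1, 1], [1, 1, 1], [0, 0, 0], [0.2, 0, 0]]
onsets = detect_onsets(frames, threshold=1.0)
harmonic_buffer = [float(i) for i in range(1000)]  # stand-in for the harmonic buffer
rng = random.Random(7)
segments = [slowed_random_segment(harmonic_buffer, 100, 0.25, rng) for _ in onsets]
```

Because only increases in magnitude count, the detector reacts to new energy appearing in the spectrum rather than to decays. This helps it track articulations whose pitch is unstable.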

The second version of the patch involved focused experimentation aimed toward establishing increasingly detailed methods of interactive control and also introducing some additional sound-producing modules. Overall, the compositional efforts in the second version can be characterised as honing the conceptual ideas for the piece, and incrementally developing the aesthetic language that I had begun to construct.

Versions Three and Four

Versions three and four are very similar, with the later version mostly implementing fixes to problems and changing the cosmetic aspects of the patch. As such, I have decided to consider them together here. These versions began to progress towards the finalised patch and piece. Several modules were added, and there was an additional focus on creating mechanisms from which form could emerge through the interaction between Daryl and the computer.

Some new modules with highly specific sound-producing capabilities were added to the patch. These and the existing modules were assigned to one of four different module groups. The rationale for this was in part pragmatic, so that each group could be switched on and off dynamically to conserve processing power. On a structural level, this grouping was also representative of how I planned for the computer to shift dynamically between different states of operation. As a result, each group of modules produces a different musical behaviour and is predicated on responding to different aspects of live input from Daryl. I outline these module groups below.

rt.bits

This group performs real-time, descriptor-driven concatenative synthesis. After experimenting with granular synthesis, resonators and feedback, I also wanted to incorporate some of the samples I had made with a dobro guitar into the computer’s synthesis possibilities. These samples are short with fast and pluck-like dynamic profiles. Thus, this group aims to assimilate with and weave into Daryl’s playing by generating gestural and textural streams of concatenated “bits”.

rt.harmfoc

This group initially included the resonators module from version one alongside a new spectral freeze module. After some deliberation, I removed the resonators module, finding that the spectral freeze module was potent enough at creating sympathetic harmonic responses from the computer. The aesthetic aim of this group was to have the computer home in on the harmonic aspects of Daryl’s playing, extending this specific spectral component in time beyond its often ephemeral existence and perception.
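The text does not describe how the spectral freeze was built. A common approach captures one frame’s magnitudes and then resynthesises them indefinitely with fresh random phases, and the following Python sketch illustrates that assumed approach. The inverse FFT and overlap-add stages are omitted, and the class and method names are hypothetical.

```python
import cmath
import math
import random


class SpectralFreeze:
    """Hold one captured magnitude spectrum and emit it with fresh random phases.

    Hypothetical sketch: resynthesis (inverse FFT and overlap-add) is not shown.
    Randomising phases each frame is intended to avoid the periodic artefacts of
    repeating one frame verbatim while sustaining the captured harmonic content.
    """

    def __init__(self, seed=None):
        self.magnitudes = None
        self.rng = random.Random(seed)

    def capture(self, spectrum):
        """Store the magnitudes of a complex spectrum frame (e.g. on a harmonic onset)."""
        self.magnitudes = [abs(c) for c in spectrum]

    def next_frame(self):
        """Return a complex frame with the frozen magnitudes and random phases."""
        if self.magnitudes is None:
            return []
        return [cmath.rect(m, self.rng.uniform(-math.pi, math.pi)) for m in self.magnitudes]


freeze = SpectralFreeze(seed=3)
freeze.capture([complex(0.0, 0.0), complex(3.0, 4.0), complex(1.0, 0.0)])
a, b = freeze.next_frame(), freeze.next_frame()
```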

rt.low

This group added two new companions to the low module described in [4.3.2.1.2 Version Two]. The first is an additional response to the onset detector which focuses on low-frequency sounds. When an onset occurs, a fuzz-bass sample is played back to supplement the slowed-down playback of harmonic material. The second is a feedback delay network with a heavy compressor situated between its input and output. This second addition was created by heavily modifying the metallic feedback module from version one of the patch. This module group is specifically designed to respond to changes within the low-frequency spectrum of Daryl’s playing. In many ways, it shares a similar aesthetic motivation to rt.harmfoc, in that it aims to proliferate and build on a specific perceptual component of Daryl’s playing, albeit one focused on the low register.

rt.disruptor

This last group includes the cubic non-linear distortion module and the granular synthesis module. Daryl’s playing manages to achieve sounds that appear to have been produced digitally or with a synthesiser, despite originating from his pedal steel guitar. I wanted some possibility for mimicry between those types of sounds and something generated by the computer. To realise this, I introduced the Fourses synthesiser from Annealing Strategies as a module. This group of modules can produce sounds that are wild, chaotic and untamed. I envisaged that this group would function as a “disruptor” in the improvisation between Daryl and the computer, creating antagonistic responses and harsh synthetic material.

Up to this point, I had intended that the formal aspects of the work would be governed by the state of the modules. Switching them on and off, or modifying their parameters dynamically, seemed to me to be the only way to shape the piece through the interaction between Daryl and the computer. Each module had been designed and conceived with certain material types in mind, so I theorised that a “state” detector that could alter the internal components of the patch in response to the input of the guitar signal could be a way for the machine to be dynamic, while also being cohesive with Daryl. In addition to this, I envisaged that this strategy might introduce a level of flexibility for Daryl and provide him with a way to interact with the computer in order to influence the form of the piece. Depending on his in-the-moment listening, Daryl could decide to maintain a single idea for as long as needed or to explore the localised behaviours of a state before moving on at his own pace. From the inverse perspective, I also imagined that this would give the computer some ability to be more divergent in the construction of form in real-time by creating a kind of “game”, whereby Daryl could either work with or against the behaviour of that process. From this complex interaction, I imagined that a sophisticated formal structure operating on many levels of granularity would emerge. Such formal preoccupations are discussed in [2.2.3.1 Form and Hierarchy].

In my first attempt to realise this, I used the MuBu Max package, specifically the mubu.gmm object. This object uses a Gaussian mixture model to compare live input data against a set of trained exemplars, and outputs a set of values expressing its confidence that the incoming data is represented by each of the trained exemplars. This is shown in VIDEO 4.3.13. To train the exemplars, I analysed audio files of extended techniques provided by Daryl, describing them with mel-frequency cepstral coefficients (MFCCs).

Using audio samples as input, I tested this in practice by having the classified state turn groups of modules on and off. I found that the classification process was extremely temperamental. Sometimes even the same input data would not be classified into the group it had been trained as. Furthermore, the confidence output was too brittle to measure the balance between states, because the results were often heavily weighted towards a single class. I envisaged that an interesting interactive relationship might emerge if Daryl could blend the musical behaviours of two states and have the computer synthesise appropriate generative responses that hybridised aspects of both. However, the practicalities of performing the right type of material, and of having it measured accurately, introduced several contingencies that I would not be able to test until the rehearsal. As such, I decided not to develop this idea further.
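The following Python sketch illustrates how Gaussian likelihoods computed over many descriptor dimensions can produce lopsided normalised confidences. It is an illustration, not a diagnosis of mubu.gmm’s behaviour in the patch. Each class is modelled by a single diagonal Gaussian (a one-component simplification of a GMM) with equal priors. The class names, means and variances are hypothetical.

```python
import math


def diag_gaussian_loglik(x, mean, var):
    """Log-likelihood of vector x under a Gaussian with diagonal covariance."""
    return -0.5 * sum(
        math.log(2 * math.pi * v) + (xi - m) ** 2 / v for xi, m, v in zip(x, mean, var)
    )


def class_confidences(x, models):
    """Normalised confidences (posteriors with equal priors) for each class.

    `models` maps a class name to (mean, var); log-sum-exp keeps this stable.
    """
    logs = {name: diag_gaussian_loglik(x, m, v) for name, (m, v) in models.items()}
    top = max(logs.values())
    weights = {name: math.exp(ll - top) for name, ll in logs.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def struck_confidence(dims, x=0.6):
    """Confidence for "struck" when the input sits slightly nearer it in every dimension."""
    models = {
        "bowed": ([0.0] * dims, [1.0] * dims),
        "struck": ([1.0] * dims, [1.0] * dims),
    }
    return class_confidences([x] * dims, models)["struck"]


for dims in (1, 13, 40):
    print(dims, round(struck_confidence(dims), 3))
```

With the same small per-dimension bias, the confidence for the nearer class rises from about 0.52 with one dimension to about 0.79 with 13 (a typical MFCC count) and 0.98 with 40. Small, consistent differences across many coefficients therefore tend to push the output towards a single class.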

In the fifth and final version of the patch, I removed the state classification with machine learning from version four. However, I still wanted to leverage this idea of the computer automatically shifting between states where different modules were activated or deactivated in various combinations. To do this I took a much simpler approach in which a set of pre-composed states are progressed through in a preset order. The duration of each state, and thus the rate at which these are navigated through, is dependent on a process of amplitude accumulation, a mechanism that was experimented with in previous versions. While each state is active, the amplitude of the input signal is accumulated up to a specified limit. Once this limit is reached, the state ends and the next one in the linear series begins. Playing quietly could make a state last longer than playing loudly, and stopping playing entirely could extend a state infinitely. Each state enables different combinations of modules from the four groups. This constraint governs the overall nature of the piece at the highest level of form, while micro- and meso-level detail emerges from Daryl’s interaction with the specific modules and the relationships they are designed to have with the input signal.
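The state mechanism described above can be sketched in Python as a linear sequencer. While a state is active, the sequencer accumulates amplitude, and it moves to the next state once the state’s limit is reached. It also exposes a progress fraction of the kind shown on a performer’s progress bar. The usage example borrows the maximum accumulation values of the first three states listed later (75, 75 and 900). The units and rate of the amplitude values, the discarding of any overshoot, and the class name are all assumptions.

```python
class StateSequencer:
    """Step through pre-composed states in a fixed order, driven by accumulated amplitude.

    Each state is (name, limit). Silence adds nothing, so a state can last indefinitely.
    Hypothetical sketch: any overshoot past a limit is discarded at the transition.
    """

    def __init__(self, states):
        self.states = list(states)
        self.index = 0
        self.total = 0.0

    @property
    def finished(self):
        return self.index >= len(self.states)

    @property
    def current(self):
        return None if self.finished else self.states[self.index][0]

    def progress(self):
        """Fraction of the current state's limit reached (e.g. for a progress bar)."""
        if self.finished:
            return 1.0
        return min(self.total / self.states[self.index][1], 1.0)

    def process(self, amplitude):
        """Feed one amplitude value; return the name of a newly started state, if any."""
        if self.finished:
            return None
        self.total += abs(amplitude)
        if self.total >= self.states[self.index][1]:
            self.index += 1
            self.total = 0.0
            return self.current
        return None


seq = StateSequencer([("One", 75), ("Two", 75), ("Three", 900)])
for _ in range(149):
    seq.process(0.5)  # steady playing: 149 * 0.5 = 74.5, just short of the limit
```

To lengthen a state, only its limit needs to change. Louder playing shortens a state, and silence pauses it, which is how the performer shapes the pacing within a fixed order.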

Overall, the development I undertook to create versions three and four of the patch represented a shift in my attention toward the higher-level compositional aspects of the work. I was moving away from designing sounds and building a sound world, and toward thinking about how the form and structure of the piece could evolve and emerge dynamically through interactivity. As such, the patch began to crystallise at the module level, with many modules becoming fixed in their groups and remaining unchanged going forward (aside from small tweaks and tuning). While I initially imagined the patch and piece to be more open and less directed by a linear structure, I found it too difficult to implement this degree of openness merely by designing interactive behaviours, particularly because I was mostly working in the abstract with test inputs.

Final Version and Performance

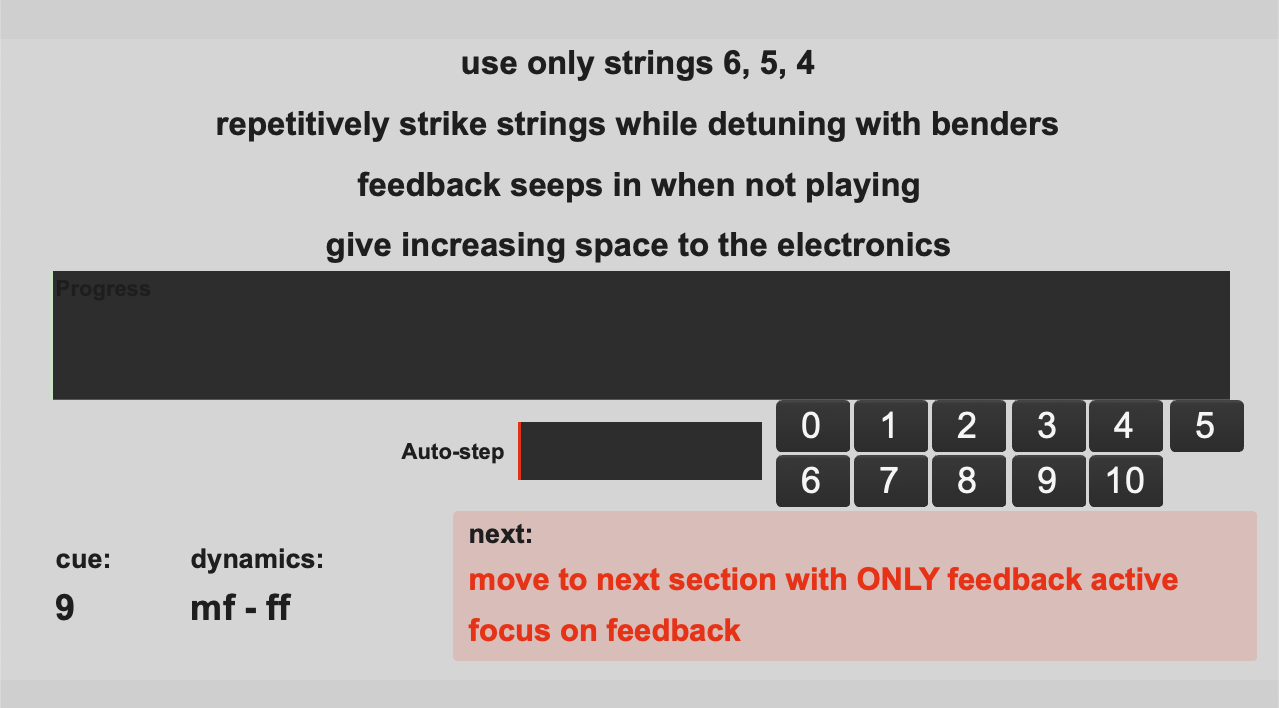

Each pre-composed state is also accompanied by a set of text-based prompts that were delivered to Daryl remotely. He used an iPad in the performance, where the text was presented to him in real-time alongside other information such as his current “progress” through a state. This is depicted in IMAGE 4.3.2.

IMAGE 4.3.2: Daryl's web-based interface for receiving real-time information about patch states. Text-based prompts can be seen in the middle/top-half of the screen. A large progress bar occupies the centre showing his progress through a state.

I found this overall system malleable and simple to explain to Daryl. If either of us wanted a state to last longer overall, all that was required was to increase the limit for the state. Through this simple approach there was enough flexibility for Daryl to shape the structure of the piece while still being constrained by the deterministic aspects that I imposed by ordering and designing the states.

Patch States and Performance

To discuss the relationship between patch states and the navigation between them negotiated by Daryl and the computer, it is relevant now to look at the performance itself and to observe how each state manifested itself and the kinds of musical behaviours that emerged. The following recording is taken from the first performance of the piece which occurred in St Paul’s Hall at the University of Huddersfield on the 29th of April, 2019. The setup of electronics and amplification is detailed in IMAGE 4.3.3. Despite having access to the raw electronic outputs from the recording, I decided not to use them here and instead have used a recording from a single X/Y pair of microphones situated in the centre of the room. I think that this gives a more natural representation of the performance and how the speaker positioning would have affected the experience of hearing the piece.

IMAGE 4.3.3: Electronics, microphone and amplification configuration for the first Refracted Touch performance.

The following list enumerates the states and identifies the times in the recording where they start and finish. The maximum accumulation value is given for each state, as well as the instructions that were delivered to Daryl on his iPad.

One | 0:00 - 1:01

- Maximum Accumulation: 75

- Use high to extreme register mostly

- Use volume pedal to blend effects + natural sound

- experiment with dynamics - processing crushes more when louder

- small, delicate articulations

Two | 1:01 - 1:09

- Maximum Accumulation: 75

- Extremely light, fragile articulations

- Occasional intense bursts

- Guitar samples are matched to onsets

- Slide + bar percussively

Three | 1:09 - 2:06

- Maximum Accumulation: 900

- “Catch” the electronics, play off their behaviour

- More frequent bursts of intensity

- Use more range

For these first three states, the electronics occupy a background role and the overall dynamic of both Daryl and the electronics is intended to be quiet. I wanted the guitar and the electronics to be hard to discern from each other and to be interwoven texturally and behaviourally. There is light processing from the cubic non-linear distortion throughout, and states two and three progressively introduce modules from the rt.bits group. As discussed above, these modules perform descriptor-based concatenative synthesis using samples of extended techniques on a dobro guitar and of modular synthesis. The samples are selected and concatenated by measuring the spectral centroid and amplitude of the guitar’s input signal, with the intention that the morphology of the resulting sound matches Daryl’s playing. In addition, from state three onwards, amplitude onsets from Daryl trigger pre-composed gestural controls that dictate the selection of samples. This is demonstrated in AUDIO 4.3.2, an excerpt from 1:10 to 1:40 isolated from the performance recording.
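Descriptor-based selection of this kind can be summarised as a nearest-neighbour search over a corpus. The Python sketch below is illustrative only: it computes the spectral centroid of one FFT frame and picks the grain whose centroid and amplitude lie closest to the live input. The `Grain` type, the example sample names, the centroid scaling and the use of Euclidean distance are all assumptions, not details of the rt.bits modules.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Grain:
    name: str           # HYPOTHETICAL sample identifier
    centroid_hz: float  # mean spectral centroid of the grain
    amplitude: float    # mean linear amplitude, 0..1


def spectral_centroid(magnitudes, sample_rate, fft_size):
    """Magnitude-weighted mean frequency of one frame (bins 0..fft_size/2)."""
    total = sum(magnitudes)
    if total == 0:
        return 0.0
    bin_hz = sample_rate / fft_size
    return sum(i * bin_hz * m for i, m in enumerate(magnitudes)) / total


def select_grain(corpus, centroid_hz, amplitude, max_centroid_hz=10000.0):
    """Return the grain whose descriptors are nearest to the live input.

    ASSUMPTION: the centroid is scaled to roughly 0..1 by max_centroid_hz so
    that both descriptors carry similar weight in the Euclidean distance.
    """
    def distance(grain):
        dc = (grain.centroid_hz - centroid_hz) / max_centroid_hz
        da = grain.amplitude - amplitude
        return math.hypot(dc, da)

    return min(corpus, key=distance)


# HYPOTHETICAL corpus standing in for dobro and modular-synthesis samples.
EXAMPLE_CORPUS = [
    Grain("dobro_scrape", 3200.0, 0.2),
    Grain("dobro_tap", 800.0, 0.6),
    Grain("modular_hiss", 7500.0, 0.1),
]
```

For example, a quiet, mid-bright input (about 3000 Hz at amplitude 0.25) selects `dobro_scrape` from the example corpus, while a louder, darker one selects `dobro_tap`. Running this selection frame by frame is what lets the concatenated output follow the morphology of the input.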

Daryl also has only a small amount of accumulation to play with in the first two states, which encourages him to play more reservedly than in the rest of the piece.

Four | 2:06 - 2:33

- Maximum Accumulation: 900

- Increase intensity of articulations

- Patch will freeze harmonic moments, exploit this

- Granular synthesis will intermittently join you, work with it when it does

- Onset-based electronics now follow your intensity

This fourth state functions as a bridge to state five. Daryl is given significantly more accumulation to play with, meaning that he can diversify the instrumental techniques he employs and explore louder playing without progressing through the state too quickly. At the same time, the electronics increase in intensity and additional modules are introduced, such as the spectral freeze from rt.harmfoc and the granular synthesis from rt.disruptor. Daryl exploited the intense nature of the granular synthesis to generate an unrelenting texture that is noisy and overpowering. Due to the positioning of the speaker emitting the electronics, this texture is diffused into the room and blends with the other sounds.

Five | 2:33 - 3:23

- Maximum Accumulation: 2000

- Use a range of techniques

- Wild, explosive gestures

- Synthesiser changes with onsets

- Work against and with preset changes

State five is the first major shift in the piece: the modules from the first four states are mostly turned off and a new combination of sound-producing and sound-processing modules is enabled. Most significantly, the Fourses synthesiser becomes active and starts randomly selecting from pre-designed presets in response to amplitude onsets from Daryl. This interaction plays out well and is clearly audible, whereas in previous states the interactions are somewhat concealed and more difficult for the listener to detect. Daryl’s control created some satisfying moments of tension and space. At 3:41 he causes the Fourses synthesiser to change preset and immediately restricts his playing, allowing a moment of reprieve in which low-frequency oscillations are diffused into the performance space. Just before this, at 3:32, Daryl seemed to avoid triggering an onset in order to create a short passage that gradually increases in intensity. This range of play with the onset-detection mechanism allows Daryl to structure the micro- and meso-scale form within this state through his decision-making and playing.

Six | 3:23 - 4:20

- Maximum Accumulation: 500

- Do almost nothing in this section

- Let the synthesiser take the foreground for some time

- Perform intermittent interjections to activate the granular synthesis

- Ornament the synthesiser

State six sets aside time for a reprieve in the piece. I introduced it to give the listener and Daryl a break from the intensity of state five, and to let Daryl assume a more relaxed, background role amid the static texture produced by the electronics.

Seven | 4:20 - 6:03

- Maximum Accumulation: 3000

- Use only strings 6, 5, 4

- Repeatedly strike strings while detuning with benders

- Low drone follows your playing

Daryl continues to extend and reuse techniques and gestures from state six. I imagined that this state would encourage more tightly coupled interaction, with Daryl triggering the rt.low module repeatedly while striking low, detuned strings. Instead, he found a compromise between these instructions and the relatively relaxed nature of state six, producing a sparser rendition of what I intended. Within this, he explores a range of coupling and decoupling behaviours with the computer by switching between the registers of his guitar. His high, almost shrieking gestures do not activate the rt.low module, and Daryl exploits this to improvise independently before moving to the lower register to re-integrate with the computer.

Eight | 6:03 - 7:08

- Maximum Accumulation: 3000

- Use only strings 6, 5, 4

- Repeatedly strike strings while detuning with benders

- Electronics recycle material when you are not playing

- Create a dialogue with electronics

State eight is essentially an extension of state seven: the same modules are active, with the addition of a sub-module of rt.low that plays back buffered harmonic material at a slow playback rate. Due to the reverse-facing speaker, this creates diffuse, ambient textures. Daryl continues to alternate between registers in order to play with the onset-driven interactions that respond to his low-frequency playing.

Nine | 7:08 - 8:39

- Maximum Accumulation: 3000

- Use only strings 6, 5, 4

- Repeatedly strike strings while detuning with benders

- Feedback seeps in when not playing

- Give space to the electronics

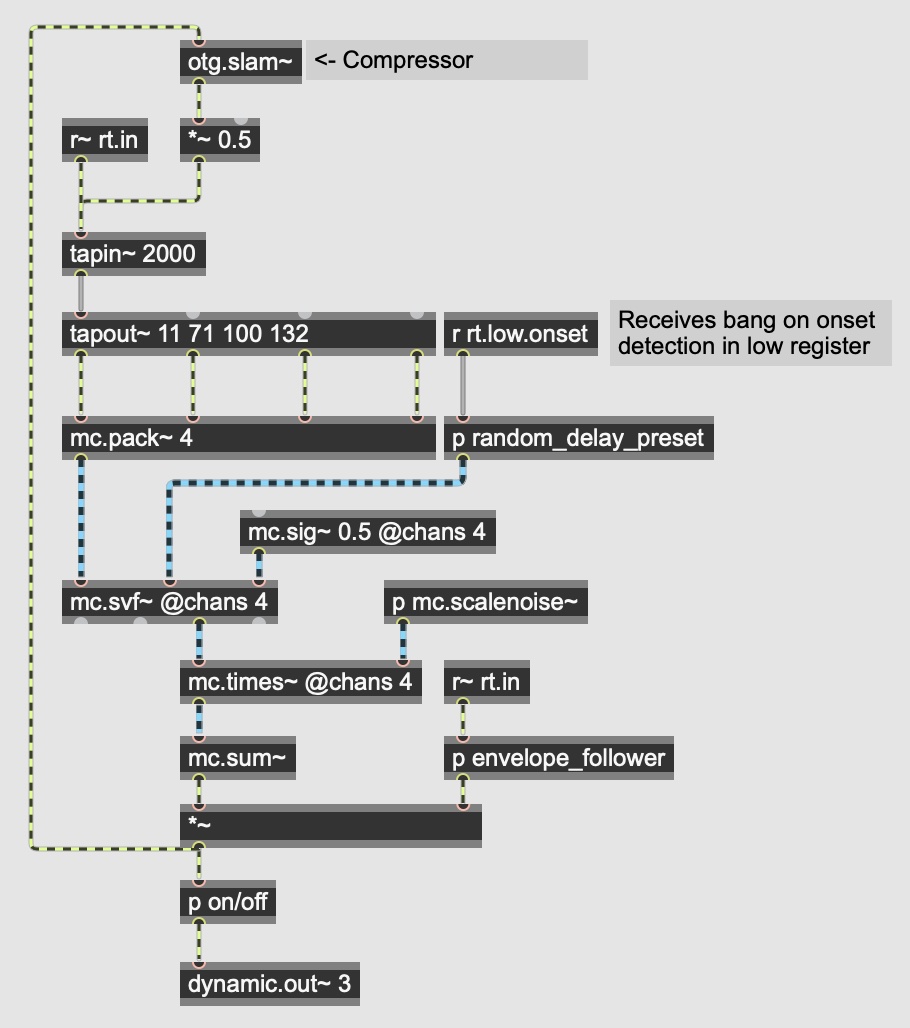

The feedback module of the rt.low group enters the fray in state nine. IMAGE 4.3.4 shows the patching for this module, which uses four delay lines connected to filters and a compressor that automatically attenuates the volume and intensity of the feedback. The delay times change dynamically in response to onsets by selecting one of several predetermined presets.
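Onset-driven preset switching of this kind (also used, with random selection, by the Fourses synthesiser in state five) can be sketched as follows. This Python example is a model under stated assumptions rather than the patch itself: the onset rule (a rise above an absolute floor and above a multiple of the previous frame's amplitude, with a minimum gap between onsets), the delay-time values and the choice of a random preset different from the current one are all hypothetical.

```python
import random

# HYPOTHETICAL delay times in milliseconds for the four delay lines.
DELAY_PRESETS = [
    (37.0, 53.0, 71.0, 97.0),
    (120.0, 180.0, 240.0, 310.0),
    (5.1, 7.3, 11.9, 13.7),
]


class OnsetPresetSwitcher:
    """Switch to a new preset whenever an amplitude onset is detected.

    ASSUMPTION: an onset is a frame whose amplitude is at least `floor`,
    at least `ratio` times the previous frame's amplitude, and at least
    `min_gap` frames after the last onset.
    """

    def __init__(self, presets, floor=0.05, ratio=2.0, min_gap=10, seed=None):
        self.presets = presets
        self.floor = floor
        self.ratio = ratio
        self.min_gap = min_gap
        self.rng = random.Random(seed)
        self.current = 0
        self.prev_amp = 0.0
        self.frames_since_onset = min_gap  # allow an onset straight away

    def process(self, amp):
        """Analyse one amplitude frame and return the active preset."""
        self.frames_since_onset += 1
        onset = (
            amp >= self.floor
            and amp >= self.ratio * self.prev_amp
            and self.frames_since_onset >= self.min_gap
        )
        self.prev_amp = amp
        if onset and len(self.presets) > 1:
            self.frames_since_onset = 0
            # Pick any preset except the current one, so each onset is audible.
            others = [i for i in range(len(self.presets)) if i != self.current]
            self.current = self.rng.choice(others)
        return self.presets[self.current]
```

Excluding the current preset guarantees an audible change at every onset; a plain uniform choice over all presets would occasionally reselect the same one. The minimum gap keeps a single struck chord from cycling through several presets in quick succession.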

Daryl sparingly activates the fuzz bass module and generally responds directly to the feedback sound by matching its intensity. Through his focused interaction with the feedback module, Daryl tapers the dynamics of this state towards the next one simply by waiting and by avoiding playing that would excite the feedback delay network too much, trigger the low fuzz sound, or create a low-frequency onset that would trigger slow playback of buffered harmonic content.

Ten | 8:39 - 9:53

- Maximum Accumulation: 20000

- Fiery, searing harmonic tones captured and held

- Granular synthesis will regurgitate — exploit this

- Finish with a rich cluster of feedback and frozen sounds

State ten concludes the piece and focuses on sustaining sounds created by Daryl. The granular synthesis, feedback and spectral freeze are the only active modules. Each of these is harder to interact with directly because of the way it processes sound. Because they all elongate different aspects of what Daryl is doing, they appear more autonomous and decoupled from him. This creates a state that is suspended in time and concludes only when Daryl stops playing.

Reflection

Refracted Touch was an enjoyable piece to workshop and put together in the short time that I had with Daryl. Despite the creative interactions it afforded, I think that many of the circumstances surrounding its creation made it difficult to compose, primarily because the design and experimentation in Max was highly speculative with respect to what Daryl could or might do in an improvisation. I was also testing entirely with samples that did not necessarily reflect the realities of live, real-time signal input. Daryl and I had time constraints to work within, since he had other pieces to learn and perform in the short window leading up to the performance. Because of these constraints, the patch was designed, as much as possible, to mitigate failure and guarantee musical success in the performance. This is perhaps antithetical to the openness of live interactive electronics, and this tension was at times difficult to deal with.

Speaking from a position of reflection at the end of this PhD, I also believe that my compositional workflow generally depends on many stages of testing and failure in collaboration with the computer, both to reach better results and to realise what the piece actually is. In Stitch/Strata, it was through the challenges of balancing my agency with that of the computer that I eventually settled into a workflow combining computer-generated outputs with my intuition in creative decision making. Similarly, Annealing Strategies was successful because I was able to generate numerous outputs and select the one I found most aesthetically pleasing. Such a process undoubtedly produced more failed attempts than successful ones. This kind of iterative workflow with the computer was not feasible for Refracted Touch, and my only ways of dealing with compositional materials were to design different sound-producing modules speculatively, or to encourage Daryl to perform specific techniques through the iPad interface. I felt that the former approach had an insufficient effect on the overall piece or was at times temperamental, and the more I constrained Daryl, the less potent the entire notion of an interactive piece became.

I also think that the overall technological approach was too diffuse, and that I tried to incorporate too many sonic elements into the piece. In hindsight, I would have reduced the modules to a more flexible subset, rather than having each module perform a specific musical role. Although it was not a conscious decision, I believe that because I had limited time to rehearse and experiment in person with Daryl, I created a patch from which I could remove modules if needed, rather than committing entirely to a smaller set of options, each carrying more weight in the overall function of the computer system. Having heard the piece in rehearsal and performance, I think that the later states (particularly five to ten) offered the most expressive and varied ways for Daryl to interact with the computer, especially over longer time scales and in relationships where the two were less tightly coupled in their behaviour.

Discrepancies Between Speculation and Reality

Most of my speculative design methodology for the sound-producing modules was based on how I imagined they would respond to and interact with the pedal steel guitar. Because the piece was improvised, many of these specific ideas never came to fruition, and Daryl’s style and approach were to explore broad sonic territories and gestural behaviours. In other words, I had implemented many modules that responded to aspects of an improvisation that I might be interested in, but not necessarily to what Daryl would do, or did, in the performance. For example, one can hear that in the first three states, the onset detection that provokes and controls the electronics does not necessarily align in a meaningful way with the gestures Daryl is performing. This difference largely arose because I was testing this interaction with my own samples, and Daryl’s way of improvising is very different from my own style and performative behaviours. Daryl and I had different ideas of what counts as extremely quiet, and while my artistic planning is necessary and fundamental to the creation of the piece, so too is Daryl’s comfort and expression as a performer interacting with the computer. In some cases, these two things did not coalesce into something that was interactively successful. Similarly, certain states ask the performer to use specific techniques or to adhere to constraints on strings or range. While these are not strict commands, I designed the modules to work with certain materials that I had heard Daryl produce in recordings available online. Undoubtedly, the natural flow of an improvisation sometimes leads improvisers in unforeseen directions, and at times it would not have made musical sense for Daryl to confine himself to those techniques in the moment. As such, the modules belonging to a state were sometimes incompatible with the material that Daryl was exploring at that moment in the improvisation.

These discrepancies emerged in states seven, eight and nine, where I ask Daryl to use only the three lowest-pitched strings (six, five and four). I planned for him to explore extremely low-frequency material throughout these states, mainly informed by the sounds that I heard him produce in Matthew Sergeant’s piece Lichen (2017) at 2:42. In Refracted Touch, however, states six to ten lasted for over six minutes, and I believe that this was too long and constraining for Daryl, which is why he decided to explore other techniques and areas of his playing. This allowed him to excite and provoke the electronics in ways that I had not planned, such as juxtaposing high material against the low, fuzzy and distorted electronics, although it diminished many of the core interactive relationships I had set up.

Final Remarks

After listening to the dress rehearsal and performance recording, I was satisfied with some aspects of the compositional approach. Furthermore, I would not have been able to compose such a piece outside of the interactive live electronics paradigm. Despite these successes, the positive aspects were not controllable or reproducible, owing both to the sheer complexity of interactive, real-time improvisational decision making and to the sound modules being mostly indeterminate at the micro- and meso-scale, so that they could change each time they were played with.

In this reflection I came to a realisation similar to the one I had experienced in Stitch/Strata and Annealing Strategies: that harnessing the computer for composition is a sensitive process of balancing my compositional control against the computer’s contribution to the overall creative process. While I want the computer to influence the emergence of musical ideas and sounds, I need to be able to interact with what it does in order to build on these outputs. For me, being able to store, modify and incorporate materials in states of varied completeness is essential to this, but Refracted Touch engendered a workflow that was not based on creating materials that could be curated in this way. Instead, I had to operate through multiple layers of abstraction and distance. Firstly, I had to create modules to generate sound. Secondly, I had to devise mechanisms to organise the behaviour of those modules. Lastly, I had to consider the complex role that a musician would have in this process. Given these issues, I did not believe that live electronics and improvisation offered a path forward from this piece that would have further value in this research.

The next project discussed is Reconstruction Error. Reconstruction Error and the final project, Interferences, are particularly important among the works in this PhD thesis because they developed a computer-aided workflow that gained more traction than these first three projects, and they spurred the creation of tools that I have integrated more deeply into my practice.